Merge upstream #299

…file ## What changes were proposed in this pull request? As the Spark code changes, new events appear in the event log: apache#19649 We used to maintain a whitelist to avoid exceptions: apache#15663 Currently the Spark history server stops parsing when it hits an unknown event or an unrecognized property, so only part of the UI data may be visible. For better compatibility, we can ignore unknown events and keep parsing through the log file. ## How was this patch tested? Unit test Author: Wang Gengliang <[email protected]> Closes apache#19953 from gengliangwang/ReplayListenerBus.
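The idea of skipping unknown events instead of aborting the replay can be sketched roughly as follows. This is a plain-Python illustration, not the history server's Scala code: `KNOWN_EVENTS` and `replay` are hypothetical names, and the sample log lines are made up for the example.

```python
import json

# Hypothetical set of event types this reader understands (illustration only).
KNOWN_EVENTS = {"SparkListenerJobStart", "SparkListenerJobEnd"}


def replay(lines):
    """Parse an event log line by line, skipping events it does not recognize."""
    parsed, skipped = [], 0
    for line in lines:
        event = json.loads(line)
        if event.get("Event") not in KNOWN_EVENTS:
            # Unknown event: count it and continue instead of stopping the replay.
            skipped += 1
            continue
        parsed.append(event)
    return parsed, skipped


# Made-up sample log with one event type the reader does not know about.
log = [
    '{"Event": "SparkListenerJobStart", "Job ID": 0}',
    '{"Event": "SomeFutureEvent", "Payload": 1}',
    '{"Event": "SparkListenerJobEnd", "Job ID": 0}',
]
events, skipped = replay(log)
print(len(events), skipped)
```

With a stop-on-unknown policy, the reader would lose the job-end event that follows the unrecognized line; skipping keeps everything it does understand.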

## What changes were proposed in this pull request? PR closed with all the comments -> apache#19793 It solves the problem when submitting a wrong CreateSubmissionRequest to Spark Dispatcher was causing a bad state of Dispatcher and making it inactive as a Mesos framework. https://issues.apache.org/jira/browse/SPARK-22574 ## How was this patch tested? All spark test passed successfully. It was tested sending a wrong request (without appArgs) before and after the change. The point is easy, check if the value is null before being accessed. This was before the change, leaving the dispatcher inactive: ``` Exception in thread "Thread-22" java.lang.NullPointerException at org.apache.spark.scheduler.cluster.mesos.MesosClusterScheduler.getDriverCommandValue(MesosClusterScheduler.scala:444) at org.apache.spark.scheduler.cluster.mesos.MesosClusterScheduler.buildDriverCommand(MesosClusterScheduler.scala:451) at org.apache.spark.scheduler.cluster.mesos.MesosClusterScheduler.org$apache$spark$scheduler$cluster$mesos$MesosClusterScheduler$$createTaskInfo(MesosClusterScheduler.scala:538) at org.apache.spark.scheduler.cluster.mesos.MesosClusterScheduler$$anonfun$scheduleTasks$1.apply(MesosClusterScheduler.scala:570) at org.apache.spark.scheduler.cluster.mesos.MesosClusterScheduler$$anonfun$scheduleTasks$1.apply(MesosClusterScheduler.scala:555) at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59) at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48) at org.apache.spark.scheduler.cluster.mesos.MesosClusterScheduler.scheduleTasks(MesosClusterScheduler.scala:555) at org.apache.spark.scheduler.cluster.mesos.MesosClusterScheduler.resourceOffers(MesosClusterScheduler.scala:621) ``` And after: ``` "message" : "Malformed request: org.apache.spark.deploy.rest.SubmitRestProtocolException: Validation of message CreateSubmissionRequest 
failed!\n\torg.apache.spark.deploy.rest.SubmitRestProtocolMessage.validate(SubmitRestProtocolMessage.scala:70)\n\torg.apache.spark.deploy.rest.SubmitRequestServlet.doPost(RestSubmissionServer.scala:272)\n\tjavax.servlet.http.HttpServlet.service(HttpServlet.java:707)\n\tjavax.servlet.http.HttpServlet.service(HttpServlet.java:790)\n\torg.spark_project.jetty.servlet.ServletHolder.handle(ServletHolder.java:845)\n\torg.spark_project.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:583)\n\torg.spark_project.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1180)\n\torg.spark_project.jetty.servlet.ServletHandler.doScope(ServletHandler.java:511)\n\torg.spark_project.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1112)\n\torg.spark_project.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)\n\torg.spark_project.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:134)\n\torg.spark_project.jetty.server.Server.handle(Server.java:524)\n\torg.spark_project.jetty.server.HttpChannel.handle(HttpChannel.java:319)\n\torg.spark_project.jetty.server.HttpConnection.onFillable(HttpConnection.java:253)\n\torg.spark_project.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:273)\n\torg.spark_project.jetty.io.FillInterest.fillable(FillInterest.java:95)\n\torg.spark_project.jetty.io.SelectChannelEndPoint$2.run(SelectChannelEndPoint.java:93)\n\torg.spark_project.jetty.util.thread.strategy.ExecuteProduceConsume.executeProduceConsume(ExecuteProduceConsume.java:303)\n\torg.spark_project.jetty.util.thread.strategy.ExecuteProduceConsume.produceConsume(ExecuteProduceConsume.java:148)\n\torg.spark_project.jetty.util.thread.strategy.ExecuteProduceConsume.run(ExecuteProduceConsume.java:136)\n\torg.spark_project.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:671)\n\torg.spark_project.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:589)\n\tjava.lang.Thread.run(Thread.java:745)" ``` 
Author: German Schiavon <[email protected]> Closes apache#19966 from Gschiavon/fix-submission-request.
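The "check for null before access" idea amounts to rejecting a malformed request at submission time rather than letting it fail later inside the scheduler thread. A minimal sketch, assuming a request represented as a dictionary; `REQUIRED_FIELDS`, `SubmissionError`, and `validate_submission` are hypothetical names and the field list is only an illustrative subset:

```python
class SubmissionError(ValueError):
    """Raised when a submission request is missing required data."""


# Illustrative subset of fields; the real request class defines its own list.
REQUIRED_FIELDS = ("appResource", "mainClass", "appArgs")


def validate_submission(request):
    """Reject the request up front if any required field is absent or None."""
    missing = [name for name in REQUIRED_FIELDS if request.get(name) is None]
    if missing:
        raise SubmissionError("Malformed request: missing " + ", ".join(missing))
    return request


good = {"appResource": "app.jar", "mainClass": "Main", "appArgs": []}
bad = {"appResource": "app.jar", "mainClass": "Main"}  # no appArgs

validate_submission(good)
try:
    validate_submission(bad)
    rejected = False
except SubmissionError as err:
    rejected = True
    message = str(err)
```

The caller gets an error response for the bad request, and the component that later builds the driver command never sees a missing value.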

…es in elt ## What changes were proposed in this pull request? In SPARK-22550, which fixes the 64KB JVM bytecode limit problem with elt, `buildCodeBlocks` is used to split the code. However, we should use `splitExpressionsWithCurrentInputs` because it considers both normal and wholestage codegen (splitting is not supported yet under wholestage codegen, so in that case it simply doesn't split the code). ## How was this patch tested? Existing tests. Author: Liang-Chi Hsieh <[email protected]> Closes apache#19964 from viirya/SPARK-22772.

Use a semaphore to synchronize the tasks with the listener code that is trying to cancel the job or stage, so that the listener won't try to cancel a job or stage that has already finished. Author: Marcelo Vanzin <[email protected]> Closes apache#19956 from vanzin/SPARK-22764.
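One way such synchronization can look, sketched with Python threads rather than Spark tasks and listeners: the task blocks on a semaphore that the listener releases only after it has issued the cancellation, so the task cannot finish first. All names here are hypothetical and the shape of the real change may differ.

```python
import threading

# Starts at 0, so acquire() blocks until the listener releases it.
cancel_issued = threading.Semaphore(0)
state = {"cancelled": False, "finished_before_cancel": False}


def task():
    # Wait until the listener has had its chance to cancel.
    cancel_issued.acquire()
    if not state["cancelled"]:
        state["finished_before_cancel"] = True


def listener_on_task_start():
    # Stands in for cancelling the job or stage.
    state["cancelled"] = True
    cancel_issued.release()


worker = threading.Thread(target=task)
worker.start()
listener_on_task_start()
worker.join()
print(state)
```

Without the semaphore, the worker could run to completion before the listener acts, which is the race the commit message describes.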

## What changes were proposed in this pull request? This pr fixed a compilation error of TPCDS `q75`/`q77` caused by apache#19813; ``` java.util.concurrent.ExecutionException: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 371, Column 16: failed to compile: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 371, Column 16: Expression "bhj_matched" is not an rvalue at com.google.common.util.concurrent.AbstractFuture$Sync.getValue(AbstractFuture.java:306) at com.google.common.util.concurrent.AbstractFuture$Sync.get(AbstractFuture.java:293) at com.google.common.util.concurrent.AbstractFuture.get(AbstractFuture.java:116) at com.google.common.util.concurrent.Uninterruptibles.getUninterruptibly(Uninterruptibles.java:135) ``` ## How was this patch tested? Manually checked `q75`/`q77` can be properly compiled Author: Takeshi Yamamuro <[email protected]> Closes apache#19969 from maropu/SPARK-22600-FOLLOWUP.

…S q75/q77" This reverts commit ef92999.

…ns under wholestage codegen" This reverts commit c7d0148.

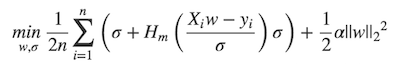

## What changes were proposed in this pull request? MLlib ```LinearRegression``` supports _huber_ loss in addition to _leastSquares_ loss. The huber loss objective function is:  Refer to Eq.(6) and Eq.(8) in [A robust hybrid of lasso and ridge regression](http://statweb.stanford.edu/~owen/reports/hhu.pdf). This objective is jointly convex as a function of (w, σ) ∈ R × (0,∞), so we can use L-BFGS-B to solve it. The current implementation is a straightforward port of Python scikit-learn's [```HuberRegressor```](http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.HuberRegressor.html). There are some differences: * We use mean loss (```lossSum/weightSum```), but sklearn uses total loss (```lossSum```). * We multiply the loss function and L2 regularization by 1/2. Multiplying the whole formula by a constant factor does not affect the result; we do it only to stay consistent with _leastSquares_ loss. So when fitting without regularization, MLlib and sklearn produce the same output. When fitting with regularization, MLlib's ```regParam``` should be set to the sklearn regularization value divided by the number of instances to match the output of sklearn. ## How was this patch tested? Unit tests. Author: Yanbo Liang <[email protected]> Closes apache#19020 from yanboliang/spark-3181.
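The relationship between the two scalings can be checked numerically. The sketch below assumes the objective has the form documented for scikit-learn's `HuberRegressor` (a per-instance term `σ + H_ε((x·w − y)/σ)·σ` plus an L2 penalty), applies the two differences listed above, and uses a single feature with no intercept and unit instance weights. All function names are illustrative, not MLlib's.

```python
def huber(z, eps):
    """H_eps(z): quadratic for |z| < eps, linear beyond it."""
    return z * z if abs(z) < eps else 2 * eps * abs(z) - eps * eps


def loss_sum(w, sigma, xs, ys, eps):
    """Total loss over all instances (unit weights assumed)."""
    return sum(sigma + huber((x * w - y) / sigma, eps) * sigma
               for x, y in zip(xs, ys))


def sklearn_style(w, sigma, xs, ys, eps, alpha):
    # Total loss plus the L2 penalty, with no extra factors.
    return loss_sum(w, sigma, xs, ys, eps) + alpha * w * w


def mllib_style(w, sigma, xs, ys, eps, reg_param):
    # Mean loss, with both the loss and the L2 penalty multiplied by 1/2.
    n = len(xs)
    return 0.5 * loss_sum(w, sigma, xs, ys, eps) / n + 0.5 * reg_param * w * w


xs, ys = [1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 10.0]
w, sigma, eps, alpha = 1.0, 1.0, 1.35, 0.4
n = len(xs)

a = sklearn_style(w, sigma, xs, ys, eps, alpha)
b = mllib_style(w, sigma, xs, ys, eps, reg_param=alpha / n)
print(abs(2 * n * b - a) < 1e-9)
```

With `reg_param = alpha / n`, the MLlib-style value is the sklearn-style value divided by the constant `2n` at every `(w, σ)`, so both objectives share the same minimizer.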

## What changes were proposed in this pull request? This PR provides DataSourceV2 API support for structured streaming, including new pieces needed to support continuous processing [SPARK-20928]. High level summary: - DataSourceV2 includes new mixins to support micro-batch and continuous reads and writes. For reads, we accept an optional user specified schema rather than using the ReadSupportWithSchema model, because doing so would severely complicate the interface. - DataSourceV2Reader includes new interfaces to read a specific microbatch or read continuously from a given offset. These follow the same setter pattern as the existing Supports* mixins so that they can work with SupportsScanUnsafeRow. - DataReader (the per-partition reader) has a new subinterface ContinuousDataReader only for continuous processing. This reader has a special method to check progress, and next() blocks for new input rather than returning false. - Offset, an abstract representation of position in a streaming query, is ported to the public API. (Each type of reader will define its own Offset implementation.) - DataSourceV2Writer has a new subinterface ContinuousWriter only for continuous processing. Commits to this interface come tagged with an epoch number, as the execution engine will continue to produce new epoch commits as the task continues indefinitely. Note that this PR does not propose to change the existing DataSourceV2 batch API, or deprecate the existing streaming source/sink internal APIs in spark.sql.execution.streaming. ## How was this patch tested? Toy implementations of the new interfaces with unit tests. Author: Jose Torres <[email protected]> Closes apache#19925 from joseph-torres/continuous-api.

SQLConf allows some callers to define a custom default value for configs, which slightly complicates the handling of fallback config entries, since most of the default value resolution is hidden by the config code. This change peeks into the internals of these fallback configs to figure out the correct default value, and also returns the current human-readable default when showing the default value (e.g. through "set -v"). Author: Marcelo Vanzin <[email protected]> Closes apache#19974 from vanzin/SPARK-22779.

…WritableColumnVector ## What changes were proposed in this pull request? These dictionary related APIs are special to `WritableColumnVector` and should not be in `ColumnVector`, which will be public soon. ## How was this patch tested? existing tests Author: Wenchen Fan <[email protected]> Closes apache#19970 from cloud-fan/final.

## What changes were proposed in this pull request? `ColumnVector.anyNullsSet` is not called anywhere except tests, and we can easily replace it with `ColumnVector.numNulls > 0` ## How was this patch tested? existing tests Author: Wenchen Fan <[email protected]> Closes apache#19980 from cloud-fan/minor.

## What changes were proposed in this pull request? This PR adds a check to `TPCDSQuerySuite` of whether Java code generated by Catalyst can be compiled correctly by `janino`. Before this PR, the suite only checked whether analysis could be performed correctly. The new check helps avoid unexpected performance degradation caused by falling back to interpreted execution after a Java compilation error. ## How was this patch tested? Updated an existing test case. Author: Kazuaki Ishizaki <[email protected]> Closes apache#19971 from kiszk/SPARK-22774.

## What changes were proposed in this pull request? In many text analysis problems, it is often not desirable for the rows to be split by "\n". A wholeText reader exists for the RDD API, and this JIRA just adds the same support for the Dataset API. ## How was this patch tested? Added relevant new tests for both Scala and Java APIs Author: Prashant Sharma <[email protected]> Author: Prashant Sharma <[email protected]> Closes apache#14151 from ScrapCodes/SPARK-16496/wholetext.

…e in scala-2.12 ## What changes were proposed in this pull request? Missing some changes about limit in TaskSetManager.scala ## How was this patch tested? running tests Please review http://spark.apache.org/contributing.html before opening a pull request. Author: kellyzly <[email protected]> Closes apache#19976 from kellyzly/SPARK-22660.2.

…rnetesClusterManager ## What changes were proposed in this pull request? This PR adds the missing service metadata for `KubernetesClusterManager`. Without the metadata, the service loader couldn't load `KubernetesClusterManager`, which caused the driver to fail to create an `ExternalClusterManager`, as reported in SPARK-22778. The PR also changes the `k8s:` prefix to `k8s://`, which is what existing Spark on k8s users are familiar with and used to. ## How was this patch tested? Manual testing verified that the fix resolved the issue in SPARK-22778. /cc vanzin felixcheung jiangxb1987 Author: Yinan Li <[email protected]> Closes apache#19972 from liyinan926/fix-22778.

…amExecution. ## What changes were proposed in this pull request? StreamExecution is now an abstract base class, which MicroBatchExecution (the current StreamExecution) inherits. When continuous processing is implemented, we'll have a new ContinuousExecution implementation of StreamExecution. A few fields are also renamed to make them less microbatch-specific. ## How was this patch tested? refactoring only Author: Jose Torres <[email protected]> Closes apache#19926 from joseph-torres/continuous-refactor.

## What changes were proposed in this pull request? Since Hive 2.0+ upgrades log4j to log4j2, a lot of [changes](https://issues.apache.org/jira/browse/HIVE-11304) were made around it. As Spark is not ready to update its built-in Hive version (1.2.1), I made only small changes: the function registerCurrentOperationLog is moved from SQLOperation to its parent class ExecuteStatementOperation so Spark can use it. ## How was this patch tested? manual test Closes apache#19721 from ChenjunZou/operation-log. Author: zouchenjun <[email protected]> Closes apache#19961 from ChenjunZou/spark-22496.

## What changes were proposed in this pull request? Add a test suite to ensure all the TPC-H queries can be successfully analyzed, optimized and compiled without hitting the max iteration threshold. ## How was this patch tested? N/A Author: gatorsmile <[email protected]> Closes apache#19982 from gatorsmile/testTPCH.

## What changes were proposed in this pull request? As discussed in apache#16481 and apache#18975 (comment), the BaseRelation returned by `dataSource.writeAndRead` is currently only used in `CreateDataSourceTableAsSelect`, and planForWriting and writeAndRead share some common code paths. In this patch I removed the writeAndRead function and added the getRelation function, which is only used in `CreateDataSourceTableAsSelectCommand` when saving data to a non-existing table. ## How was this patch tested? Existing UT Author: Yuanjian Li <[email protected]> Closes apache#19941 from xuanyuanking/SPARK-22753.

This reverts commit 0ea2d8c.

## What changes were proposed in this pull request? Add a test suite to ensure all the [SSB (Star Schema Benchmark)](https://www.cs.umb.edu/~poneil/StarSchemaB.PDF) queries can be successfully analyzed, optimized and compiled without hitting the max iteration threshold. ## How was this patch tested? Added `SSBQuerySuite`. Author: Takeshi Yamamuro <[email protected]> Closes apache#19990 from maropu/SPARK-22800.

## What changes were proposed in this pull request? Basic tests for IfCoercion and CaseWhenCoercion ## How was this patch tested? N/A Author: Yuming Wang <[email protected]> Closes apache#19949 from wangyum/SPARK-22762.

…not available. ## What changes were proposed in this pull request? pyspark.ml.tests is missing a py4j import. I've added the import and fixed the test that uses it. This test was only failing when testing without Hive. ## How was this patch tested? Existing tests. Please review http://spark.apache.org/contributing.html before opening a pull request. Author: Bago Amirbekian <[email protected]> Closes apache#19997 from MrBago/fix-ImageReaderTest2.

## What changes were proposed in this pull request? `testthat` 2.0.0 has been released and AppVeyor has started to use it instead of 1.0.2. Since then, R tests have been failing in AppVeyor. See - https://ci.appveyor.com/project/ApacheSoftwareFoundation/spark/build/1967-master ``` Error in get(name, envir = asNamespace(pkg), inherits = FALSE) : object 'run_tests' not found Calls: ::: -> get ``` This seems to be because we rely on the internal `testthat:::run_tests` here: https://github.com/r-lib/testthat/blob/v1.0.2/R/test-package.R#L62-L75 https://github.com/apache/spark/blob/dc4c351837879dab26ad8fb471dc51c06832a9e4/R/pkg/tests/run-all.R#L49-L52 However, it seems to have been removed in 2.0.0. I tried a few other exposed APIs like `test_dir` but failed to make a good compatible fix. It seems better to pin the `testthat` version first to make the build pass. ## How was this patch tested? Manually tested and AppVeyor tests. Author: hyukjinkwon <[email protected]> Closes apache#20003 from HyukjinKwon/SPARK-22817.

## What changes were proposed in this pull request? Easy fix in the link. ## How was this patch tested? Tested manually Author: Mahmut CAVDAR <[email protected]> Closes apache#19996 from mcavdar/master.

## What changes were proposed in this pull request? Test coverage for `PromoteStrings` and `InConversion`. This is a sub-task of [SPARK-22722](https://issues.apache.org/jira/browse/SPARK-22722). ## How was this patch tested? N/A Author: Yuming Wang <[email protected]> Closes apache#20001 from wangyum/SPARK-22816.

…ith container ## What changes were proposed in this pull request? Changes discussed in apache#19946 (comment) docker -> container, since with CRI, we are not limited to running only docker images. ## How was this patch tested? Manual testing Author: foxish <[email protected]> Closes apache#19995 from foxish/make-docker-container.

This change restores the functionality that keeps a limited number of different types (jobs, stages, etc) depending on configuration, to avoid the store growing indefinitely over time. The feature is implemented by creating a new type (ElementTrackingStore) that wraps a KVStore and allows triggers to be set up for when elements of a certain type meet a certain threshold. Triggers don't need to necessarily only delete elements, but the current API is set up in a way that makes that use case easier. The new store also has a trigger for the "close" call, which makes it easier for listeners to register code for cleaning things up and flushing partial state to the store. The old configurations for cleaning up the stored elements from the core and SQL UIs are now active again, and the old unit tests are re-enabled. Author: Marcelo Vanzin <[email protected]> Closes apache#19751 from vanzin/SPARK-20653.
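The trigger mechanism described above can be sketched as a thin wrapper that counts elements per type and calls registered actions once a threshold is exceeded, plus a hook that runs on close. This is a plain-Python illustration with hypothetical names (`TrackingStore`, `add_trigger`, `on_close`), not the actual `ElementTrackingStore` API.

```python
class TrackingStore:
    """Wraps per-type element lists and fires triggers past a threshold."""

    def __init__(self):
        self._items = {}          # element type -> list of stored elements
        self._triggers = {}       # element type -> list of (threshold, action)
        self._close_actions = []  # callbacks to run when the store is closed

    def add_trigger(self, kind, threshold, action):
        self._triggers.setdefault(kind, []).append((threshold, action))

    def on_close(self, action):
        self._close_actions.append(action)

    def write(self, kind, element):
        items = self._items.setdefault(kind, [])
        items.append(element)
        for threshold, action in self._triggers.get(kind, []):
            if len(items) > threshold:
                action(items)  # the action decides what to do, e.g. delete

    def view(self, kind):
        return list(self._items.get(kind, []))

    def close(self):
        for action in self._close_actions:
            action()


MAX_JOBS = 3
store = TrackingStore()


def drop_oldest(items):
    # Keep only the newest MAX_JOBS elements.
    del items[: len(items) - MAX_JOBS]


store.add_trigger("job", MAX_JOBS, drop_oldest)
flushed = []
store.on_close(lambda: flushed.append("partial state flushed"))

for job_id in range(5):
    store.write("job", job_id)
store.close()
print(store.view("job"), flushed)
```

Passing the action as a callback keeps the store generic: deletion is the easy case, but a trigger could just as well aggregate or archive elements.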

This reverts commit e58f275.

@robert3005 please do b22c776 also

fysa @robert3005 pushed a commit to capture the failed mvn-install log

@mccheah @ash211 @onursatici are these valid?

It is correctly identifying a change in API of that class which was introduced in https://github.com/apache-spark-on-k8s/spark/pull/224/files#diff-ec2c1511cb40d6f7310e819e2b53b345R31 and brought into Palantir Spark. It's not in Apache yet: https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/cluster/CoarseGrainedClusterMessage.scala#L31 This landed back in July though -- 8ea3cf0 -- curious that MIMA is only just now flagging it. Maybe they started checking these classes for backcompat recently?

Maybe it's because we tagged a new version? I imagine we should add these to excludes

Yeah I think we should just exclude this class from mima checks -- the change was on the in-cluster message objects, which shouldn't actually have compatibility requirements since the versions are always in sync between driver and executors of a Spark cluster. I think that's an addition to

Actually the ignores seem wrong for this class, for every other message it's

I don't see those ignores in this branch -- where did

There's GenerateMIMAIgnore that produces list of package private things to ignore. This class should be covered by this list but somehow is treated differently when scanning classpath. I will poke over weekend.

So the fail is legit in that in 2.2.0 this object is a case object and it got converted to case class. Excludes are generated from previous version and not current so it's not ignored (as potentially new thing in api) - it's internal though so we should ignore. I am curious though why it wasn't flagging before - maybe previous version didn't get updated for mima checks?

d578a6d to 4ca2282

4ca2282 to b8380fd

Most recent errors look like some of our Parquet changes got reverted, or new tests got added that assume older parquet / bad timestamp format: SPARK-22673: InMemoryRelation should utilize existing stats of the plan to be cached - org.apache.spark.sql.execution.columnar.InMemoryColumnarQuerySuite

…is reused ## What changes were proposed in this pull request? With this change, we can easily identify the plan difference when subquery is reused. When the reuse is enabled, the plan looks like ``` == Physical Plan == CollectLimit 1 +- *(1) Project [(Subquery subquery240 + ReusedSubquery Subquery subquery240) AS (scalarsubquery() + scalarsubquery())#253] : :- Subquery subquery240 : : +- *(2) HashAggregate(keys=[], functions=[avg(cast(key#13 as bigint))], output=[avg(key)#250]) : : +- Exchange SinglePartition : : +- *(1) HashAggregate(keys=[], functions=[partial_avg(cast(key#13 as bigint))], output=[sum#256, count#257L]) : : +- *(1) SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData, true])).key AS key#13] : : +- Scan[obj#12] : +- ReusedSubquery Subquery subquery240 +- *(1) SerializeFromObject +- Scan[obj#12] ``` When the reuse is disabled, the plan looks like ``` == Physical Plan == CollectLimit 1 +- *(1) Project [(Subquery subquery286 + Subquery subquery287) AS (scalarsubquery() + scalarsubquery())#299] : :- Subquery subquery286 : : +- *(2) HashAggregate(keys=[], functions=[avg(cast(key#13 as bigint))], output=[avg(key)#296]) : : +- Exchange SinglePartition : : +- *(1) HashAggregate(keys=[], functions=[partial_avg(cast(key#13 as bigint))], output=[sum#302, count#303L]) : : +- *(1) SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData, true])).key AS key#13] : : +- Scan[obj#12] : +- Subquery subquery287 : +- *(2) HashAggregate(keys=[], functions=[avg(cast(key#13 as bigint))], output=[avg(key)#298]) : +- Exchange SinglePartition : +- *(1) HashAggregate(keys=[], functions=[partial_avg(cast(key#13 as bigint))], output=[sum#306, count#307L]) : +- *(1) SerializeFromObject [knownnotnull(assertnotnull(input[0, org.apache.spark.sql.test.SQLTestData$TestData, true])).key AS key#13] : +- Scan[obj#12] +- *(1) SerializeFromObject +- Scan[obj#12] ``` ## How was this patch 
tested? Modified the existing test. Closes apache#24258 from gatorsmile/followupSPARK-27279. Authored-by: gatorsmile <[email protected]> Signed-off-by: gatorsmile <[email protected]>

Fixes #298

To get all the 2.3.0 release goodies and correctness