-

Notifications

You must be signed in to change notification settings - Fork 7.1k

Migrate mnist dataset from np.frombuffer #4598

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

good point, somehow on the first format run - I've got a lot of changes. Seems like after rebasing it's all good now. So sorry for the inconvenience 🙈 |

|

No worries at all. I have similar issues all the time ;) |

|

Could you test this against the original data? IIRC, there was something about the byte order, which is why I implemented the same in the new prototype like this:

If this works with |

|

@pmeier We are happy to wait until you are back from PTO to allow for more thorough tests. Would you mind marking this with "Request changes" so that it's not accidentally merged? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

See #4598 (comment)

|

It seems to work just fine, but if we use it, we are getting an "old" warning back: This was only removed only recently in #4184. Maybe we can send a patch upstream to fix it, but the warning seems justified to me. |

|

According to the docs of

so I'm not sure we can avoid the warning if we switch to I would suggest to either:

|

|

Agreed about the options. I would go with option 1 as this aligns with our previous decision to copy instead of ignore the warning. |

Not sure what you mean here. If we go with option 1. we need to suppress the warning, because we can only copy ( |

|

@pmeier Apologies, I misread the previous comment. TBH I would prefer to merge the PR because it removes the extra code of juggling between numpy and pytorch. Concerning fixing the warning, we could either call |

Sorry to be pedantic here, but we need to suppress and clone. with suppress_read_only_warning():

data = torch.from_buffer(buffer, dtype=dtype).clone()This issues did not arise before, because |

|

Let's do that. @lc0 , would you mind updating the PR with something similar to what |

|

I might be missing something here but why can't we copy the buffer and make it mutable? Something like the following should work because the parsed = torch.frombuffer(bytearray(data), dtype=torch_type, offset=(4 * (nd + 1)))We do copy but we don't have to suppress warnings etc. |

|

Yes we can either copy the buffer (and not suppress the warning) or copy the end tensor (and suppress). They're all the same in terms of complexity / time overhead (i.e.: minimal), so we can go with whichever we want. But I'd go with the first one that we're all happy with :) |

|

I like |

|

I fixed accordingly with the suggestion from @datumbox and no more warnings :) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@lc0 The MNIST files are stored in big endian byte order, but torch has no notion of byte order AFAIK. Thus, if your system uses little endian, we will get wrong results if we just read the data with torch.frombuffer. In numpy you can specify the byte order by prefixing > before the type to indicate big endian. If you don't specify anything, it will use the system default as torch does.

By removing numpy, we need to take care of that manually. See #4651 for an example where I did this for a prototype implementation of MNIST datasets.

|

Hi @pmeier it's a good point. It feels that we are maintaining two implementations of dataset API. Does it make sense somehow abstract it away and do not implement/maintain it twice? :) Also, if this is broken currently, I guess it's subtle enough to be useful enough to cover with a test. What do you think? |

Yes, currently there are two API for datasets:

It is not currently broken. If you look in the dtype dict, you see that all dtypes with more than one byte, have a variant that is prefixed with Or do you mean that this PR is currently broken, but our tests are passing? In that case I agree. |

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM, thanks @lc0!

|

Hey @datumbox! You merged this PR, but no labels were added. The list of valid labels is available at https://github.com/pytorch/vision/blob/main/.github/process_commit.py |

Summary: * Migrate mnist dataset from np.frombuffer * Add a copy with bytearray for non-writable buffers * Add byte reversal for mnist Reviewed By: NicolasHug Differential Revision: D31916333 fbshipit-source-id: 9af4d8dc4fb7e11c63fcc82c87004314fbc2d225 Co-authored-by: Vasilis Vryniotis <[email protected]>

* Migrate mnist dataset from np.frombuffer * Add a copy with bytearray for non-writable buffers * Add byte reversal for mnist Co-authored-by: Vasilis Vryniotis <[email protected]>

Accordingly to pytorch/pytorch#59077 this PR migrates from np.frombuffer to pytorch.frombuffer and closes #4552

This PR is closing the last piece with mnist dataset.

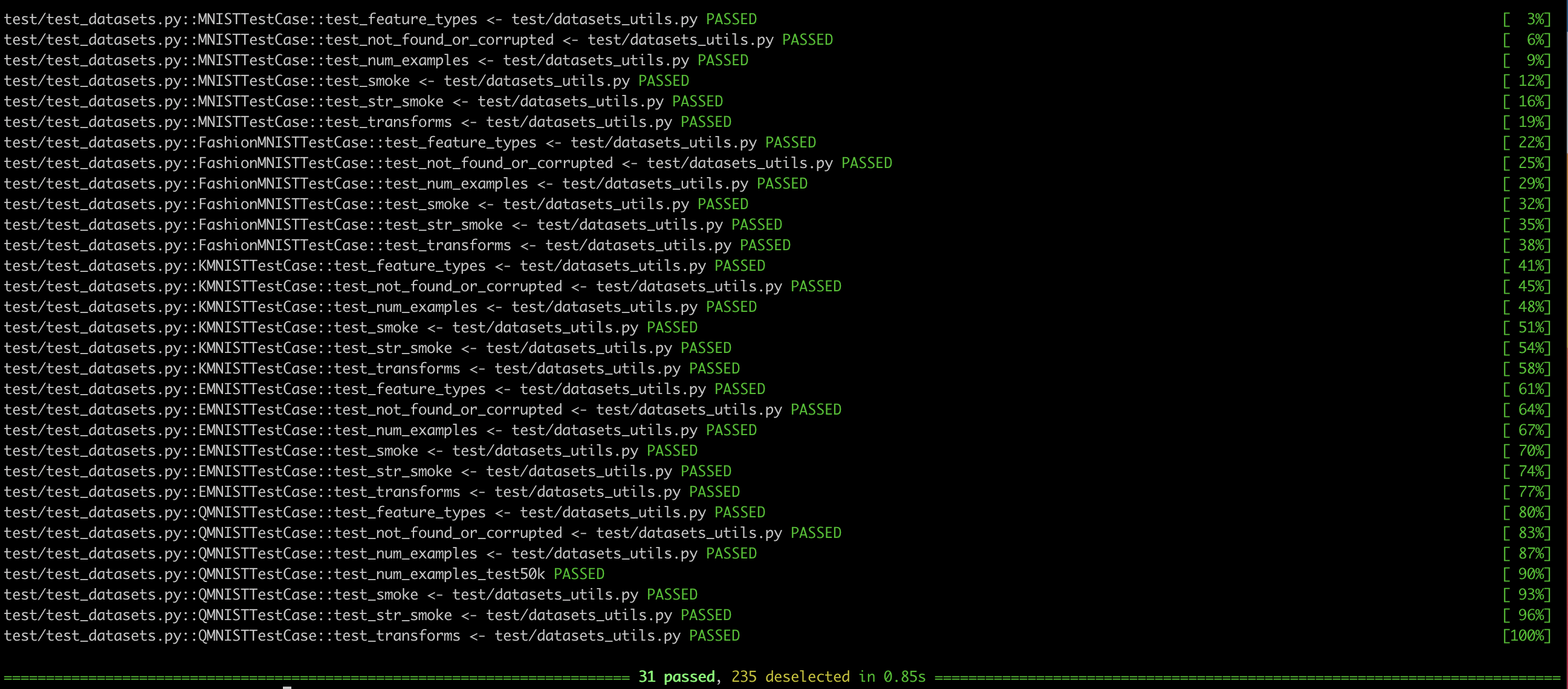

So far I tested all mnist tests:

cc @pmeier @datumbox