
[SPARK-35398][SQL] Simplify the way to get classes from ClassBodyEvaluator in CodeGenerator.updateAndGetCompilationStats method

#32536


Waiting for CI

Kubernetes integration test starting

Kubernetes integration test status failure

Test build #138505 has finished for PR 32536 at commit

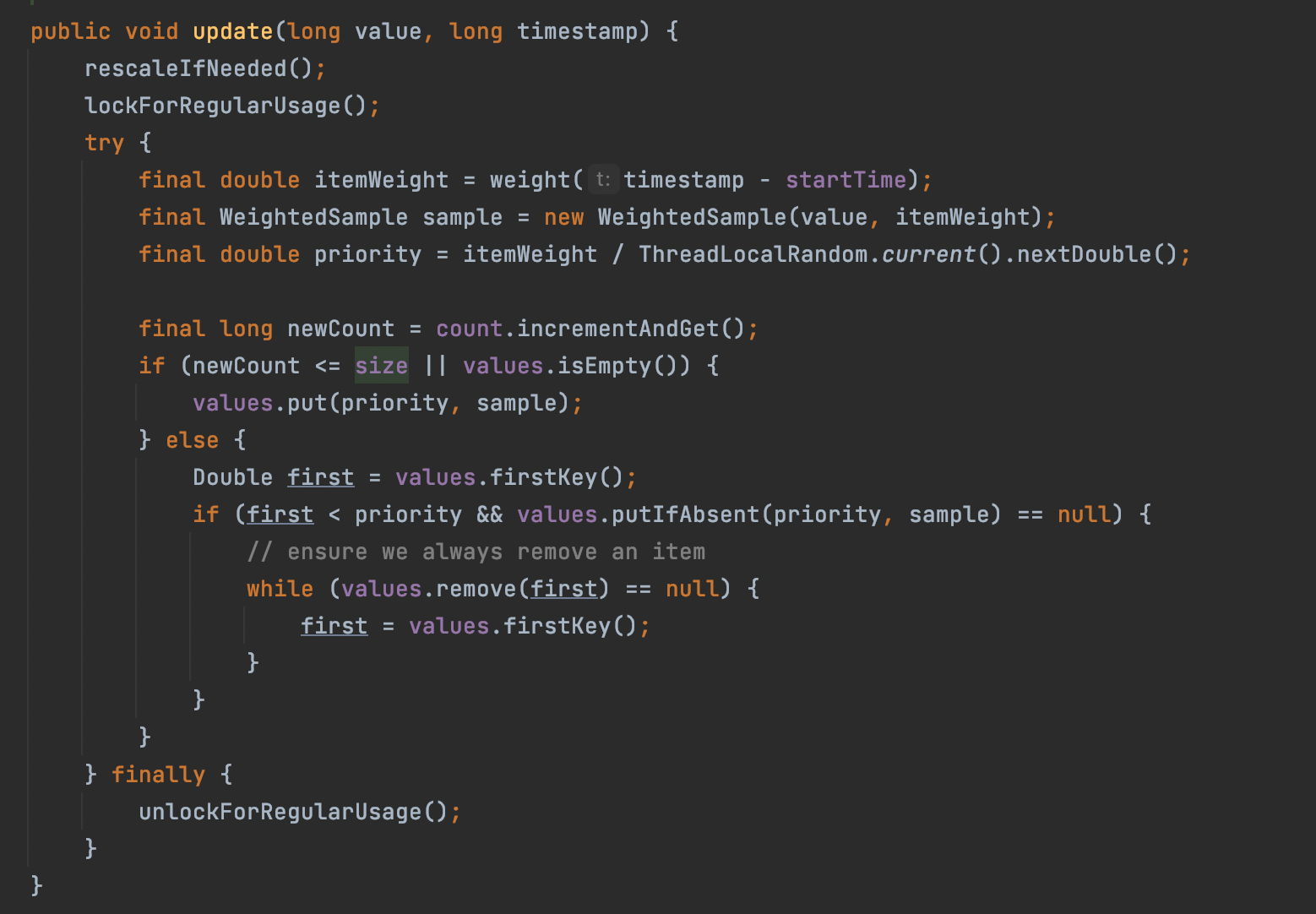

```diff
-      classesField.setAccessible(true)
-      classesField.get(loader).asInstanceOf[JavaMap[String, Array[Byte]]].asScala
-    }
+    val classes = evaluator.getBytecodes.asScala
```
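The change swaps reflective access to a private field for a call to a public accessor. The self-contained sketch below contrasts the two patterns. `FakeEvaluator` is a hypothetical stand-in (not janino's `ClassBodyEvaluator` and not Spark code); its field name `classes` and method `getBytecodes` only mirror the names in the diff. Both paths return the same map, but only the accessor is checked by the compiler: if a library renames the private field, the reflective version fails at runtime with `NoSuchFieldException`.

```scala
import java.util.{Collections, HashMap => JHashMap, Map => JavaMap}
import scala.jdk.CollectionConverters._

// Hypothetical stand-in for a compiler object that keeps generated class
// files in a private field (assumption: this is NOT the real janino class).
class FakeEvaluator {
  private val classes: JavaMap[String, Array[Byte]] = new JHashMap[String, Array[Byte]]()
  classes.put("GeneratedClass", Array[Byte](0xCA.toByte, 0xFE.toByte, 0xBA.toByte, 0xBE.toByte))

  // Public, read-only accessor, analogous in spirit to `getBytecodes` in the diff.
  def getBytecodes: JavaMap[String, Array[Byte]] = Collections.unmodifiableMap(classes)
}

object BytecodeAccess {
  // Old style: open up the private field via reflection. Depends on the
  // field's name and fails at runtime if the library changes it.
  def viaReflection(e: FakeEvaluator): Map[String, Array[Byte]] = {
    val classesField = classOf[FakeEvaluator].getDeclaredField("classes")
    classesField.setAccessible(true)
    classesField.get(e).asInstanceOf[JavaMap[String, Array[Byte]]].asScala.toMap
  }

  // New style: call the public accessor; no reflection needed.
  def viaAccessor(e: FakeEvaluator): Map[String, Array[Byte]] =
    e.getBytecodes.asScala.toMap

  // Size in bytes of each class file, the kind of value a
  // "generated class bytecode size" metric would record.
  def classSizes(classes: Map[String, Array[Byte]]): Map[String, Int] =
    classes.map { case (name, bytes) => name -> bytes.length }
}
```

Because both paths read the same underlying map, any size statistics derived from them should be identical, which is the property the reviewer asks about below.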


It seems fine, but have you checked whether this stat value stays the same before and after this PR?


Yes, I did some manual tests to check this.

For example, I added an `afterEach()` method to `CodeGenerationSuite` that records `CodegenMetrics.METRIC_GENERATED_CLASS_BYTECODE_SIZE.getSnapshot.getValues` after each case, and the stat values did not change before/after this PR.


In the same way, the stat values of `CodegenMetrics.METRIC_GENERATED_METHOD_BYTECODE_SIZE` have not changed before/after this PR.


@maropu If we want to verify this in code, maybe we can enhance the test case `metrics are recorded on compile` in `CodeGenerationSuite` as follows:

```scala
test("metrics are recorded on compile") {
  ...
  val metricGeneratedClassByteCodeSizeSnapshot =
    CodegenMetrics.METRIC_GENERATED_CLASS_BYTECODE_SIZE.getSnapshot.getValues
  val metricGeneratedMethodByteCodeSizeSnapshot =
    CodegenMetrics.METRIC_GENERATED_METHOD_BYTECODE_SIZE.getSnapshot.getValues
  GenerateOrdering.generate(Add(Literal(123), Literal(1)).asc :: Nil)
  ...
  // Make sure the stat content doesn't change before/after SPARK-35398
  assert(CodegenMetrics.METRIC_GENERATED_CLASS_BYTECODE_SIZE.getSnapshot.getValues
    .diff(metricGeneratedClassByteCodeSizeSnapshot)
    .sameElements(Array(740, 1293)))
  assert(CodegenMetrics.METRIC_GENERATED_METHOD_BYTECODE_SIZE.getSnapshot.getValues
    .diff(metricGeneratedMethodByteCodeSizeSnapshot)
    .sameElements(Array(5, 5, 6, 7, 10, 15, 46)))
}
```
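The assertions above rely on Scala's `diff`, which computes a multiset difference: each value in the baseline snapshot cancels one equal value in the later snapshot, so only the values recorded in between remain, in the order they appear in the later array. The standalone sketch below shows this on plain `Array[Long]` values standing in for histogram snapshots; `SnapshotDiff` is a hypothetical helper and the numbers are illustrative, not real Spark metrics.

```scala
object SnapshotDiff {
  // Values present in `after` but not in `before`, treating both arrays as
  // multisets: each element of `before` cancels the earliest equal element
  // of `after`, and the surviving elements keep their order.
  def newValues(before: Array[Long], after: Array[Long]): Array[Long] =
    after.diff(before)

  def main(args: Array[String]): Unit = {
    // Illustrative numbers only, not real codegen metrics.
    val before = Array(5L, 7L, 7L, 100L)
    val after = Array(5L, 5L, 7L, 7L, 7L, 100L, 300L)
    // One extra 5, one extra 7 and a new 300 were recorded in between.
    println(newValues(before, after).mkString(", ")) // prints "5, 7, 300"
  }
}
```

This way of isolating new values assumes the later snapshot still contains every value from the baseline and that the code under test is actually compiled between the two reads rather than served from a cache; if either assumption fails, the difference will not match the expected array.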

The new assertions pass both before and after this PR. However, if we upgrade janino or change the codegen of `Add`, we may need to update the expected values, because the size of the generated bytecode may change.

For example, the new `CodegenMetrics.METRIC_GENERATED_CLASS_BYTECODE_SIZE` values are `Array(740, 1293)` with janino 3.1.4 but `Array(687, 1036)` with janino 3.0.16, so I'm not sure whether we should add these assertions in this PR.


> @maropu If we want to do some code checking, maybe we can enhanced the case metrics are recorded on compile in CodeGenerationSuite as follows:

Ah, I see. Thanks for the explanation, @LuciferYang. Could you add a new test case for the assertion, with the prefix `SPARK-35398:`?


ok

Test build #138606 has finished for PR 32536 at commit

Kubernetes integration test starting

Kubernetes integration test status failure

Kubernetes integration test starting

Kubernetes integration test status failure

Test build #138611 has finished for PR 32536 at commit

The new test case fails when run together with other tests; let me check this.

I printed the size of … So this case always fails when run … and succeeds when run …

@maropu, for the above problem, can we add a case similar to the following to compare the result of

Ah, okay. Thank you for the investigation. If so, I think we don't need to add tests. Instead, could you update the PR description to describe how you checked that the stats values are the same (adding the code example above looks fine)?

Kubernetes integration test unable to build dist. exiting with code: 1

Test build #138682 has finished for PR 32536 at commit

Thank you, @LuciferYang. Merged to master.

thx all ~

…sion to v3.1.4"

### What changes were proposed in this pull request?
This PR reverts #32455 and its followup #32536, because the new janino version has a bug that is not fixed yet: janino-compiler/janino#148

### Why are the changes needed?
avoid regressions

### Does this PR introduce _any_ user-facing change?
no

### How was this patch tested?
existing tests

Closes #33302 from cloud-fan/revert.

Authored-by: Wenchen Fan <[email protected]>
Signed-off-by: Hyukjin Kwon <[email protected]>


### What changes were proposed in this pull request?
upgrade janino to 3.1.7 from 3.0.16

### Why are the changes needed?
- The proposed version contains bug fix in janino by maropu.
  - janino-compiler/janino#148
- contains `getBytecodes` method which can be used to simplify the way to get bytecodes from ClassBodyEvaluator in CodeGenerator#updateAndGetCompilationStats method. (by LuciferYang)
  - #32536

### Does this PR introduce _any_ user-facing change?
No

### How was this patch tested?
Existing UTs

Closes #37202 from singhpk234/upgrade/bump-janino.

Authored-by: Prashant Singh <[email protected]>
Signed-off-by: Sean Owen <[email protected]>


* ODP-2189 Upgrade snakeyaml version to 2.0 * [SPARK-35579][SQL] Bump janino to 3.1.7 ### What changes were proposed in this pull request? upgrade janino to 3.1.7 from 3.0.16 ### Why are the changes needed? - The proposed version contains bug fix in janino by maropu. - janino-compiler/janino#148 - contains `getBytecodes` method which can be used to simplify the way to get bytecodes from ClassBodyEvaluator in CodeGenerator#updateAndGetCompilationStats method. (by LuciferYang) - apache#32536 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing UTs Closes apache#37202 from singhpk234/upgrade/bump-janino. Authored-by: Prashant Singh <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 29ed337) * [SPARK-40633][BUILD] Upgrade janino to 3.1.9 ### What changes were proposed in this pull request? This pr aims upgrade janino from 3.1.7 to 3.1.9 ### Why are the changes needed? This version bring some improvement and bug fix, and janino 3.1.9 will no longer test Java 12, 15, 16 because these STS versions have been EOL: - janino-compiler/janino@v3.1.7...v3.1.9 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - Pass GitHub Actions - Manual test this pr with Scala 2.13, all test passed Closes apache#38075 from LuciferYang/SPARK-40633. 
Lead-authored-by: yangjie01 <[email protected]> Co-authored-by: YangJie <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 49e102b) * ODP-2167 Upgrade janino version from 3.1.9 to 3.1.10 * ODP-2190 Upgrade guava version to 32.1.3-jre * ODP-2193 Upgrade jettison version to 1.5.4 * ODP-2194 Upgrade wildfly-openssl version to 1.1.3 * ODP-2198 Upgrade gson version to 2.11.0 * ODP-2199 Upgrade kryo-shaded version to 4.0.3 * ODP-2200 Upgrade datanucleus-core and datanucleus-rdbms versions to 5.2.3 * ODP-2203 Upgrade Snappy and common-compress to 1.1.10.4 and 1.26.0 respectively * ODP-2198 Excluded gson from tink library * ODP-2205 Upgrade jdom2 to 2.0.6.1 * ODP-2198 Excluded gson from hive-exec * ODP-2175|SPARK-47018 Upgrade libthrift version and hive version * [SPARK-39688][K8S] `getReusablePVCs` should handle accounts with no PVC permission ### What changes were proposed in this pull request? This PR aims to handle `KubernetesClientException` in `getReusablePVCs` method to handle gracefully the cases where accounts has no PVC permission including `listing`. ### Why are the changes needed? To prevent a regression in Apache Spark 3.4. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs with the newly added test case. Closes apache#37095 from dongjoon-hyun/SPARK-39688. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 79f133b) * [SPARK-40458][K8S] Bump Kubernetes Client Version to 6.1.1 ### What changes were proposed in this pull request? Bump kubernetes-client version from 5.12.3 to 6.1.1 and clean up all the deprecations. ### Why are the changes needed? To keep up with kubernetes-client [changes](fabric8io/kubernetes-client@v5.12.3...v6.1.1). As this is an upgrade where the main version changed I have cleaned up all the deprecations. ### Does this PR introduce _any_ user-facing change? No. 
### How was this patch tested? #### Unit tests #### Manual tests for submit and application management Started an application in a non-default namespace (`bla`): ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit \ --master k8s://http://127.0.0.1:8001 \ --deploy-mode cluster \ --name spark-pi \ --class org.apache.spark.examples.SparkPi \ --conf spark.executor.instances=5 \ --conf spark.kubernetes.namespace=bla \ --conf spark.kubernetes.container.image=docker.io/kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D \ local:///opt/spark/examples/jars/spark-examples_2.12-3.4.0-SNAPSHOT.jar 200000 ``` Check that we cannot find it in the default namespace even with glob without the namespace definition: ``` ➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=default Context "minikube" modified. ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001. No applications found. ``` Then check we can find it by specifying the namespace: ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "bla:spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request for the status of submission bla:spark-pi-* in k8s://http://127.0.0.1:8001. 
Application status (driver): pod name: spark-pi-4c4e70837c86ae1a-driver namespace: bla labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d creation time: 2022-09-27T01:19:06Z service account name: default volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw node name: minikube start time: 2022-09-27T01:19:06Z phase: Running container status: container name: spark-kubernetes-driver container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D container state: running container started at: 2022-09-27T01:19:07Z ``` Changing the namespace to `bla` with `kubectl`: ``` ➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=bla Context "minikube" modified. ``` Checking we can find it without specifying the namespace (and glob): ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001. 
Application status (driver): pod name: spark-pi-4c4e70837c86ae1a-driver namespace: bla labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d creation time: 2022-09-27T01:19:06Z service account name: default volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw node name: minikube start time: 2022-09-27T01:19:06Z phase: Running container status: container name: spark-kubernetes-driver container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D container state: running container started at: 2022-09-27T01:19:07Z ``` Killing the app: ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --kill "spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request to kill submission spark-pi-* in k8s://http://127.0.0.1:8001. Grace period in secs: not set. Deleting driver pod: spark-pi-4c4e70837c86ae1a-driver. ``` Closes apache#37990 from attilapiros/SPARK-40458. Authored-by: attilapiros <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit fa88651) * [SPARK-36462][K8S] Add the ability to selectively disable watching or polling ### What changes were proposed in this pull request? Add the ability to selectively disable watching or polling Updated version of apache#34264 ### Why are the changes needed? Watching or polling for pod status on Kubernetes can place additional load on etcd, with a large number of executors and large number of jobs this can have negative impacts and executors register themselves with the driver under normal operations anyways. ### Does this PR introduce _any_ user-facing change? Two new config flags. ### How was this patch tested? New unit tests + manually tested a forked version of this on an internal cluster with both watching and polling disabled. 
Closes apache#36433 from holdenk/SPARK-36462-allow-spark-on-kube-to-operate-without-watchers. Lead-authored-by: Holden Karau <[email protected]> Co-authored-by: Holden Karau <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 5bffb98) * ODP-2201|SPARK-48867 Upgrade okhttp to 4.12.0, okio to 3.9.0 and esdk-obs-java to 3.24.3 * [SPARK-41958][CORE][3.3] Disallow arbitrary custom classpath with proxy user in cluster mode Backporting fix for SPARK-41958 to 3.3 branch from apache#39474 Below description from original PR. -------------------------- ### What changes were proposed in this pull request? This PR proposes to disallow arbitrary custom classpath with proxy user in cluster mode by default. ### Why are the changes needed? To avoid arbitrary classpath in spark cluster. ### Does this PR introduce _any_ user-facing change? Yes. User should reenable this feature by `spark.submit.proxyUser.allowCustomClasspathInClusterMode`. ### How was this patch tested? Manually tested. Closes apache#39474 from Ngone51/dev. Lead-authored-by: Peter Toth <peter.tothgmail.com> Co-authored-by: Yi Wu <yi.wudatabricks.com> Signed-off-by: Hyukjin Kwon <gurwls223apache.org> (cherry picked from commit 909da96) ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? Closes apache#41428 from degant/spark-41958-3.3. 
Lead-authored-by: Degant Puri <[email protected]> Co-authored-by: Peter Toth <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> * ODP-2049 Changing Spark3 version from 3.3.3.3.2.3.2-2 to 3.3.3.3.2.3.2-201 * ODP-2049 Changing libthrift version to 0.16 in deps files * ODP-2049 Changing derby version to 10.14.3.0 --------- Signed-off-by: Dongjoon Hyun <[email protected]> Co-authored-by: Prashant Singh <[email protected]> Co-authored-by: yangjie01 <[email protected]> Co-authored-by: Dongjoon Hyun <[email protected]> Co-authored-by: attilapiros <[email protected]> Co-authored-by: Holden Karau <[email protected]> Co-authored-by: Degant Puri <[email protected]> Co-authored-by: Peter Toth <[email protected]>

* ODP-2189 Upgrade snakeyaml version to 2.0 * [SPARK-35579][SQL] Bump janino to 3.1.7 ### What changes were proposed in this pull request? upgrade janino to 3.1.7 from 3.0.16 ### Why are the changes needed? - The proposed version contains bug fix in janino by maropu. - janino-compiler/janino#148 - contains `getBytecodes` method which can be used to simplify the way to get bytecodes from ClassBodyEvaluator in CodeGenerator#updateAndGetCompilationStats method. (by LuciferYang) - apache#32536 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing UTs Closes apache#37202 from singhpk234/upgrade/bump-janino. Authored-by: Prashant Singh <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 29ed337) * [SPARK-40633][BUILD] Upgrade janino to 3.1.9 ### What changes were proposed in this pull request? This pr aims upgrade janino from 3.1.7 to 3.1.9 ### Why are the changes needed? This version bring some improvement and bug fix, and janino 3.1.9 will no longer test Java 12, 15, 16 because these STS versions have been EOL: - janino-compiler/janino@v3.1.7...v3.1.9 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - Pass GitHub Actions - Manual test this pr with Scala 2.13, all test passed Closes apache#38075 from LuciferYang/SPARK-40633. 
Lead-authored-by: yangjie01 <[email protected]> Co-authored-by: YangJie <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 49e102b) * ODP-2167 Upgrade janino version from 3.1.9 to 3.1.10 * ODP-2190 Upgrade guava version to 32.1.3-jre * ODP-2193 Upgrade jettison version to 1.5.4 * ODP-2194 Upgrade wildfly-openssl version to 1.1.3 * ODP-2198 Upgrade gson version to 2.11.0 * ODP-2199 Upgrade kryo-shaded version to 4.0.3 * ODP-2200 Upgrade datanucleus-core and datanucleus-rdbms versions to 5.2.3 * ODP-2203 Upgrade Snappy and common-compress to 1.1.10.4 and 1.26.0 respectively * ODP-2198 Excluded gson from tink library * ODP-2205 Upgrade jdom2 to 2.0.6.1 * ODP-2198 Excluded gson from hive-exec * ODP-2175|SPARK-47018 Upgrade libthrift version and hive version * [SPARK-39688][K8S] `getReusablePVCs` should handle accounts with no PVC permission ### What changes were proposed in this pull request? This PR aims to handle `KubernetesClientException` in `getReusablePVCs` method to handle gracefully the cases where accounts has no PVC permission including `listing`. ### Why are the changes needed? To prevent a regression in Apache Spark 3.4. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs with the newly added test case. Closes apache#37095 from dongjoon-hyun/SPARK-39688. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 79f133b) * [SPARK-40458][K8S] Bump Kubernetes Client Version to 6.1.1 ### What changes were proposed in this pull request? Bump kubernetes-client version from 5.12.3 to 6.1.1 and clean up all the deprecations. ### Why are the changes needed? To keep up with kubernetes-client [changes](fabric8io/kubernetes-client@v5.12.3...v6.1.1). As this is an upgrade where the main version changed I have cleaned up all the deprecations. ### Does this PR introduce _any_ user-facing change? No. 
### How was this patch tested? #### Unit tests #### Manual tests for submit and application management Started an application in a non-default namespace (`bla`): ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit \ --master k8s://http://127.0.0.1:8001 \ --deploy-mode cluster \ --name spark-pi \ --class org.apache.spark.examples.SparkPi \ --conf spark.executor.instances=5 \ --conf spark.kubernetes.namespace=bla \ --conf spark.kubernetes.container.image=docker.io/kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D \ local:///opt/spark/examples/jars/spark-examples_2.12-3.4.0-SNAPSHOT.jar 200000 ``` Check that we cannot find it in the default namespace even with glob without the namespace definition: ``` ➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=default Context "minikube" modified. ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001. No applications found. ``` Then check we can find it by specifying the namespace: ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "bla:spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request for the status of submission bla:spark-pi-* in k8s://http://127.0.0.1:8001. 
Application status (driver): pod name: spark-pi-4c4e70837c86ae1a-driver namespace: bla labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d creation time: 2022-09-27T01:19:06Z service account name: default volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw node name: minikube start time: 2022-09-27T01:19:06Z phase: Running container status: container name: spark-kubernetes-driver container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D container state: running container started at: 2022-09-27T01:19:07Z ``` Changing the namespace to `bla` with `kubectl`: ``` ➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=bla Context "minikube" modified. ``` Checking we can find it without specifying the namespace (and glob): ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001. 
Application status (driver): pod name: spark-pi-4c4e70837c86ae1a-driver namespace: bla labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d creation time: 2022-09-27T01:19:06Z service account name: default volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw node name: minikube start time: 2022-09-27T01:19:06Z phase: Running container status: container name: spark-kubernetes-driver container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D container state: running container started at: 2022-09-27T01:19:07Z ``` Killing the app: ``` ➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --kill "spark-pi-*" --master k8s://http://127.0.0.1:8001 Submitting a request to kill submission spark-pi-* in k8s://http://127.0.0.1:8001. Grace period in secs: not set. Deleting driver pod: spark-pi-4c4e70837c86ae1a-driver. ``` Closes apache#37990 from attilapiros/SPARK-40458. Authored-by: attilapiros <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit fa88651) * [SPARK-36462][K8S] Add the ability to selectively disable watching or polling ### What changes were proposed in this pull request? Add the ability to selectively disable watching or polling Updated version of apache#34264 ### Why are the changes needed? Watching or polling for pod status on Kubernetes can place additional load on etcd, with a large number of executors and large number of jobs this can have negative impacts and executors register themselves with the driver under normal operations anyways. ### Does this PR introduce _any_ user-facing change? Two new config flags. ### How was this patch tested? New unit tests + manually tested a forked version of this on an internal cluster with both watching and polling disabled. 
Closes apache#36433 from holdenk/SPARK-36462-allow-spark-on-kube-to-operate-without-watchers. Lead-authored-by: Holden Karau <[email protected]> Co-authored-by: Holden Karau <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 5bffb98) * ODP-2201|SPARK-48867 Upgrade okhttp to 4.12.0, okio to 3.9.0 and esdk-obs-java to 3.24.3 * [SPARK-41958][CORE][3.3] Disallow arbitrary custom classpath with proxy user in cluster mode Backporting fix for SPARK-41958 to 3.3 branch from apache#39474 Below description from original PR. -------------------------- ### What changes were proposed in this pull request? This PR proposes to disallow arbitrary custom classpath with proxy user in cluster mode by default. ### Why are the changes needed? To avoid arbitrary classpath in spark cluster. ### Does this PR introduce _any_ user-facing change? Yes. User should reenable this feature by `spark.submit.proxyUser.allowCustomClasspathInClusterMode`. ### How was this patch tested? Manually tested. Closes apache#39474 from Ngone51/dev. Lead-authored-by: Peter Toth <peter.tothgmail.com> Co-authored-by: Yi Wu <yi.wudatabricks.com> Signed-off-by: Hyukjin Kwon <gurwls223apache.org> (cherry picked from commit 909da96) ### What changes were proposed in this pull request? ### Why are the changes needed? ### Does this PR introduce _any_ user-facing change? ### How was this patch tested? Closes apache#41428 from degant/spark-41958-3.3. 
Lead-authored-by: Degant Puri <[email protected]> Co-authored-by: Peter Toth <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> * ODP-2049 Changing Spark3 version from 3.3.3.3.2.3.2-2 to 3.3.3.3.2.3.2-201 * ODP-2049 Changing libthrift version to 0.16 in deps files * ODP-2049 Changing derby version to 10.14.3.0 --------- Signed-off-by: Dongjoon Hyun <[email protected]> Co-authored-by: Prashant Singh <[email protected]> Co-authored-by: yangjie01 <[email protected]> Co-authored-by: Dongjoon Hyun <[email protected]> Co-authored-by: attilapiros <[email protected]> Co-authored-by: Holden Karau <[email protected]> Co-authored-by: Degant Puri <[email protected]> Co-authored-by: Peter Toth <[email protected]>

* ODP-2189 Upgrade snakeyaml version to 2.0 * [SPARK-35579][SQL] Bump janino to 3.1.7 ### What changes were proposed in this pull request? upgrade janino to 3.1.7 from 3.0.16 ### Why are the changes needed? - The proposed version contains bug fix in janino by maropu. - janino-compiler/janino#148 - contains `getBytecodes` method which can be used to simplify the way to get bytecodes from ClassBodyEvaluator in CodeGenerator#updateAndGetCompilationStats method. (by LuciferYang) - apache#32536 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing UTs Closes apache#37202 from singhpk234/upgrade/bump-janino. Authored-by: Prashant Singh <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 29ed337) * [SPARK-40633][BUILD] Upgrade janino to 3.1.9 ### What changes were proposed in this pull request? This pr aims upgrade janino from 3.1.7 to 3.1.9 ### Why are the changes needed? This version bring some improvement and bug fix, and janino 3.1.9 will no longer test Java 12, 15, 16 because these STS versions have been EOL: - janino-compiler/janino@v3.1.7...v3.1.9 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - Pass GitHub Actions - Manual test this pr with Scala 2.13, all test passed Closes apache#38075 from LuciferYang/SPARK-40633. 
Lead-authored-by: yangjie01 <[email protected]> Co-authored-by: YangJie <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 49e102b) * ODP-2167 Upgrade janino version from 3.1.9 to 3.1.10 * ODP-2190 Upgrade guava version to 32.1.3-jre * ODP-2193 Upgrade jettison version to 1.5.4 * ODP-2194 Upgrade wildfly-openssl version to 1.1.3 * ODP-2198 Upgrade gson version to 2.11.0 * ODP-2199 Upgrade kryo-shaded version to 4.0.3 * ODP-2200 Upgrade datanucleus-core and datanucleus-rdbms versions to 5.2.3 * ODP-2203 Upgrade Snappy and common-compress to 1.1.10.4 and 1.26.0 respectively * ODP-2198 Excluded gson from tink library * ODP-2205 Upgrade jdom2 to 2.0.6.1 * ODP-2198 Excluded gson from hive-exec * ODP-2175|SPARK-47018 Upgrade libthrift version and hive version * [SPARK-39688][K8S] `getReusablePVCs` should handle accounts with no PVC permission ### What changes were proposed in this pull request? This PR aims to handle `KubernetesClientException` in `getReusablePVCs` method to handle gracefully the cases where accounts has no PVC permission including `listing`. ### Why are the changes needed? To prevent a regression in Apache Spark 3.4. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs with the newly added test case. Closes apache#37095 from dongjoon-hyun/SPARK-39688. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 79f133b) * [SPARK-40458][K8S] Bump Kubernetes Client Version to 6.1.1 ### What changes were proposed in this pull request? Bump kubernetes-client version from 5.12.3 to 6.1.1 and clean up all the deprecations. ### Why are the changes needed? To keep up with kubernetes-client [changes](fabric8io/kubernetes-client@v5.12.3...v6.1.1). As this is an upgrade where the main version changed I have cleaned up all the deprecations. ### Does this PR introduce _any_ user-facing change? No. 
### How was this patch tested?
#### Unit tests

#### Manual tests for submit and application management

Started an application in a non-default namespace (`bla`):
```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit \
    --master k8s://http://127.0.0.1:8001 \
    --deploy-mode cluster \
    --name spark-pi \
    --class org.apache.spark.examples.SparkPi \
    --conf spark.executor.instances=5 \
    --conf spark.kubernetes.namespace=bla \
    --conf spark.kubernetes.container.image=docker.io/kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D \
    local:///opt/spark/examples/jars/spark-examples_2.12-3.4.0-SNAPSHOT.jar 200000
```

Check that we cannot find it from the default namespace, even with a glob, when no namespace is specified:
```
➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=default
Context "minikube" modified.
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001.
No applications found.
```

Then check that we can find it by specifying the namespace:
```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "bla:spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request for the status of submission bla:spark-pi-* in k8s://http://127.0.0.1:8001.
Application status (driver):
  pod name: spark-pi-4c4e70837c86ae1a-driver
  namespace: bla
  labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT
  pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d
  creation time: 2022-09-27T01:19:06Z
  service account name: default
  volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw
  node name: minikube
  start time: 2022-09-27T01:19:06Z
  phase: Running
  container status:
    container name: spark-kubernetes-driver
    container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D
    container state: running
    container started at: 2022-09-27T01:19:07Z
```

Changing the namespace to `bla` with `kubectl`:
```
➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=bla
Context "minikube" modified.
```

Checking that we can find it without specifying the namespace (still using a glob):
```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001.
Application status (driver):
  pod name: spark-pi-4c4e70837c86ae1a-driver
  namespace: bla
  labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT
  pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d
  creation time: 2022-09-27T01:19:06Z
  service account name: default
  volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw
  node name: minikube
  start time: 2022-09-27T01:19:06Z
  phase: Running
  container status:
    container name: spark-kubernetes-driver
    container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D
    container state: running
    container started at: 2022-09-27T01:19:07Z
```

Killing the app:
```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --kill "spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request to kill submission spark-pi-* in k8s://http://127.0.0.1:8001.
Grace period in secs: not set.
Deleting driver pod: spark-pi-4c4e70837c86ae1a-driver.
```

Closes apache#37990 from attilapiros/SPARK-40458.

Authored-by: attilapiros <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
(cherry picked from commit fa88651)

* [SPARK-36462][K8S] Add the ability to selectively disable watching or polling

### What changes were proposed in this pull request?
Add the ability to selectively disable watching or polling.

Updated version of apache#34264.

### Why are the changes needed?
Watching or polling for pod status on Kubernetes can place additional load on etcd. With a large number of executors and a large number of jobs, this can have a negative impact. Under normal operation, executors register themselves with the driver anyway.

### Does this PR introduce _any_ user-facing change?
Two new config flags.

### How was this patch tested?
New unit tests. A forked version of this change was also tested manually on an internal cluster with both watching and polling disabled.
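The SPARK-36462 message says only that the change adds "two new config flags" to turn off watching and polling independently. It also notes that executors register with the driver anyway. The minimal Python sketch below illustrates that selection logic. The dataclass and its field names `watch_enabled` and `polling_enabled` are hypothetical placeholders, not Spark's real configuration keys.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodStatusConfig:
    # Hypothetical stand-ins for the two new flags; the real Spark key names
    # are not given in this commit message.
    watch_enabled: bool = True
    polling_enabled: bool = True


def pod_status_sources(cfg: PodStatusConfig) -> list:
    """Return the mechanisms the driver would use to learn about executor pods."""
    # Executors register with the driver during normal operation regardless of the flags.
    sources = ["executor-registration"]
    if cfg.watch_enabled:
        sources.append("watch")  # API server pushes pod changes to the driver
    if cfg.polling_enabled:
        sources.append("poll")  # driver periodically lists pods
    return sources
```

With both flags off, only executor registration remains, so the sketch sends no pod-status requests to the API server (and thus etcd).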
Closes apache#36433 from holdenk/SPARK-36462-allow-spark-on-kube-to-operate-without-watchers.

Lead-authored-by: Holden Karau <[email protected]>
Co-authored-by: Holden Karau <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
(cherry picked from commit 5bffb98)

* ODP-2201|SPARK-48867 Upgrade okhttp to 4.12.0, okio to 3.9.0 and esdk-obs-java to 3.24.3

* [SPARK-41958][CORE][3.3] Disallow arbitrary custom classpath with proxy user in cluster mode

Backport of the SPARK-41958 fix to the 3.3 branch from apache#39474.

The description below is from the original PR.

--------------------------

### What changes were proposed in this pull request?
This PR proposes to disallow arbitrary custom classpaths with a proxy user in cluster mode by default.

### Why are the changes needed?
To avoid arbitrary classpaths in a Spark cluster.

### Does this PR introduce _any_ user-facing change?
Yes. Users can re-enable this feature with `spark.submit.proxyUser.allowCustomClasspathInClusterMode`.

### How was this patch tested?
Manually tested.

Closes apache#39474 from Ngone51/dev.

Lead-authored-by: Peter Toth <peter.toth@gmail.com>
Co-authored-by: Yi Wu <yi.wu@databricks.com>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
(cherry picked from commit 909da96)

Closes apache#41428 from degant/spark-41958-3.3.
Lead-authored-by: Degant Puri <[email protected]>
Co-authored-by: Peter Toth <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>

* ODP-2049 Changing Spark3 version from 3.3.3.3.2.3.2-2 to 3.3.3.3.2.3.2-201
* ODP-2049 Changing libthrift version to 0.16 in deps files
* ODP-2049 Changing derby version to 10.14.3.0

---------

Signed-off-by: Dongjoon Hyun <[email protected]>
Co-authored-by: Prashant Singh <[email protected]>
Co-authored-by: yangjie01 <[email protected]>
Co-authored-by: Dongjoon Hyun <[email protected]>
Co-authored-by: attilapiros <[email protected]>
Co-authored-by: Holden Karau <[email protected]>
Co-authored-by: Degant Puri <[email protected]>
Co-authored-by: Peter Toth <[email protected]>
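The SPARK-35579 entry notes that janino's `getBytecodes` can simplify how `CodeGenerator#updateAndGetCompilationStats` collects bytecodes. In apache#32536, its result replaces a reflective read of a private `classes` field and is treated as a map from generated class name to class-file bytes. Once that map is available, per-class statistics can be computed from it directly.

The Python sketch below illustrates only that idea; it is not Spark's Scala code. It assumes the map is a plain `dict` and reads only the fixed class-file header defined by the JVM specification.

```python
import struct

# Every JVM class file starts with this 4-byte magic number.
CLASS_MAGIC = 0xCAFEBABE


def bytecode_stats(bytecodes):
    """Summarize a {class name: class-file bytes} map.

    Returns the size in bytes of each class and the largest
    constant_pool_count found in the class-file headers.
    """
    sizes = {}
    max_pool_count = 0
    for name, data in bytecodes.items():
        if len(data) < 10:
            raise ValueError(f"{name}: too short to be a class file")
        # Header: u4 magic, u2 minor_version, u2 major_version, u2 constant_pool_count
        magic, _minor, _major, pool_count = struct.unpack(">IHHH", data[:10])
        if magic != CLASS_MAGIC:
            raise ValueError(f"{name}: bad magic number {magic:#x}")
        sizes[name] = len(data)
        max_pool_count = max(max_pool_count, pool_count)
    return {"class_sizes": sizes, "max_constant_pool_count": max_pool_count}
```

For example, a 10-byte header packed with `struct.pack(">IHHH", CLASS_MAGIC, 0, 52, 17)` plus 6 padding bytes is reported as a 16-byte class with a `constant_pool_count` of 17. Per-method sizes would require walking the full class file, so the sketch leaves them out.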
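SPARK-39688 makes `getReusablePVCs` tolerate accounts that lack PVC permissions (including listing) by handling `KubernetesClientException`. The underlying pattern is to treat a failed listing as "nothing to reuse" rather than as a fatal error.

The Python sketch below shows that pattern. `KubernetesClientError`, `list_pvcs`, and `pvcs_in_use` are hypothetical names. Spark's actual Scala method may apply additional filters that are not shown here.

```python
import logging


class KubernetesClientError(Exception):
    """Hypothetical stand-in for fabric8's KubernetesClientException."""


def get_reusable_pvcs(list_pvcs, pvcs_in_use, log=logging.getLogger(__name__)):
    """Return PVC names not currently used by any executor.

    `list_pvcs` is a zero-argument callable that queries the API server.
    If the account is not allowed to list PVCs, fall back to reusing nothing.
    """
    try:
        all_pvcs = list_pvcs()
    except KubernetesClientError as err:
        log.info("Cannot list PVCs, so no PVC will be reused: %s", err)
        return []
    return [name for name in all_pvcs if name not in pvcs_in_use]
```

Returning an empty list keeps executor allocation working: executors simply get new PVCs instead of reusing old ones.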
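The SPARK-40458 manual tests run `spark-submit --status` and `--kill` with submission IDs of the form `namespace:glob`. When the namespace is omitted, the current kubectl context's namespace is used.

The Python sketch below reproduces only that lookup rule against an in-memory list of `(namespace, pod name)` pairs. It assumes the glob is matched against the driver pod name, as the transcripts suggest. It is not how Spark queries the Kubernetes API.

```python
from fnmatch import fnmatchcase


def split_submission_id(submission_id, current_namespace):
    """Split "namespace:pattern"; without a namespace, use the current one."""
    namespace, sep, pattern = submission_id.partition(":")
    if not sep:
        return current_namespace, submission_id
    return namespace, pattern


def find_driver_pods(submission_id, pods, current_namespace):
    """Return names of pods in the target namespace whose name matches the glob.

    `pods` is an iterable of (namespace, pod_name) pairs.
    """
    namespace, pattern = split_submission_id(submission_id, current_namespace)
    return [name for ns, name in pods if ns == namespace and fnmatchcase(name, pattern)]
```

With the driver pod from the transcripts in namespace `bla`, these calls mirror the three `--status` checks:
- `find_driver_pods("spark-pi-*", pods, "default")` finds nothing.
- `"bla:spark-pi-*"` finds the pod from any context.
- `"spark-pi-*"` finds it once the current namespace is `bla`.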
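SPARK-41958 disables custom classpaths by default when a proxy user submits in cluster mode. `spark.submit.proxyUser.allowCustomClasspathInClusterMode` is the switch to opt back in.

The Python sketch below illustrates that gating rule. The function name and the two classpath keys it filters are illustrative assumptions; they do not describe exactly which settings Spark's `SparkSubmit` ignores.

```python
ALLOW_KEY = "spark.submit.proxyUser.allowCustomClasspathInClusterMode"

# Assumed examples of classpath settings; the commit message does not list the affected keys.
CLASSPATH_KEYS = ("spark.driver.extraClassPath", "spark.executor.extraClassPath")


def effective_conf(conf, proxy_user, deploy_mode):
    """Drop custom classpath settings for proxy-user, cluster-mode submissions
    unless the opt-in flag is set (it is treated as false when absent)."""
    allowed = conf.get(ALLOW_KEY, "false").strip().lower() == "true"
    if proxy_user is not None and deploy_mode == "cluster" and not allowed:
        return {k: v for k, v in conf.items() if k not in CLASSPATH_KEYS}
    return dict(conf)
```

Client-mode submissions and submissions without a proxy user pass through unchanged.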

* ODP-2189 Upgrade snakeyaml version to 2.0 * [SPARK-35579][SQL] Bump janino to 3.1.7 ### What changes were proposed in this pull request? upgrade janino to 3.1.7 from 3.0.16 ### Why are the changes needed? - The proposed version contains bug fix in janino by maropu. - janino-compiler/janino#148 - contains `getBytecodes` method which can be used to simplify the way to get bytecodes from ClassBodyEvaluator in CodeGenerator#updateAndGetCompilationStats method. (by LuciferYang) - apache#32536 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing UTs Closes apache#37202 from singhpk234/upgrade/bump-janino. Authored-by: Prashant Singh <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 29ed337) * [SPARK-40633][BUILD] Upgrade janino to 3.1.9 ### What changes were proposed in this pull request? This pr aims upgrade janino from 3.1.7 to 3.1.9 ### Why are the changes needed? This version bring some improvement and bug fix, and janino 3.1.9 will no longer test Java 12, 15, 16 because these STS versions have been EOL: - janino-compiler/janino@v3.1.7...v3.1.9 ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? - Pass GitHub Actions - Manual test this pr with Scala 2.13, all test passed Closes apache#38075 from LuciferYang/SPARK-40633. 
Lead-authored-by: yangjie01 <[email protected]> Co-authored-by: YangJie <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 49e102b) * ODP-2167 Upgrade janino version from 3.1.9 to 3.1.10 * ODP-2190 Upgrade guava version to 32.1.3-jre * ODP-2193 Upgrade jettison version to 1.5.4 * ODP-2194 Upgrade wildfly-openssl version to 1.1.3 * ODP-2198 Upgrade gson version to 2.11.0 * ODP-2199 Upgrade kryo-shaded version to 4.0.3 * ODP-2200 Upgrade datanucleus-core and datanucleus-rdbms versions to 5.2.3 * ODP-2203 Upgrade Snappy and common-compress to 1.1.10.4 and 1.26.0 respectively * ODP-2198 Excluded gson from tink library * ODP-2205 Upgrade jdom2 to 2.0.6.1 * ODP-2198 Excluded gson from hive-exec * ODP-2175|SPARK-47018 Upgrade libthrift version and hive version * [SPARK-39688][K8S] `getReusablePVCs` should handle accounts with no PVC permission ### What changes were proposed in this pull request? This PR aims to handle `KubernetesClientException` in `getReusablePVCs` method to handle gracefully the cases where accounts has no PVC permission including `listing`. ### Why are the changes needed? To prevent a regression in Apache Spark 3.4. ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? Pass the CIs with the newly added test case. Closes apache#37095 from dongjoon-hyun/SPARK-39688. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 79f133b) * [SPARK-40458][K8S] Bump Kubernetes Client Version to 6.1.1 ### What changes were proposed in this pull request? Bump kubernetes-client version from 5.12.3 to 6.1.1 and clean up all the deprecations. ### Why are the changes needed? To keep up with kubernetes-client [changes](fabric8io/kubernetes-client@v5.12.3...v6.1.1). As this is an upgrade where the main version changed I have cleaned up all the deprecations. ### Does this PR introduce _any_ user-facing change? No. 
### How was this patch tested?

#### Unit tests

#### Manual tests for submit and application management

Started an application in a non-default namespace (`bla`):

```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit \
    --master k8s://http://127.0.0.1:8001 \
    --deploy-mode cluster \
    --name spark-pi \
    --class org.apache.spark.examples.SparkPi \
    --conf spark.executor.instances=5 \
    --conf spark.kubernetes.namespace=bla \
    --conf spark.kubernetes.container.image=docker.io/kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D \
    local:///opt/spark/examples/jars/spark-examples_2.12-3.4.0-SNAPSHOT.jar 200000
```

Check that we cannot find it from the default namespace, even with a glob, when no namespace is given:

```
➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=default
Context "minikube" modified.
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001.
No applications found.
```

Then check that we can find it by specifying the namespace:

```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "bla:spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request for the status of submission bla:spark-pi-* in k8s://http://127.0.0.1:8001.
Application status (driver):
    pod name: spark-pi-4c4e70837c86ae1a-driver
    namespace: bla
    labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT
    pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d
    creation time: 2022-09-27T01:19:06Z
    service account name: default
    volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw
    node name: minikube
    start time: 2022-09-27T01:19:06Z
    phase: Running
    container status:
        container name: spark-kubernetes-driver
        container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D
        container state: running
        container started at: 2022-09-27T01:19:07Z
```

Changing the namespace to `bla` with `kubectl`:

```
➜ spark git:(SPARK-40458) ✗ minikube kubectl -- config set-context --current --namespace=bla
Context "minikube" modified.
```

Checking that we can find it with the glob and without specifying the namespace:

```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --status "spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request for the status of submission spark-pi-* in k8s://http://127.0.0.1:8001.
Application status (driver):
    pod name: spark-pi-4c4e70837c86ae1a-driver
    namespace: bla
    labels: spark-app-name -> spark-pi, spark-app-selector -> spark-c95a9a0888214c01a286eb7ba23980a0, spark-role -> driver, spark-version -> 3.4.0-SNAPSHOT
    pod uid: 0be8952e-3e00-47a3-9082-9cb45278ed6d
    creation time: 2022-09-27T01:19:06Z
    service account name: default
    volumes: spark-local-dir-1, spark-conf-volume-driver, kube-api-access-wxnqw
    node name: minikube
    start time: 2022-09-27T01:19:06Z
    phase: Running
    container status:
        container name: spark-kubernetes-driver
        container image: kubespark/spark:3.4.0-SNAPSHOT_064A99CC-57AF-46D5-B743-5B12692C260D
        container state: running
        container started at: 2022-09-27T01:19:07Z
```

Killing the app:

```
➜ spark git:(SPARK-40458) ✗ ./bin/spark-submit --kill "spark-pi-*" --master k8s://http://127.0.0.1:8001
Submitting a request to kill submission spark-pi-* in k8s://http://127.0.0.1:8001.
Grace period in secs: not set.
Deleting driver pod: spark-pi-4c4e70837c86ae1a-driver.
```

Closes apache#37990 from attilapiros/SPARK-40458.

Authored-by: attilapiros <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
(cherry picked from commit fa88651)

* [SPARK-36462][K8S] Add the ability to selectively disable watching or polling

### What changes were proposed in this pull request?

Add the ability to selectively disable watching or polling.

Updated version of apache#34264.

### Why are the changes needed?

Watching or polling for pod status on Kubernetes can place additional load on etcd. With a large number of executors and a large number of jobs this can have a negative impact, and under normal operation executors register themselves with the driver anyway.

### Does this PR introduce _any_ user-facing change?

Two new config flags.

### How was this patch tested?

New unit tests, plus manual testing of a forked version of this change on an internal cluster with both watching and polling disabled.
Closes apache#36433 from holdenk/SPARK-36462-allow-spark-on-kube-to-operate-without-watchers.

Lead-authored-by: Holden Karau <[email protected]>
Co-authored-by: Holden Karau <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
(cherry picked from commit 5bffb98)

* ODP-2201|SPARK-48867 Upgrade okhttp to 4.12.0, okio to 3.9.0 and esdk-obs-java to 3.24.3

* [SPARK-41958][CORE][3.3] Disallow arbitrary custom classpath with proxy user in cluster mode

Backporting the fix for SPARK-41958 to the 3.3 branch from apache#39474.

The description below is from the original PR.

--------------------------

### What changes were proposed in this pull request?

This PR proposes to disallow an arbitrary custom classpath with a proxy user in cluster mode by default.

### Why are the changes needed?

To avoid an arbitrary classpath in a Spark cluster.

### Does this PR introduce _any_ user-facing change?

Yes. Users must re-enable this feature via `spark.submit.proxyUser.allowCustomClasspathInClusterMode`.

### How was this patch tested?

Manually tested.

Closes apache#39474 from Ngone51/dev.

Lead-authored-by: Peter Toth <peter.toth@gmail.com>
Co-authored-by: Yi Wu <yi.wu@databricks.com>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>
(cherry picked from commit 909da96)

### What changes were proposed in this pull request?

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

Closes apache#41428 from degant/spark-41958-3.3.

Lead-authored-by: Degant Puri <[email protected]>
Co-authored-by: Peter Toth <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>

* ODP-2049 Changing Spark3 version from 3.3.3.3.2.3.2-2 to 3.3.3.3.2.3.2-201

* ODP-2049 Changing libthrift version to 0.16 in deps files

* ODP-2049 Changing derby version to 10.14.3.0

---------

Signed-off-by: Dongjoon Hyun <[email protected]>
Co-authored-by: Prashant Singh <[email protected]>
Co-authored-by: yangjie01 <[email protected]>
Co-authored-by: Dongjoon Hyun <[email protected]>
Co-authored-by: attilapiros <[email protected]>
Co-authored-by: Holden Karau <[email protected]>
Co-authored-by: Degant Puri <[email protected]>
Co-authored-by: Peter Toth <[email protected]>
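The SPARK-40458 manual tests above look up applications by a submission ID of the form `namespace:glob` (for example `bla:spark-pi-*`). When the prefix is absent, they fall back to the namespace of the current `kubectl` context. The following Scala sketch models that lookup against an in-memory map of pod names. `SubmissionIdSketch` and its methods are hypothetical illustrations, not Spark's implementation, and the glob handling assumes `*` is the only wildcard.

```scala
import java.util.regex.Pattern

// Hypothetical model of `--status` / `--kill` lookups by submission ID.
object SubmissionIdSketch {
  // "bla:spark-pi-*" -> (Some("bla"), "spark-pi-*"); "spark-pi-*" -> (None, "spark-pi-*").
  // Only the first ':' separates the namespace from the pod-name glob.
  def parse(submissionId: String): (Option[String], String) =
    submissionId.split(":", 2) match {
      case Array(namespace, glob) => (Some(namespace), glob)
      case parts                  => (None, parts.head)
    }

  // Turns a glob whose only wildcard is '*' into a regex; every other character is literal.
  def globToPattern(glob: String): Pattern =
    Pattern.compile(glob.split("\\*", -1).map(s => Pattern.quote(s)).mkString(".*"))

  // Resolves the namespace (explicit prefix, else the current context's namespace)
  // and returns the pod names in it that fully match the glob.
  def matchingPods(
      submissionId: String,
      currentNamespace: String,
      podsByNamespace: Map[String, Seq[String]]): Seq[String] = {
    val (explicitNamespace, glob) = parse(submissionId)
    val namespace = explicitNamespace.getOrElse(currentNamespace)
    val pattern = globToPattern(glob)
    podsByNamespace.getOrElse(namespace, Seq.empty).filter(name => pattern.matcher(name).matches())
  }
}
```

Splitting on the first `:` only keeps any later colons in the pod-name part. `Pattern.quote` keeps characters such as `.` and `-` literal, so only `*` acts as a wildcard.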
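As described in SPARK-41958 above, a custom classpath is disallowed by default only when a proxy user is combined with cluster mode, and `spark.submit.proxyUser.allowCustomClasspathInClusterMode` is the opt-in. A minimal Scala sketch of that decision follows. It assumes a plain `Map[String, String]` in place of `SparkConf`, and the object and method names are hypothetical. It models only the predicate, not what Spark does with a classpath that is not allowed.

```scala
// Hypothetical sketch of the SPARK-41958 rule as described in the commit message.
object ProxyUserClasspathSketch {
  // Config key named in the SPARK-41958 description.
  val AllowCustomClasspathKey = "spark.submit.proxyUser.allowCustomClasspathInClusterMode"

  // The custom classpath is only restricted when a proxy user is used in cluster mode.
  // A missing key counts as "false", matching "disallowed by default".
  def customClasspathAllowed(
      proxyUser: Option[String],
      deployMode: String,
      conf: Map[String, String]): Boolean = {
    val restricted = proxyUser.isDefined && deployMode == "cluster"
    !restricted || conf.get(AllowCustomClasspathKey).exists(_.trim.equalsIgnoreCase("true"))
  }
}
```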

* ODP-2189 Upgrade snakeyaml version to 2.0

* [SPARK-35579][SQL] Bump janino to 3.1.7

### What changes were proposed in this pull request?

Upgrade janino from 3.0.16 to 3.1.7.

### Why are the changes needed?

- The proposed version contains a bug fix in janino by maropu.
  - janino-compiler/janino#148
- It contains the `getBytecodes` method, which can be used to simplify the way to get bytecodes from ClassBodyEvaluator in the CodeGenerator#updateAndGetCompilationStats method. (by LuciferYang)
  - apache#32536

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UTs

Closes apache#37202 from singhpk234/upgrade/bump-janino.

Authored-by: Prashant Singh <[email protected]>
Signed-off-by: Sean Owen <[email protected]>
(cherry picked from commit 29ed337)

* [SPARK-40633][BUILD] Upgrade janino to 3.1.9

### What changes were proposed in this pull request?

This PR aims to upgrade janino from 3.1.7 to 3.1.9.

### Why are the changes needed?

This version brings some improvements and bug fixes, and janino 3.1.9 no longer tests Java 12, 15 and 16 because these STS versions have reached EOL:

- janino-compiler/janino@v3.1.7...v3.1.9

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

- Pass GitHub Actions
- Manually tested this PR with Scala 2.13; all tests passed

Closes apache#38075 from LuciferYang/SPARK-40633.

Lead-authored-by: yangjie01 <[email protected]>
Co-authored-by: YangJie <[email protected]>
Signed-off-by: Sean Owen <[email protected]>
(cherry picked from commit 49e102b)

* ODP-2167 Upgrade janino version from 3.1.9 to 3.1.10

* ODP-2190 Upgrade guava version to 32.1.3-jre

* ODP-2193 Upgrade jettison version to 1.5.4

* ODP-2194 Upgrade wildfly-openssl version to 1.1.3

* ODP-2198 Upgrade gson version to 2.11.0

* ODP-2199 Upgrade kryo-shaded version to 4.0.3

* ODP-2200 Upgrade datanucleus-core and datanucleus-rdbms versions to 5.2.3

* ODP-2203 Upgrade Snappy and commons-compress to 1.1.10.4 and 1.26.0 respectively

* ODP-2198 Excluded gson from tink library

* ODP-2205 Upgrade jdom2 to 2.0.6.1

* ODP-2198 Excluded gson from hive-exec

* ODP-2175|SPARK-47018 Upgrade libthrift version and hive version

* [SPARK-39688][K8S] `getReusablePVCs` should handle accounts with no PVC permission

### What changes were proposed in this pull request?

This PR aims to handle `KubernetesClientException` in the `getReusablePVCs` method, so that cases where the account has no PVC permissions (including `listing`) are handled gracefully.

### Why are the changes needed?

To prevent a regression in Apache Spark 3.4.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs with the newly added test case.

Closes apache#37095 from dongjoon-hyun/SPARK-39688.

Authored-by: Dongjoon Hyun <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
(cherry picked from commit 79f133b)

* [SPARK-40458][K8S] Bump Kubernetes Client Version to 6.1.1

### What changes were proposed in this pull request?

Bump the kubernetes-client version from 5.12.3 to 6.1.1 and clean up all the deprecations.

### Why are the changes needed?

To keep up with kubernetes-client [changes](fabric8io/kubernetes-client@v5.12.3...v6.1.1).
As this upgrade changes the major version, I have cleaned up all the deprecations.

### Does this PR introduce _any_ user-facing change?

No.
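SPARK-35579 above notes that janino 3.1.x exposes `getBytecodes` on `ClassBodyEvaluator`. apache#32536 uses it (as `evaluator.getBytecodes.asScala`) instead of reading the class loader's `classes` field through reflection. The following is a minimal sketch of consuming such a map. It assumes the map is a `java.util.Map[String, Array[Byte]]` from class name to class-file bytes. `BytecodeStatsSketch` and `ClassSizeStats` are hypothetical and much simpler than the statistics `CodeGenerator.updateAndGetCompilationStats` computes. The `scala.jdk.CollectionConverters` import requires Scala 2.13 or later.

```scala
import scala.jdk.CollectionConverters._

object BytecodeStatsSketch {
  // Hypothetical summary type for illustration only.
  final case class ClassSizeStats(numClasses: Int, totalBytes: Int, maxClassBytes: Int)

  // `bytecodes` stands in for what `evaluator.getBytecodes` returns:
  // class name -> class-file bytes.
  def summarize(bytecodes: java.util.Map[String, Array[Byte]]): ClassSizeStats = {
    val sizes = bytecodes.asScala.values.map(_.length).toSeq
    ClassSizeStats(sizes.size, sizes.sum, if (sizes.isEmpty) 0 else sizes.max)
  }
}
```

Per-class byte lengths like these are the kind of value the review thread checks in `CodegenMetrics.METRIC_GENERATED_CLASS_BYTECODE_SIZE`.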
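SPARK-39688 above makes `getReusablePVCs` tolerate accounts that cannot list PVCs by handling `KubernetesClientException`. The Scala sketch below illustrates the pattern: a listing failure is logged and turned into an empty result, so the caller proceeds as if no PVC were available for reuse. To stay self-contained, the sketch substitutes a hypothetical `PvcListingForbidden` exception and a `() => Seq[String]` lister for the real Kubernetes client. The method name and log message are also illustrative.

```scala
object ReusablePvcSketch {
  // Hypothetical stand-in for the client's permission failure; the actual change
  // handles KubernetesClientException thrown by the Kubernetes client.
  final class PvcListingForbidden(message: String) extends RuntimeException(message)

  // Lists reusable PVC names. If the account may not list PVCs, logs the failure
  // and returns an empty Seq instead of propagating the exception.
  def getReusablePvcs(listPvcs: () => Seq[String], log: String => Unit): Seq[String] =
    try listPvcs()
    catch {
      case e: PvcListingForbidden =>
        log(s"Cannot list PVCs, skipping PVC reuse: ${e.getMessage}")
        Seq.empty
    }
}
```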