-

Notifications

You must be signed in to change notification settings - Fork 28.7k

[SPARK-26424][SQL] Use java.time API in date/timestamp expressions #23358

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Test build #100346 has finished for PR 23358 at commit

|

sql/core/src/test/scala/org/apache/spark/sql/DateFunctionsSuite.scala

Outdated

Show resolved

Hide resolved

|

Test build #100363 has finished for PR 23358 at commit

|

|

Test build #100369 has finished for PR 23358 at commit

|

| .appendPattern(pattern) | ||

| .parseDefaulting(ChronoField.YEAR_OF_ERA, 1970) | ||

| .parseDefaulting(ChronoField.ERA, 1) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Era is required in STRICT mode

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

is 1 a reasonable default value for ERA?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think so. This is our current era: https://docs.oracle.com/javase/8/docs/api/java/time/temporal/ChronoField.html#ERA : "The value of the era that was active on 1970-01-01 (ISO) must be assigned the value 1."

| .appendPattern(pattern) | ||

| .parseDefaulting(ChronoField.YEAR_OF_ERA, 1970) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Year must always present in timestamps/dates. Probability of an user is satisfied to default value 1970 is pretty low. Don't think if the user wants to parse let's say 14 Nov, he/she means 14 Nov 1970. I would guess current year but this approach is error prone.

| .parseDefaulting(ChronoField.MONTH_OF_YEAR, 1) | ||

| .parseDefaulting(ChronoField.DAY_OF_MONTH, 1) | ||

| .parseDefaulting(ChronoField.HOUR_OF_DAY, 0) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hours must always present in the time part. The default value causes conflict if the timestamp pattern has a (AM or PM). If there are no hours, we set the time part to zero later.

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

Show resolved

Hide resolved

| } | ||

|

|

||

| protected def toInstantWithZoneId(temporalAccessor: TemporalAccessor, zoneId: ZoneId): Instant = { | ||

| val localDateTime = LocalDateTime.from(temporalAccessor) | ||

| val localTime = if (temporalAccessor.query(TemporalQueries.localTime) == null) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If parsed timestamp does not have the time part at all, set all (hours, minutes, seconds and etc.) to zeros.

| */ | ||

| @throws(classOf[ParseException]) | ||

| @throws(classOf[DateTimeParseException]) | ||

| @throws(classOf[DateTimeException]) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This annotations are required in whole stage codegen otherwise I got an error about some exception catches are not reachable/used.

| @@ -36,7 +50,8 @@ class Iso8601TimestampFormatter( | |||

| pattern: String, | |||

| timeZone: TimeZone, | |||

| locale: Locale) extends TimestampFormatter with DateTimeFormatterHelper { | |||

| private val formatter = buildFormatter(pattern, locale) | |||

| @transient | |||

| private lazy val formatter = buildFormatter(pattern, locale) | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The Iso8601TimestampFormatter class became serializable but the build is still not.

| @@ -27,7 +29,19 @@ import org.apache.commons.lang3.time.FastDateFormat | |||

|

|

|||

| import org.apache.spark.sql.internal.SQLConf | |||

|

|

|||

| sealed trait TimestampFormatter { | |||

| sealed trait TimestampFormatter extends Serializable { | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Making it serializable otherwise I got task not serializable exception from generated code

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

Show resolved

Hide resolved

|

Test build #100371 has finished for PR 23358 at commit

|

|

jenkins, retest this, please |

|

Test build #100393 has finished for PR 23358 at commit

|

|

Test build #100398 has finished for PR 23358 at commit

|

sql/catalyst/src/test/scala/org/apache/spark/sql/util/DateFormatterSuite.scala

Outdated

Show resolved

Hide resolved

sql/catalyst/src/test/scala/org/apache/spark/sql/util/TimestampFormatterSuite.scala

Outdated

Show resolved

Hide resolved

|

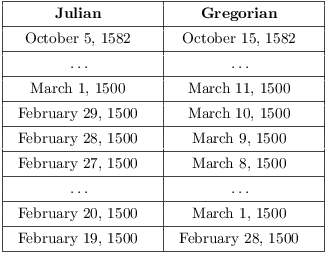

As @hvanhovell mentioned offline, the implementation based on java.time classes changes behavior since it uses IsoChronology which is actually Proleptic Gregorian calendar. It could cause some problems in manipulating old dates. I am going to mention that in the migration guide. I think we can support other calendars like Julian calendar the future by using libraries like ThreeTen-Extra. |

|

R build failed: |

|

jenkins, retest this, please |

|

Test build #100419 has finished for PR 23358 at commit

|

|

Test build #100424 has finished for PR 23358 at commit

|

|

Test build #100426 has finished for PR 23358 at commit

|

|

The migration guide update LGTM. Do we have an example to show the calendar problem? AFAIK SQL standard follows Gregorian calendar, so we shouldn't support Julian calendar. |

|

LGTM |

|

thanks, merging to master! |

## What changes were proposed in this pull request? In the PR, I propose to switch the `DateFormatClass`, `ToUnixTimestamp`, `FromUnixTime`, `UnixTime` on java.time API for parsing/formatting dates and timestamps. The API has been already implemented by the `Timestamp`/`DateFormatter` classes. One of benefit is those classes support parsing timestamps with microsecond precision. Old behaviour can be switched on via SQL config: `spark.sql.legacy.timeParser.enabled` (`false` by default). ## How was this patch tested? It was tested by existing test suites - `DateFunctionsSuite`, `DateExpressionsSuite`, `JsonSuite`, `CsvSuite`, `SQLQueryTestSuite` as well as PySpark tests. Closes apache#23358 from MaxGekk/new-time-cast. Lead-authored-by: Maxim Gekk <[email protected]> Co-authored-by: Maxim Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

## What changes were proposed in this pull request? This PR fixes the codegen bug introduced by apache#23358 . - https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.11/158/ ``` Line 44, Column 93: A method named "apply" is not declared in any enclosing class nor any supertype, nor through a static import ``` ## How was this patch tested? Manual. `DateExpressionsSuite` should be passed with Scala-2.11. Closes apache#23394 from dongjoon-hyun/SPARK-26424. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

## What changes were proposed in this pull request? In the PR, I propose to switch the `DateFormatClass`, `ToUnixTimestamp`, `FromUnixTime`, `UnixTime` on java.time API for parsing/formatting dates and timestamps. The API has been already implemented by the `Timestamp`/`DateFormatter` classes. One of benefit is those classes support parsing timestamps with microsecond precision. Old behaviour can be switched on via SQL config: `spark.sql.legacy.timeParser.enabled` (`false` by default). ## How was this patch tested? It was tested by existing test suites - `DateFunctionsSuite`, `DateExpressionsSuite`, `JsonSuite`, `CsvSuite`, `SQLQueryTestSuite` as well as PySpark tests. Closes apache#23358 from MaxGekk/new-time-cast. Lead-authored-by: Maxim Gekk <[email protected]> Co-authored-by: Maxim Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

## What changes were proposed in this pull request? This PR fixes the codegen bug introduced by apache#23358 . - https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.11/158/ ``` Line 44, Column 93: A method named "apply" is not declared in any enclosing class nor any supertype, nor through a static import ``` ## How was this patch tested? Manual. `DateExpressionsSuite` should be passed with Scala-2.11. Closes apache#23394 from dongjoon-hyun/SPARK-26424. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

What changes were proposed in this pull request?

In the PR, I propose to switch the

DateFormatClass,ToUnixTimestamp,FromUnixTime,UnixTimeon java.time API for parsing/formatting dates and timestamps. The API has been already implemented by theTimestamp/DateFormatterclasses. One of benefit is those classes support parsing timestamps with microsecond precision. Old behaviour can be switched on via SQL config:spark.sql.legacy.timeParser.enabled(falseby default).How was this patch tested?

It was tested by existing test suites -

DateFunctionsSuite,DateExpressionsSuite,JsonSuite,CsvSuite,SQLQueryTestSuiteas well as PySpark tests.