Closed

Labels

bug (Something isn't working)

Description

🐛 Bug

At the start of training, a table with the number of trainable parameters is printed.

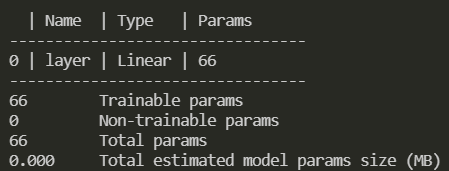

The table does not show the correct count (66) when strategy="deepspeed_stage_3", even when using only 1 GPU:

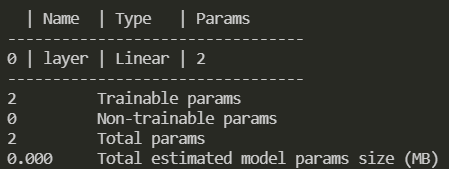

ddp/deepspeed_stage_1/deepspeed_stage_2:

I'm guessing this has something to do with the parameter sharding used in stage 3?

I'm curious to know which 2 parameters are counted, since there are no other parameters in the model.
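To make the sharding guess concrete, here is a minimal sketch of how a parameter count could go wrong. It assumes that DeepSpeed ZeRO stage 3 swaps each parameter's data for a small placeholder tensor and records the full size in a `ds_numel` attribute on the parameter. The helper `count_trainable` is hypothetical, and the sharding is simulated by hand with a one-element placeholder (an assumption; the placeholder size may differ between DeepSpeed versions). Nothing here is confirmed as what the Lightning summary actually does.

```python
import torch


def count_trainable(model: torch.nn.Module) -> int:
    """Sum trainable parameter sizes, preferring `ds_numel` when it is present."""
    total = 0
    for p in model.parameters():
        if p.requires_grad:
            # Assumption: a sharded parameter carries its full element count
            # in `ds_numel`; an unsharded one falls back to `numel()`.
            total += getattr(p, "ds_numel", p.numel())
    return total


layer = torch.nn.Linear(32, 2)
expected = 32 * 2 + 2  # weight (2 x 32) plus bias (2)
plain_count = count_trainable(layer)

# Hand-made simulation of sharding (not real DeepSpeed): remember the full
# size, then replace the data with a one-element placeholder.
for p in layer.parameters():
    p.ds_numel = p.numel()
    p.data = torch.ones(1)

naive_count = sum(p.numel() for p in layer.parameters() if p.requires_grad)
aware_count = count_trainable(layer)
print(expected, plain_count, naive_count, aware_count)
```

In this simulation the naive sum sees one element each for the weight and the bias, so it reports 2, while the `ds_numel`-aware count still reports 66. Whether that is the source of the 2 in the table above remains unverified.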

To Reproduce

```python
import os

import torch
from torch.utils.data import DataLoader, Dataset

from pytorch_lightning import LightningModule, Trainer


class RandomDataset(Dataset):
    """Dataset of random vectors, used only to drive the training loop."""

    def __init__(self, size, num_samples):
        self.len = num_samples
        self.data = torch.randn(num_samples, size)

    def __getitem__(self, index):
        return self.data[index]

    def __len__(self):
        return self.len


class BoringModel(LightningModule):
    def __init__(self):
        super().__init__()
        # 32 * 2 weights + 2 biases = 66 trainable parameters
        self.layer = torch.nn.Linear(32, 2)

    def forward(self, x):
        return self.layer(x)

    def training_step(self, batch, batch_idx):
        loss = self(batch).sum()
        return {"loss": loss}

    def validation_step(self, batch, batch_idx):
        loss = self(batch).sum()

    def configure_optimizers(self):
        return torch.optim.SGD(self.layer.parameters(), lr=0.1)


def run():
    train_data = DataLoader(RandomDataset(32, 64), batch_size=2)
    val_data = DataLoader(RandomDataset(32, 64), batch_size=2)

    model = BoringModel()

    # Reference count computed before the strategy touches the model
    params = sum(p.numel() for p in model.parameters() if p.requires_grad)
    print(f'TRAINABLE PARAMS: {params}')

    trainer = Trainer(
        default_root_dir=os.getcwd(),
        limit_train_batches=1,
        limit_val_batches=1,
        limit_test_batches=1,
        num_sanity_val_steps=0,
        max_epochs=1,
        enable_model_summary=True,
        strategy="deepspeed_stage_3",
        gpus=[0],
    )
    trainer.fit(model, train_dataloaders=train_data, val_dataloaders=val_data)


run()
```

Environment

- pytorch_lightning==1.5.10

- torch==1.10.2

- python==3.8.12

- deepspeed==0.5.10

- OS: Linux

- CUDA/cuDNN: 10.2

shagunsodhani
