deserializeManySparse(

/**

* Encode strings into web-safe base64 format.

- * Refer to the following article for more information on base64 format:

- * en.wikipedia.org/wiki/Base64. Base64 strings may have padding with '=' at the

+ * Refer to this article for more information on

+ * base64 format. Base64 strings may have padding with '=' at the

* end so that the encoded string has a length that is a multiple of 4. See the Padding section of the

* link above.

* Web-safe means that the encoder uses - and _ instead of + and /.
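The web-safe alphabet and the '=' padding described in this Javadoc can be illustrated with the JDK's own `java.util.Base64`. This is only a sketch of the encoding rules, not the TensorFlow op itself; the class name `WebSafeBase64Sketch` and its helper methods are hypothetical.

```java
import java.util.Base64;

public class WebSafeBase64Sketch {
    /** Encodes bytes with the standard alphabet, which uses '+' and '/'. */
    static String standard(byte[] input) {
        return Base64.getEncoder().encodeToString(input);
    }

    /** Encodes bytes with the web-safe alphabet ('-' and '_'), optionally padded with '='. */
    static String webSafe(byte[] input, boolean pad) {
        Base64.Encoder encoder = Base64.getUrlEncoder();
        if (!pad) {
            encoder = encoder.withoutPadding();
        }
        return encoder.encodeToString(input);
    }

    public static void main(String[] args) {
        // Two bytes chosen so that the standard encoding contains both '+' and '/'.
        byte[] bytes = {(byte) 0xfb, (byte) 0xff};
        System.out.println(standard(bytes));       // +/8=
        System.out.println(webSafe(bytes, true));  // -_8=
        System.out.println(webSafe(bytes, false)); // -_8
    }
}
```

With padding enabled, the two input bytes become three characters plus one '=' so that the encoded length is a multiple of 4.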

@@ -992,8 +993,8 @@ public WholeFileReader wholeFileReader(WholeFileReader.Options... options) {

}

/**

- * Writes contents to the file at input filename. Creates file and recursively

- * creates directory if not existing.

+ * Writes {@code contents} to the file at input {@code filename}.

+ * Creates the file and recursively creates directory if it does not exist.

*

* @param filename scalar. The name of the file to which we write the contents.

* @param contents scalar. The content to be written to the output file.
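The "create the file and recursively create the directory" behavior described above has a close analogue in plain Java NIO. The sketch below is not the TensorFlow op; `WriteFileSketch`, `writeCreatingParents`, and `readBack` are hypothetical names used only to show the semantics.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class WriteFileSketch {
    /** Writes contents to file, creating any missing parent directories first. */
    static Path writeCreatingParents(Path file, String contents) {
        try {
            Path parent = file.toAbsolutePath().getParent();
            if (parent != null) {
                // Does nothing if the directories already exist.
                Files.createDirectories(parent);
            }
            // Creates the file, or truncates it if it is already there.
            return Files.write(file, contents.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Reads the whole file back as a UTF-8 string. */
    static String readBack(Path file) {
        try {
            return new String(Files.readAllBytes(file), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path target = Paths.get(System.getProperty("java.io.tmpdir"),
                "write-file-sketch", "nested", "out.txt");
        writeCreatingParents(target, "hello");
        System.out.println(readBack(target));
    }
}
```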

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/MathOps.java b/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/MathOps.java

index d0e1f2ca7f7..2575d62c8d8 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/MathOps.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/MathOps.java

@@ -1866,7 +1866,7 @@ public SegmentProd segmentProd(Operand data,

* For example:

*

* c = tf.constant([[1,2,3,4], [4, 3, 2, 1], [5,6,7,8]])

- * tf.segment_sum(c, tf.constant([0, 0, 1]))

+ * tf.math.segment_sum(c, tf.constant([0, 0, 1]))

* # ==> [[5, 5, 5, 5],

* # [5, 6, 7, 8]]

*

@@ -2056,8 +2056,8 @@ public Tan tan(Operand x) {

* x = tf.constant([-float("inf"), -5, -0.5, 1, 1.2, 2, 3, float("inf")])

* tf.math.tanh(x)

* <tf.Tensor: shape=(8,), dtype=float32, numpy=

- * array([-1. , -0.99990916, -0.46211717, 0.7615942 , 0.8336547 ,

- * 0.9640276 , 0.9950547 , 1. ], dtype=float32)>

+ * array([-1.0, -0.99990916, -0.46211717, 0.7615942 , 0.8336547 ,

+ * 0.9640276 , 0.9950547 , 1.0], dtype=float32)>

*

*

*
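The values in the `tf.math.tanh` example can be cross-checked against the JDK's `Math.tanh`, which returns exactly -1.0 and 1.0 for negative and positive infinity. This is a plain-Java sketch for comparison only; `TanhSketch` is a hypothetical helper and does not call the TensorFlow op.

```java
import java.util.Arrays;

public class TanhSketch {
    /** Applies tanh element-wise, computing in double and narrowing to float. */
    static float[] tanh(float[] x) {
        float[] out = new float[x.length];
        for (int i = 0; i < x.length; i++) {
            out[i] = (float) Math.tanh(x[i]);
        }
        return out;
    }

    public static void main(String[] args) {
        float[] x = {Float.NEGATIVE_INFINITY, -5f, -0.5f, 1f, 1.2f, 2f, 3f,
                Float.POSITIVE_INFINITY};
        System.out.println(Arrays.toString(tanh(x)));
    }
}
```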

@@ -2111,8 +2111,7 @@ public TruncateMod truncateMod(Operand x, Operand y

* Read

* the section on segmentation

* for an explanation of segments.

- * This operator is similar to the unsorted segment sum operator found

- * (here) .

+ *

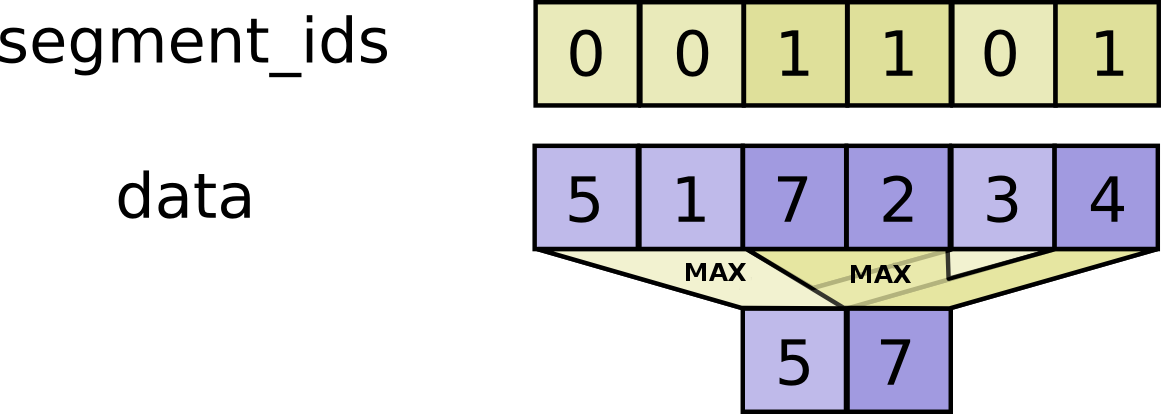

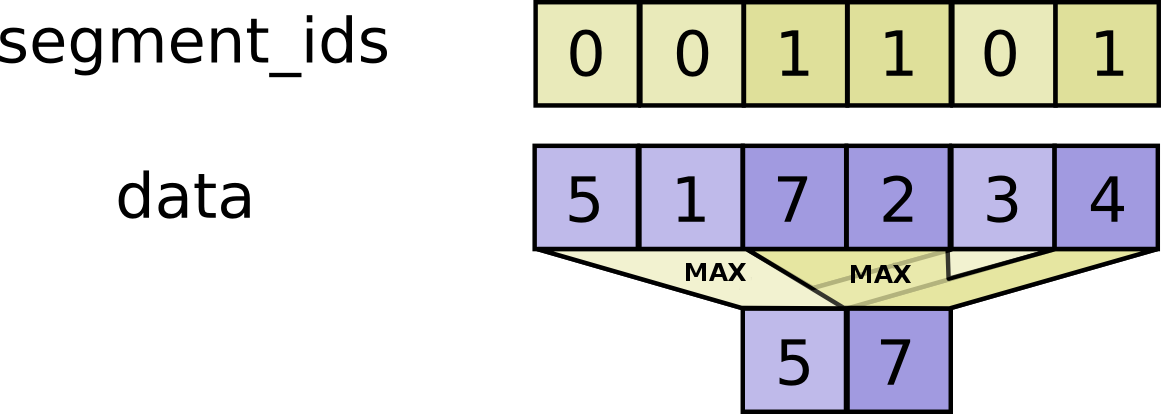

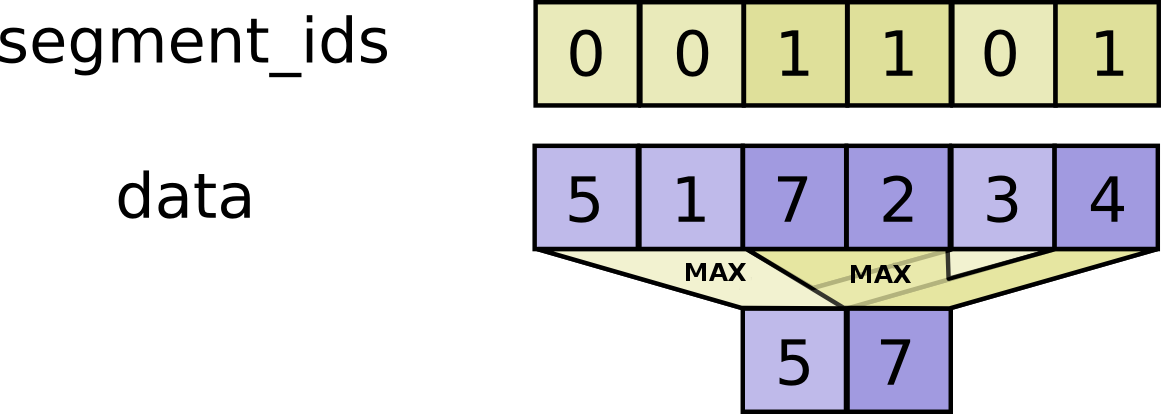

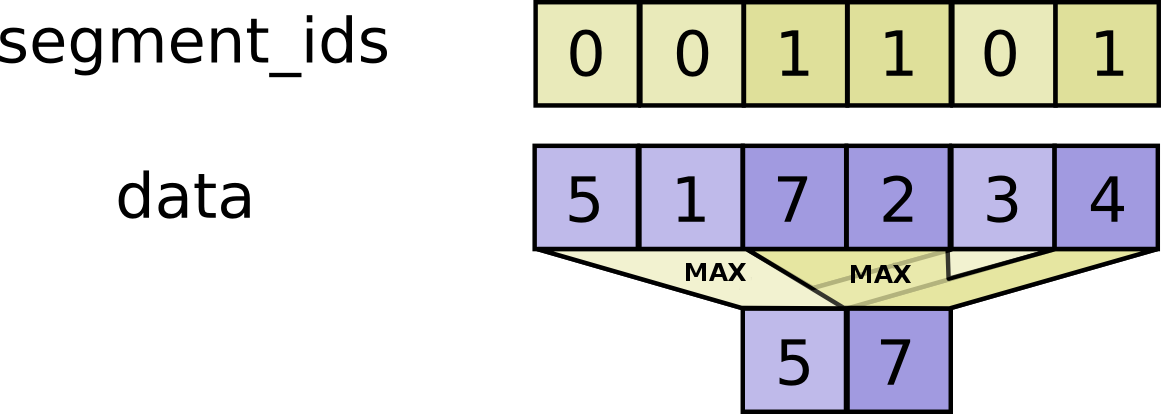

This operator is similar to {@code tf.math.unsorted_segment_sum}.

* Instead of computing the sum over segments, it computes the maximum such that:

*

\(output_i = \max_{j...} data[j...]\) where max is over tuples {@code j...} such

* that {@code segment_ids[j...] == i}.

@@ -2121,20 +2120,33 @@ public TruncateMod truncateMod(Operand x, Operand y

* {@code output[i] = numeric_limits::lowest()}.

* If the given segment ID {@code i} is negative, then the corresponding value is

* dropped, and will not be included in the result.

+ *

Caution: On CPU, values in {@code segment_ids} are always validated to be less than

+ * {@code num_segments}, and an error is thrown for out-of-bound indices. On GPU, this

+ * does not throw an error for out-of-bound indices. On GPU, out-of-bound indices

+ * result in safe but unspecified behavior, which may include ignoring

+ * out-of-bound indices or outputting a tensor with a 0 stored in the first

+ * dimension of its shape if {@code num_segments} is 0.

*

*

*

For example:

- *

- * c = tf.constant([[1,2,3,4], [5,6,7,8], [4,3,2,1]])

- * tf.unsorted_segment_max(c, tf.constant([0, 1, 0]), num_segments=2)

- * # ==> [[ 4, 3, 3, 4],

- * # [5, 6, 7, 8]]

- *

+ *

+ *

+ *

+ * c = tf.constant([[1,2,3,4], [5,6,7,8], [4,3,2,1]])

+ * tf.math.unsorted_segment_max(c, tf.constant([0, 1, 0]), num_segments=2).numpy()

+ * array([[4, 3, 3, 4],

+ * [5, 6, 7, 8]], dtype=int32)

+ *

+ *

+ *

*

* @param data type for {@code output} output

* @param data The data value

* @param segmentIds A tensor whose shape is a prefix of {@code data.shape}.

+ * The values must be less than {@code num_segments}.

+ * Caution: The values are always validated to be in range on CPU, never validated

+ * on GPU.

* @param numSegments The numSegments value

* @param data type for {@code UnsortedSegmentMax} output and operands

* @return a new instance of UnsortedSegmentMax
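The semantics documented for `UnsortedSegmentMax` can be sketched in plain Java on a 2-D `int` array. This is an illustrative reference, not the op's implementation: the class and method names are hypothetical, and throwing on out-of-range IDs is an assumption that mirrors the CPU behavior described in the caution above.

```java
import java.util.Arrays;

public class UnsortedSegmentMaxSketch {
    /** Row-wise maximum of data rows grouped by segment ID (IDs need not be sorted). */
    static int[][] unsortedSegmentMax(int[][] data, int[] segmentIds, int numSegments) {
        if (data.length != segmentIds.length) {
            throw new IllegalArgumentException("one segment ID is required per data row");
        }
        int cols = data.length == 0 ? 0 : data[0].length;
        int[][] output = new int[numSegments][cols];
        // Empty segments keep the lowest possible value, like numeric_limits::lowest().
        for (int[] row : output) {
            Arrays.fill(row, Integer.MIN_VALUE);
        }
        for (int j = 0; j < data.length; j++) {
            int id = segmentIds[j];
            if (id < 0) {
                continue; // negative IDs are dropped
            }
            if (id >= numSegments) {
                // Assumption: fail like the CPU kernel is documented to do.
                throw new IndexOutOfBoundsException("segment ID " + id + " >= " + numSegments);
            }
            for (int k = 0; k < cols; k++) {
                output[id][k] = Math.max(output[id][k], data[j][k]);
            }
        }
        return output;
    }

    public static void main(String[] args) {
        int[][] c = {{1, 2, 3, 4}, {5, 6, 7, 8}, {4, 3, 2, 1}};
        int[][] result = unsortedSegmentMax(c, new int[] {0, 1, 0}, 2);
        System.out.println(Arrays.deepToString(result)); // [[4, 3, 3, 4], [5, 6, 7, 8]]
    }
}
```

Swapping `Math.max` and the fill value for `Math.min` and `Integer.MAX_VALUE`, multiplication and 1, or addition and 0 gives the corresponding min, prod, and sum variants.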

@@ -2149,8 +2161,7 @@ public UnsortedSegmentMax unsortedSegmentMax(Operand d

* Read

* the section on segmentation

* for an explanation of segments.

- * This operator is similar to the unsorted segment sum operator found

- * (here) .

+ *

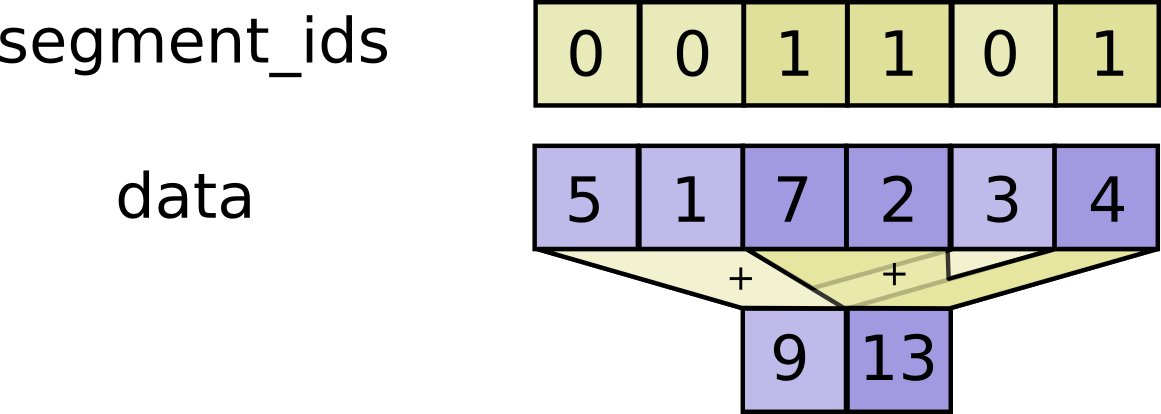

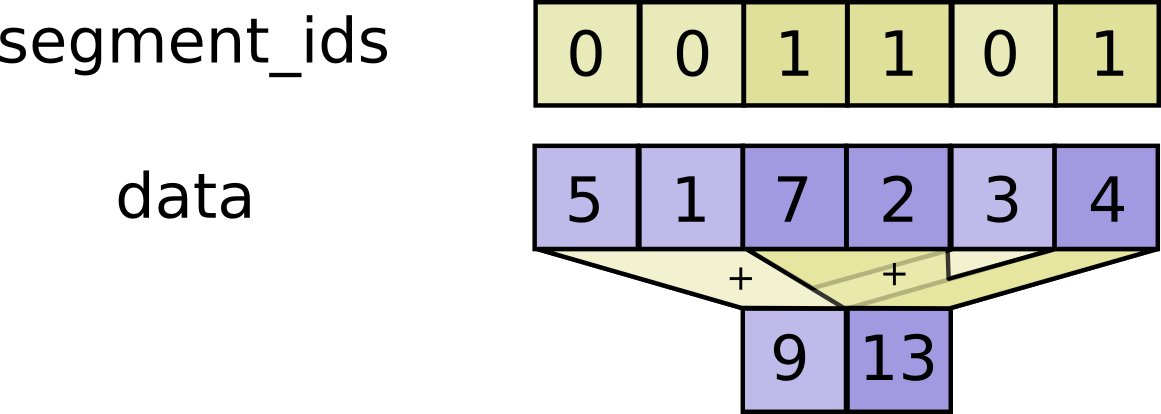

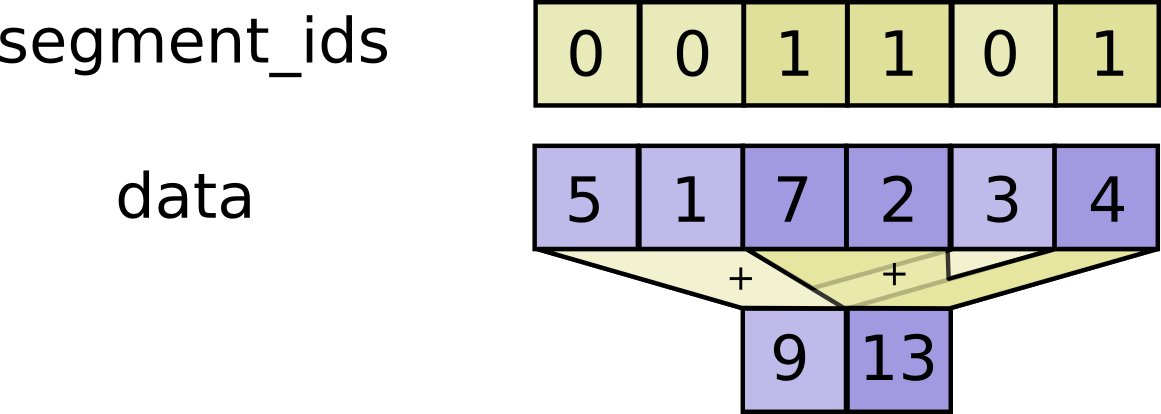

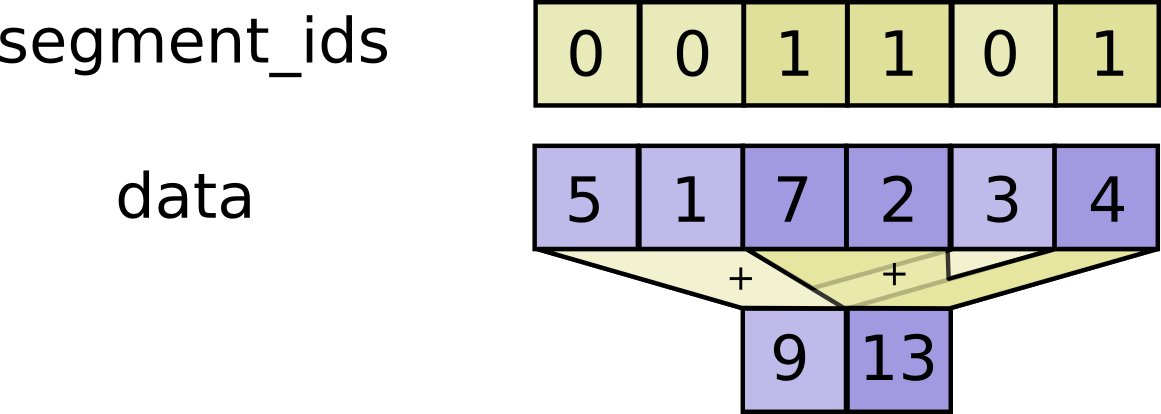

This operator is similar to {@code tf.math.unsorted_segment_sum}.

* Instead of computing the sum over segments, it computes the minimum such that:

*

\(output_i = \min_{j...} data[j...]\) where min is over tuples {@code j...} such

* that {@code segment_ids[j...] == i}.

@@ -2158,18 +2169,31 @@ public UnsortedSegmentMax unsortedSegmentMax(Operand d

* possible value for the specific numeric type,

* {@code output[i] = numeric_limits::max()}.

* For example:

- *

- * c = tf.constant([[1,2,3,4], [5,6,7,8], [4,3,2,1]])

- * tf.unsorted_segment_min(c, tf.constant([0, 1, 0]), num_segments=2)

- * # ==> [[ 1, 2, 2, 1],

- * # [5, 6, 7, 8]]

- *

+ *

+ *

+ *

+ * c = tf.constant([[1,2,3,4], [5,6,7,8], [4,3,2,1]])

+ * tf.math.unsorted_segment_min(c, tf.constant([0, 1, 0]), num_segments=2).numpy()

+ * array([[1, 2, 2, 1],

+ * [5, 6, 7, 8]], dtype=int32)

+ *

+ *

+ *

* If the given segment ID {@code i} is negative, then the corresponding value is

* dropped, and will not be included in the result.

+ *

Caution: On CPU, values in {@code segment_ids} are always validated to be less than

+ * {@code num_segments}, and an error is thrown for out-of-bound indices. On GPU, this

+ * does not throw an error for out-of-bound indices. On GPU, out-of-bound indices

+ * result in safe but unspecified behavior, which may include ignoring

+ * out-of-bound indices or outputting a tensor with a 0 stored in the first

+ * dimension of its shape if {@code num_segments} is 0.

*

* @param data type for {@code output} output

* @param data The data value

* @param segmentIds A tensor whose shape is a prefix of {@code data.shape}.

+ * The values must be less than {@code num_segments}.

+ * Caution: The values are always validated to be in range on CPU, never validated

+ * on GPU.

* @param numSegments The numSegments value

* @param data type for {@code UnsortedSegmentMin} output and operands

* @return a new instance of UnsortedSegmentMin

@@ -2184,26 +2208,38 @@ public UnsortedSegmentMin unsortedSegmentMin(Operand d

* Read

* the section on segmentation

* for an explanation of segments.

- * This operator is similar to the unsorted segment sum operator found

- * (here) .

+ *

This operator is similar to {@code tf.math.unsorted_segment_sum}.

* Instead of computing the sum over segments, it computes the product of all

* entries belonging to a segment such that:

*

\(output_i = \prod_{j...} data[j...]\) where the product is over tuples

* {@code j...} such that {@code segment_ids[j...] == i}.

*

For example:

- *

- * c = tf.constant([[1,2,3,4], [5,6,7,8], [4,3,2,1]])

- * tf.unsorted_segment_prod(c, tf.constant([0, 1, 0]), num_segments=2)

- * # ==> [[ 4, 6, 6, 4],

- * # [5, 6, 7, 8]]

- *

+ *

+ *

+ *

+ * c = tf.constant([[1,2,3,4], [5,6,7,8], [4,3,2,1]])

+ * tf.math.unsorted_segment_prod(c, tf.constant([0, 1, 0]), num_segments=2).numpy()

+ * array([[4, 6, 6, 4],

+ * [5, 6, 7, 8]], dtype=int32)

+ *

+ *

+ *

* If there is no entry for a given segment ID {@code i}, it outputs 1.

*

If the given segment ID {@code i} is negative, then the corresponding value is

* dropped, and will not be included in the result.

+ * Caution: On CPU, values in {@code segment_ids} are always validated to be less than

+ * {@code num_segments}, and an error is thrown for out-of-bound indices. On GPU, this

+ * does not throw an error for out-of-bound indices. On GPU, out-of-bound indices

+ * result in safe but unspecified behavior, which may include ignoring

+ * out-of-bound indices or outputting a tensor with a 0 stored in the first

+ * dimension of its shape if {@code num_segments} is 0.

*

* @param data type for {@code output} output

* @param data The data value

* @param segmentIds A tensor whose shape is a prefix of {@code data.shape}.

+ * The values must be less than {@code num_segments}.

+ * Caution: The values are always validated to be in range on CPU, never validated

+ * on GPU.

* @param numSegments The numSegments value

* @param data type for {@code UnsortedSegmentProd} output and operands

* @return a new instance of UnsortedSegmentProd

@@ -2227,19 +2263,32 @@ public UnsortedSegmentProd unsortedSegmentProd(Operand d

* If the given segment ID {@code i} is negative, the value is dropped and will not be

* added to the sum of the segment.

* {@code num_segments} should equal the number of distinct segment IDs.

+ *

Caution: On CPU, values in {@code segment_ids} are always validated to be less than

+ * {@code num_segments}, and an error is thrown for out-of-bound indices. On GPU, this

+ * does not throw an error for out-of-bound indices. On GPU, out-of-bound indices

+ * result in safe but unspecified behavior, which may include ignoring

+ * out-of-bound indices or outputting a tensor with a 0 stored in the first

+ * dimension of its shape if {@code num_segments} is 0.

*

*

*

- * c = tf.constant([[1,2,3,4], [5,6,7,8], [4,3,2,1]])

- * tf.math.unsorted_segment_sum(c, tf.constant([0, 1, 0]), num_segments=2)

- * # ==> [[ 5, 5, 5, 5],

- * # [5, 6, 7, 8]]

- *

+ *

+ *

+ *

+ * c = [[1,2,3,4], [5,6,7,8], [4,3,2,1]]

+ * tf.math.unsorted_segment_sum(c, [0, 1, 0], num_segments=2).numpy()

+ * array([[5, 5, 5, 5],

+ * [5, 6, 7, 8]], dtype=int32)

+ *

+ *

+ *

*

* @param data type for {@code output} output

* @param data The data value

* @param segmentIds A tensor whose shape is a prefix of {@code data.shape}.

+ * The values must be less than {@code num_segments}.

+ * Caution: The values are always validated to be in range on CPU, never validated

+ * on GPU.

* @param numSegments The numSegments value

* @param data type for {@code UnsortedSegmentSum} output and operands

* @return a new instance of UnsortedSegmentSum

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/Ops.java b/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/Ops.java

index 223754b0480..c5d4de34329 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/Ops.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/Ops.java

@@ -367,20 +367,20 @@ public final class Ops {

public final SparseOps sparse;

- public final BitwiseOps bitwise;

-

public final TpuOps tpu;

- public final AudioOps audio;

+ public final BitwiseOps bitwise;

public final MathOps math;

- public final SignalOps signal;

+ public final AudioOps audio;

- public final TrainOps train;

+ public final SignalOps signal;

public final QuantizationOps quantization;

+ public final TrainOps train;

+

private final Scope scope;

Ops(Scope scope) {

@@ -398,13 +398,13 @@ public final class Ops {

random = new RandomOps(this);

strings = new StringsOps(this);

sparse = new SparseOps(this);

- bitwise = new BitwiseOps(this);

tpu = new TpuOps(this);

- audio = new AudioOps(this);

+ bitwise = new BitwiseOps(this);

math = new MathOps(this);

+ audio = new AudioOps(this);

signal = new SignalOps(this);

- train = new TrainOps(this);

quantization = new QuantizationOps(this);

+ train = new TrainOps(this);

}

/**

@@ -637,11 +637,12 @@ public AssignSubVariableOp assignSubVariableOp(Operand resource

*

* @param resource handle to the resource in which to store the variable.

* @param value the value to set the new tensor to use.

+ * @param options carries optional attribute values

* @return a new instance of AssignVariableOp

*/

public AssignVariableOp assignVariableOp(Operand resource,

- Operand value) {

- return AssignVariableOp.create(scope, resource, value);

+ Operand value, AssignVariableOp.Options... options) {

+ return AssignVariableOp.create(scope, resource, value, options);

}

/**

@@ -2066,7 +2067,10 @@ public CountUpTo countUpTo(Operand ref, Long limit) {

/**

* The op extracts fields from a serialized protocol buffers message into tensors.

- * The {@code decode_proto} op extracts fields from a serialized protocol buffers

+ * Note: This API is designed for orthogonality rather than human-friendliness. It

+ * can be used to parse input protos by hand, but it is intended for use in

+ * generated code.

+ * The {@code decode_proto} op extracts fields from a serialized protocol buffers

* message into tensors. The fields in {@code field_names} are decoded and converted

* to the corresponding {@code output_types} if possible.

*

A {@code message_type} name must be provided to give context for the field names.

@@ -2096,6 +2100,16 @@ public CountUpTo countUpTo(Operand ref, Long limit) {

* {@code DT_INT64}, or using twos-complement if the caller specifies {@code DT_INT32} in

* the {@code output_types} attribute.

*

+ *

+ * {@code map} fields are not directly decoded. They are treated as {@code repeated} fields,

+ * of the appropriate entry type. The proto-compiler defines entry types for each

+ * map field. The type-name is the field name, converted to "CamelCase" with

+ * "Entry" appended. The {@code tf.train.Features.FeatureEntry} message is an example of

+ * one of these implicit {@code Entry} types.

+ *

+ *

+ * {@code enum} fields should be read as int32.

+ *

*

* Both binary and text proto serializations are supported, and can be

* chosen using the {@code format} attribute.
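The naming rule for implicit map entry types (field name converted to CamelCase, with "Entry" appended) can be sketched as a small string helper. `MapEntryNameSketch` and `entryTypeName` are hypothetical names for illustration and are not part of the TensorFlow or protobuf APIs.

```java
public class MapEntryNameSketch {
    /** Converts a snake_case map field name into its implicit entry type name. */
    static String entryTypeName(String fieldName) {
        StringBuilder sb = new StringBuilder();
        boolean upperNext = true; // the first letter is always capitalized
        for (char c : fieldName.toCharArray()) {
            if (c == '_') {
                upperNext = true; // drop the underscore, capitalize what follows
                continue;
            }
            sb.append(upperNext ? Character.toUpperCase(c) : c);
            upperNext = false;
        }
        return sb.append("Entry").toString();
    }

    public static void main(String[] args) {
        System.out.println(entryTypeName("feature"));      // FeatureEntry
        System.out.println(entryTypeName("my_map_field")); // MyMapFieldEntry
    }
}
```

The first call matches the `tf.train.Features.FeatureEntry` example named in the Javadoc, where the map field is called `feature`.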

@@ -7238,7 +7252,21 @@ public TensorScatterNdAdd tensorScatterNdAdd(Operand ten

}

/**

- * The TensorScatterMax operation

+ * Apply a sparse update to a tensor taking the element-wise maximum.

+ * Returns a new tensor copied from {@code tensor} whose values are element-wise maximum between

+ * tensor and updates according to the indices.

+ *

+ *

+ *

+ * tensor = [0, 0, 0, 0, 0, 0, 0, 0]

+ * indices = [[1], [4], [5]]

+ * updates = [1, -1, 1]

+ * tf.tensor_scatter_nd_max(tensor, indices, updates).numpy()

+ * array([0, 1, 0, 0, 0, 1, 0, 0], dtype=int32)

+ *

+ *

+ *

+ * Refer to {@code tf.tensor_scatter_nd_update} for more details.

*

* @param data type for {@code output} output

* @param tensor Tensor to update.
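The sparse element-wise maximum described for `TensorScatterMax` can be sketched for the rank-1 case in plain Java. This is a minimal illustration of the documented semantics under the assumption that each index has exactly one component; `TensorScatterMaxSketch` and `scatterMax` are hypothetical names, not the generated op.

```java
import java.util.Arrays;

public class TensorScatterMaxSketch {
    /** Returns a copy of tensor where out[idx] = max(out[idx], update) for each index. */
    static int[] scatterMax(int[] tensor, int[][] indices, int[] updates) {
        if (indices.length != updates.length) {
            throw new IllegalArgumentException("one update is required per index");
        }
        int[] out = tensor.clone(); // the input tensor is left unmodified
        for (int i = 0; i < indices.length; i++) {
            int idx = indices[i][0]; // rank-1 tensor: each index has one component
            out[idx] = Math.max(out[idx], updates[i]);
        }
        return out;
    }

    public static void main(String[] args) {
        int[] tensor = {0, 0, 0, 0, 0, 0, 0, 0};
        int[][] indices = {{1}, {4}, {5}};
        int[] updates = {1, -1, 1};
        System.out.println(Arrays.toString(scatterMax(tensor, indices, updates)));
        // [0, 1, 0, 0, 0, 1, 0, 0]
    }
}
```

The update of -1 at index 4 has no visible effect because the existing value 0 is already larger.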

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/XlaOps.java b/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/XlaOps.java

index fb1c5e66b71..cdd9ee0ac44 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/XlaOps.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/annotations/org/tensorflow/op/XlaOps.java

@@ -86,12 +86,17 @@ public final class XlaOps {

* @param input Array or a non-empty tuple of arrays to reduce across replicas.

* @param groupAssignment Groups between which the reductions are performed.

* @param reduceOp Reduction computation.

+ * @param mode group mode.

+ * CrossReplica: group_assignment contains replica_id. Each group contains the

+ * replicas for the current partition.

+ * CrossReplicaAndPartition: group_assignment contains replica_id. Each group

+ * contains the replicas for all partitions.

* @param data type for {@code XlaAllReduce} output and operands

* @return a new instance of AllReduce

*/

public AllReduce allReduce(Operand input,

- Operand groupAssignment, String reduceOp) {

- return AllReduce.create(scope, input, groupAssignment, reduceOp);

+ Operand groupAssignment, String reduceOp, String mode) {

+ return AllReduce.create(scope, input, groupAssignment, reduceOp, mode);

}

/**

@@ -130,16 +135,17 @@ public ClusterOutput clusterOutput(Operand input) {

* .

*

* @param data type for {@code output} output

- * @param lhs the input tensor

- * @param rhs the kernel tensor

- * @param windowStrides the inter-window strides

- * @param padding the padding to apply at the start and end of each input dimensions

+ * @param lhs input tensor

+ * @param rhs kernel tensor

+ * @param windowStrides inter-window strides

+ * @param padding padding to apply at the start and end of each input dimension

* @param lhsDilation dilation to apply between input elements

* @param rhsDilation dilation to apply between kernel elements

* @param featureGroupCount number of feature groups for grouped convolution.

- * @param dimensionNumbers a serialized xla::ConvolutionDimensionNumbers proto.

- * @param precisionConfig a serialized xla::PrecisionConfig proto.

- * @param preferredElementType The type of the tensor.

+ * @param dimensionNumbers serialized xla::ConvolutionDimensionNumbers proto.

+ * @param precisionConfig serialized xla::PrecisionConfig proto.

+ * @param preferredElementType type of the tensor.

+ * @param options carries optional attribute values

* @param data type for {@code XlaConvV2} output and operands

* @param data type for {@code XlaConvV2} output and operands

* @return a new instance of Conv

@@ -147,8 +153,9 @@ public ClusterOutput clusterOutput(Operand input) {

public Conv conv(Operand lhs,

Operand rhs, Operand windowStrides, Operand padding,

Operand lhsDilation, Operand rhsDilation, Operand featureGroupCount,

- String dimensionNumbers, String precisionConfig, Class preferredElementType) {

- return Conv.create(scope, lhs, rhs, windowStrides, padding, lhsDilation, rhsDilation, featureGroupCount, dimensionNumbers, precisionConfig, preferredElementType);

+ String dimensionNumbers, String precisionConfig, Class preferredElementType,

+ Conv.Options... options) {

+ return Conv.create(scope, lhs, rhs, windowStrides, padding, lhsDilation, rhsDilation, featureGroupCount, dimensionNumbers, precisionConfig, preferredElementType, options);

}

/**

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Compute_func_Pointer_TF_OpKernelContext.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Compute_func_Pointer_TF_OpKernelContext.java

index 0bb944eed82..9bd5bc022b8 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Compute_func_Pointer_TF_OpKernelContext.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Compute_func_Pointer_TF_OpKernelContext.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Create_func_TF_OpKernelConstruction.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Create_func_TF_OpKernelConstruction.java

index 01c6b8b9cd8..f06b2a1814c 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Create_func_TF_OpKernelConstruction.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Create_func_TF_OpKernelConstruction.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Deallocator_Pointer_long_Pointer.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Deallocator_Pointer_long_Pointer.java

index 245b3e2018a..c3759e7b4b4 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Deallocator_Pointer_long_Pointer.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Deallocator_Pointer_long_Pointer.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Delete_func_Pointer.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Delete_func_Pointer.java

index 2e3b079ae0a..78502fe2469 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Delete_func_Pointer.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Delete_func_Pointer.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradFunc.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradFunc.java

index df5ceb98746..6235d14f0d2 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradFunc.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradFunc.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradOpRegistry.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradOpRegistry.java

index 7a1d69bafbd..f170c382b0f 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradOpRegistry.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/GradOpRegistry.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_BytePointer.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_BytePointer.java

index b01b2c229ea..11d41db1b5d 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_BytePointer.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_BytePointer.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_String.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_String.java

index 2ea782928ac..07d245d0b5c 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_String.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Listener_String.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NameMap.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NameMap.java

index 0be7fab2798..5657520e96f 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NameMap.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NameMap.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeGraphPointer.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeGraphPointer.java

index b947a4b322f..eb94a898ee0 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeGraphPointer.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeGraphPointer.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOperation.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOperation.java

index af771a0aa12..9883b1acbde 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOperation.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOperation.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutput.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutput.java

index cd3020eef5d..c513954ce41 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutput.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutput.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutputVector.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutputVector.java

index 9dc9dd5b971..d9d8f654e0b 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutputVector.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeOutputVector.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeStatus.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeStatus.java

index 193d3f86312..9307a1114a6 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeStatus.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NativeStatus.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Node.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Node.java

index 0bb4543d41c..19fbbdf86b4 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Node.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Node.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

@@ -153,4 +153,11 @@ public class Node extends Pointer {

// Called after an attr has changed. Decides whether we need to update some

// property of the node (stored in props_).

public native void UpdateProperties();

+

+ // Erases type information from the node.

+ public native void ClearTypeInfo();

+

+ // Called after an incident non-control edge has changed. Does nothing if not

+ // all input edges are defined.

+ public native void RunForwardTypeInference();

}

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NodeBuilder.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NodeBuilder.java

index 5922ff38b0a..9aea3e1c83d 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NodeBuilder.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/NodeBuilder.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Shape_inference_func_TF_ShapeInferenceContext_TF_Status.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Shape_inference_func_TF_ShapeInferenceContext_TF_Status.java

index b2168d8a48c..dd0e832881a 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Shape_inference_func_TF_ShapeInferenceContext_TF_Status.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Shape_inference_func_TF_ShapeInferenceContext_TF_Status.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Context.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Context.java

index f3309ed7530..548d576b560 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Context.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Context.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_ContextOptions.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_ContextOptions.java

index 93223d33e0c..fb64dbcb942 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_ContextOptions.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_ContextOptions.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Op.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Op.java

index 9c68d0d9920..31d2f11ad90 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Op.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_Op.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_OpAttrs.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_OpAttrs.java

index 30398899f83..fafd4fb4da6 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_OpAttrs.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_OpAttrs.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorDebugInfo.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorDebugInfo.java

index fcdf2858a91..81da1e06f55 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorDebugInfo.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorDebugInfo.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorHandle.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorHandle.java

index 7fa3f7ec6cb..c0dfdde664e 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorHandle.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TFE_TensorHandle.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

@@ -13,7 +13,7 @@

//

// Like a TF_Tensor, a TFE_TensorHandle refers to a tensor with a value, shape,

// type etc. Unlike a TF_Tensor, a TFE_TensorHandle may refer to such tensors

-// placed in memory of different devices or remote address spaces.

+// placed in the memory of different devices or remote address spaces.

@Opaque @Properties(inherit = org.tensorflow.internal.c_api.presets.tensorflow.class)

public class TFE_TensorHandle extends org.tensorflow.internal.c_api.AbstractTFE_TensorHandle {

/** Empty constructor. Calls {@code super((Pointer)null)}. */

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AllocatorAttributes.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AllocatorAttributes.java

index 4f622e33714..357b2f9de65 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AllocatorAttributes.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AllocatorAttributes.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ApiDefMap.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ApiDefMap.java

index 8da3d79e7f7..ef08c2003d5 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ApiDefMap.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ApiDefMap.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AttrMetadata.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AttrMetadata.java

index 0b83058a276..c6945d8906d 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AttrMetadata.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_AttrMetadata.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Buffer.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Buffer.java

index a8653a2049f..e3c0b9b5625 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Buffer.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Buffer.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeprecatedSession.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeprecatedSession.java

index c2923086177..859803d0e5a 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeprecatedSession.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeprecatedSession.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeviceList.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeviceList.java

index bc86ab8a823..de72b3c9503 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeviceList.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DeviceList.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DimensionHandle.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DimensionHandle.java

index e4bb017db8c..6743400fd7c 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DimensionHandle.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_DimensionHandle.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Function.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Function.java

index 29d075cafc1..390a8bc77fb 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Function.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Function.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_FunctionOptions.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_FunctionOptions.java

index fc12f275678..ff6cd4ad17c 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_FunctionOptions.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_FunctionOptions.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Graph.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Graph.java

index c4d88baf176..b6c60720b51 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Graph.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Graph.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefOptions.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefOptions.java

index 69ccb1f83ff..4c4ab67c75c 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefOptions.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefOptions.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefResults.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefResults.java

index 269a1b07f17..19eb011cc91 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefResults.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ImportGraphDefResults.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Input.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Input.java

index 9e73e8dbf78..76622aeda6f 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Input.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Input.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_KernelBuilder.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_KernelBuilder.java

index e36b7b206bf..cb9b79815c3 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_KernelBuilder.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_KernelBuilder.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Library.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Library.java

index 6be7fcbec8d..d869e510d84 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Library.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Library.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpDefinitionBuilder.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpDefinitionBuilder.java

index 9949307e8e9..5b5aa507e04 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpDefinitionBuilder.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpDefinitionBuilder.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelConstruction.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelConstruction.java

index 0ee6afaae99..3c4bbd5ff8f 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelConstruction.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelConstruction.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelContext.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelContext.java

index 24c0373404d..a62de209c65 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelContext.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OpKernelContext.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Operation.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Operation.java

index 96e5ef47b38..17b40eb0b9c 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Operation.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Operation.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OperationDescription.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OperationDescription.java

index 71738f4ac02..6531a3a5f82 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OperationDescription.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_OperationDescription.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Output.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Output.java

index bd36144620d..0498c9572fd 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Output.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Output.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Scope.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Scope.java

index bea7ec98a25..77a594c3848 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Scope.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Scope.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Server.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Server.java

index aceb639f7af..c4abf9cd874 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Server.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Server.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Session.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Session.java

index a034dd7f647..1fbe6a0c56b 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Session.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Session.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_SessionOptions.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_SessionOptions.java

index 749655f6209..009dda458b6 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_SessionOptions.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_SessionOptions.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeHandle.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeHandle.java

index 2721dcac4b6..5e1386a1e0d 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeHandle.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeHandle.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeInferenceContext.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeInferenceContext.java

index 15f0b1dc8b7..627bca2fd93 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeInferenceContext.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_ShapeInferenceContext.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Status.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Status.java

index 7a70dc56e95..684335ca83b 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Status.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Status.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_StringView.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_StringView.java

index 85ac4ee877f..9d3768b8264 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_StringView.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_StringView.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString.java

index 7b4c3ad59a4..3f4d1531e26 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Large.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Large.java

index e9031c07e18..1e1beba05f4 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Large.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Large.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Offset.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Offset.java

index 19f06db9224..016b058e2ff 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Offset.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Offset.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Raw.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Raw.java

index c9dca734aa0..e67cb474c79 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Raw.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Raw.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Small.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Small.java

index 8efeeb5176d..1be7d9f6851 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Small.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Small.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Union.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Union.java

index d7ba9e1baa4..643add57748 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Union.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_Union.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_View.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_View.java

index b6c3278403d..c5a8cc47398 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_View.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_TString_View.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Tensor.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Tensor.java

index 9966d1cbfe3..6e462cb1d9c 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Tensor.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_Tensor.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_WhileParams.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_WhileParams.java

index 1777d9a4d35..80dfce4c69d 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_WhileParams.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/TF_WhileParams.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Tensor.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Tensor.java

index 3a4952d5b63..f1efc2b1d4d 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Tensor.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/Tensor.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api;

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/global/tensorflow.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/global/tensorflow.java

index 0e153289dff..06a5b588d0f 100644

--- a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/global/tensorflow.java

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/internal/c_api/global/tensorflow.java

@@ -1,4 +1,4 @@

-// Targeted by JavaCPP version 1.5.6: DO NOT EDIT THIS FILE

+// Targeted by JavaCPP version 1.5.7: DO NOT EDIT THIS FILE

package org.tensorflow.internal.c_api.global;

@@ -3509,6 +3509,23 @@ public static native void TF_OpKernelConstruction_GetAttrString(

TF_OpKernelConstruction ctx, String attr_name, @Cast("char*") byte[] val,

@Cast("size_t") long max_length, TF_Status status);

+// Interprets the named kernel construction attribute as tensor and places it

+// into *val. Allocates a new TF_Tensor which the caller is expected to take

+// ownership of (and can deallocate using TF_DeleteTensor). *status is set to

+// TF_OK.

+//

+// If the attribute could not be found or could not be interpreted as

+// tensor, *status is populated with an error.

+public static native void TF_OpKernelConstruction_GetAttrTensor(

+ TF_OpKernelConstruction ctx, @Cast("const char*") BytePointer attr_name, @Cast("TF_Tensor**") PointerPointer val,

+ TF_Status status);

+public static native void TF_OpKernelConstruction_GetAttrTensor(

+ TF_OpKernelConstruction ctx, @Cast("const char*") BytePointer attr_name, @ByPtrPtr TF_Tensor val,

+ TF_Status status);

+public static native void TF_OpKernelConstruction_GetAttrTensor(

+ TF_OpKernelConstruction ctx, String attr_name, @ByPtrPtr TF_Tensor val,

+ TF_Status status);

+

// Interprets the named kernel construction attribute as a TF_DataType array and

// places it into *vals. *status is set to TF_OK.

// `vals` must point to an array of length at least `max_values` (ideally set

@@ -3671,6 +3688,24 @@ public static native void TF_OpKernelConstruction_GetAttrStringList(

@Cast("size_t*") SizeTPointer lengths, int max_values, Pointer storage, @Cast("size_t") long storage_size,

TF_Status status);

+// Interprets the named kernel construction attribute as tensor array and places

+// it into *vals. *status is set to TF_OK.

+// `vals` must point to an array of length at least `max_values`

+// (ideally set to list_size from TF_OpKernelConstruction_GetAttrSize(ctx,

+// attr_name, list_size, total_size)).

+//

+// The caller takes ownership of all the non-null TF_Tensor* entries in `vals`

+// (which can be deleted using TF_DeleteTensor(vals[i])).

+public static native void TF_OpKernelConstruction_GetAttrTensorList(

+ TF_OpKernelConstruction ctx, @Cast("const char*") BytePointer attr_name, @Cast("TF_Tensor**") PointerPointer vals,

+ int max_values, TF_Status status);

+public static native void TF_OpKernelConstruction_GetAttrTensorList(

+ TF_OpKernelConstruction ctx, @Cast("const char*") BytePointer attr_name, @ByPtrPtr TF_Tensor vals,

+ int max_values, TF_Status status);

+public static native void TF_OpKernelConstruction_GetAttrTensorList(

+ TF_OpKernelConstruction ctx, String attr_name, @ByPtrPtr TF_Tensor vals,

+ int max_values, TF_Status status);

+

// Return true if the kernel construction has the attr_name

public static native @Cast("bool") boolean TF_OpKernelConstruction_HasAttr(

TF_OpKernelConstruction ctx, @Cast("const char*") BytePointer attr_name, TF_Status status);

@@ -4286,7 +4321,7 @@ public static native void TFE_ContextSetThreadLocalDevicePlacementPolicy(

public static native @Cast("TFE_ContextDevicePlacementPolicy") int TFE_ContextGetDevicePlacementPolicy(TFE_Context ctx);

// A tensorflow.ServerDef specifies remote workers (in addition to the current

-// workers name). Operations created on this context can then be executed on

+// workers name). Operations created in this context can then be executed on

// any of these remote workers by setting an appropriate device.

//

// If the following is set, all servers identified by the

@@ -4816,7 +4851,7 @@ public static native void TFE_ContextExportRunMetadata(TFE_Context ctx,

// Ends a step. When there is no active step (that is, every started step has

// been ended) step containers will be cleared. Note: it is not safe to call

-// TFE_ContextEndStep while ops which rely on the step container may be running.

+// TFE_ContextEndStep while ops that rely on the step container may be running.

public static native void TFE_ContextEndStep(TFE_Context ctx);

// #ifdef __cplusplus

@@ -5042,6 +5077,8 @@ public static native void TFE_OpSetAttrValueProto(@Const TFE_Op op,

@Namespace("tensorflow") public static native @StdString BytePointer TfCheckOpHelperOutOfLine(

@Const @ByRef NativeStatus v, String msg);

+@Namespace("tensorflow") public static native @StdString BytePointer error_name(@Cast("tensorflow::error::Code") int code);

+

@Namespace("tensorflow") public static native @StdString BytePointer TfCheckOpHelper(@ByVal NativeStatus v,

@Cast("const char*") BytePointer msg);

@Namespace("tensorflow") public static native @StdString BytePointer TfCheckOpHelper(@ByVal NativeStatus v,

diff --git a/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/op/core/AnonymousMutableDenseHashTable.java b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/op/core/AnonymousMutableDenseHashTable.java

new file mode 100644

index 00000000000..2625d9e0395

--- /dev/null

+++ b/tensorflow-core/tensorflow-core-api/src/gen/java/org/tensorflow/op/core/AnonymousMutableDenseHashTable.java

@@ -0,0 +1,259 @@

+/* Copyright 2018 The TensorFlow Authors. All Rights Reserved.

+

+Licensed under the Apache License, Version 2.0 (the "License");

+you may not use this file except in compliance with the License.

+You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing, software

+distributed under the License is distributed on an "AS IS" BASIS,

+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+See the License for the specific language governing permissions and

+limitations under the License.

+=======================================================================*/

+

+// This class has been generated, DO NOT EDIT!

+

+package org.tensorflow.op.core;

+

+import java.util.Arrays;

+import org.tensorflow.GraphOperation;

+import org.tensorflow.Operand;

+import org.tensorflow.Operation;

+import org.tensorflow.OperationBuilder;

+import org.tensorflow.Output;

+import org.tensorflow.ndarray.Shape;

+import org.tensorflow.op.Operands;

+import org.tensorflow.op.RawOp;

+import org.tensorflow.op.RawOpInputs;

+import org.tensorflow.op.Scope;

+import org.tensorflow.op.annotation.Endpoint;

+import org.tensorflow.op.annotation.OpInputsMetadata;

+import org.tensorflow.op.annotation.OpMetadata;

+import org.tensorflow.proto.framework.DataType;

+import org.tensorflow.types.family.TType;

+

+/**

+ * Creates an empty anonymous mutable hash table that uses tensors as the backing store.

+ * This op creates a new anonymous mutable hash table (as a resource) every time

+ * it is executed, with the specified dtype of its keys and values,

+ * returning the resource handle. Each value must be a scalar.

+ * Data can be inserted into the table using

+ * the insert operations. It does not support the initialization operation.

+ * It uses "open addressing" with quadratic reprobing to resolve

+ * collisions.

+ *

+ * The table is anonymous in the sense that it can only be

+ * accessed by the returned resource handle (e.g. it cannot be looked up

+ * by a name in a resource manager). The table will be automatically

+ * deleted when all resource handles pointing to it are gone.

+ */

+@OpMetadata(

+ opType = AnonymousMutableDenseHashTable.OP_NAME,

+ inputsClass = AnonymousMutableDenseHashTable.Inputs.class

+)

+public final class AnonymousMutableDenseHashTable extends RawOp implements Operand {

+ /**

+ * The name of this op, as known by the TensorFlow core engine

+ */

+ public static final String OP_NAME = "AnonymousMutableDenseHashTable";

+

+ private Output tableHandle;

+

+ @SuppressWarnings("unchecked")

+ public AnonymousMutableDenseHashTable(Operation operation) {

+ super(operation, OP_NAME);

+ int outputIdx = 0;

+ tableHandle = operation.output(outputIdx++);

+ }

+

+ /**

+ * Factory method to create a class wrapping a new AnonymousMutableDenseHashTable operation.

+ *

+ * @param scope current scope

+ * @param emptyKey The key used to represent empty key buckets internally. Must not

+ * be used in insert or lookup operations.

+ * @param deletedKey The deletedKey value

+ * @param valueDtype Type of the table values.

+ * @param options carries optional attribute values

+ * @param data type for {@code AnonymousMutableDenseHashTable} output and operands

+ * @param data type for {@code AnonymousMutableDenseHashTable} output and operands

+ * @return a new instance of AnonymousMutableDenseHashTable

+ */

+ @Endpoint(

+ describeByClass = true

+ )

+ public static AnonymousMutableDenseHashTable create(

+ Scope scope, Operand emptyKey, Operand deletedKey, Class valueDtype,

+ Options... options) {

+ OperationBuilder opBuilder = scope.opBuilder(OP_NAME, "AnonymousMutableDenseHashTable");

+ opBuilder.addInput(emptyKey.asOutput());

+ opBuilder.addInput(deletedKey.asOutput());

+ opBuilder.setAttr("value_dtype", Operands.toDataType(valueDtype));

+ if (options != null) {

+ for (Options opts : options) {

+ if (opts.valueShape != null) {

+ opBuilder.setAttr("value_shape", opts.valueShape);

+ }

+ if (opts.initialNumBuckets != null) {

+ opBuilder.setAttr("initial_num_buckets", opts.initialNumBuckets);

+ }

+ if (opts.maxLoadFactor != null) {

+ opBuilder.setAttr("max_load_factor", opts.maxLoadFactor);

+ }

+ }

+ }

+ return new AnonymousMutableDenseHashTable(opBuilder.build());

+ }

+

+ /**

+ * Sets the valueShape option.

+ *

+ * @param valueShape The shape of each value.

+ * @return this Options instance.

+ */

+ public static Options valueShape(Shape valueShape) {

+ return new Options().valueShape(valueShape);

+ }

+

+ /**

+ * Sets the initialNumBuckets option.

+ *

+ * @param initialNumBuckets The initial number of hash table buckets. Must be a power

+ * of 2.

+ * @return this Options instance.

+ */

+ public static Options initialNumBuckets(Long initialNumBuckets) {

+ return new Options().initialNumBuckets(initialNumBuckets);

+ }

+

+ /**

+ * Sets the maxLoadFactor option.

+ *

+ * @param maxLoadFactor The maximum ratio between number of entries and number of

+ * buckets before growing the table. Must be between 0 and 1.

+ * @return this Options instance.

+ */

+ public static Options maxLoadFactor(Float maxLoadFactor) {

+ return new Options().maxLoadFactor(maxLoadFactor);

+ }

+

+ /**

+ * Gets tableHandle.

+ * The resource handle to the newly created hash-table resource.

+ * @return tableHandle.

+ */

+ public Output tableHandle() {

+ return tableHandle;

+ }

+

+ @Override

+ @SuppressWarnings("unchecked")

+ public Output asOutput() {

+ return (Output) tableHandle;

+ }

+

+ /**

+ * Optional attributes for {@link org.tensorflow.op.core.AnonymousMutableDenseHashTable}

+ */

+ public static class Options {

+ private Shape valueShape;

+

+ private Long initialNumBuckets;

+

+ private Float maxLoadFactor;

+

+ private Options() {

+ }

+

+ /**

+ * Sets the valueShape option.

+ *

+ * @param valueShape The shape of each value.

+ * @return this Options instance.

+ */

+ public Options valueShape(Shape valueShape) {

+ this.valueShape = valueShape;

+ return this;

+ }

+

+ /**

+ * Sets the initialNumBuckets option.

+ *

+ * @param initialNumBuckets The initial number of hash table buckets. Must be a power

+ * of 2.

+ * @return this Options instance.

+ */

+ public Options initialNumBuckets(Long initialNumBuckets) {

+ this.initialNumBuckets = initialNumBuckets;

+ return this;

+ }

+

+ /**

+ * Sets the maxLoadFactor option.

+ *

+ * @param maxLoadFactor The maximum ratio between number of entries and number of

+ * buckets before growing the table. Must be between 0 and 1.

+ * @return this Options instance.

+ */

+ public Options maxLoadFactor(Float maxLoadFactor) {

+ this.maxLoadFactor = maxLoadFactor;

+ return this;

+ }

+ }

+

+ @OpInputsMetadata(

+ outputsClass = AnonymousMutableDenseHashTable.class

+ )

+ public static class Inputs extends RawOpInputs {

+ /**

+ * The key used to represent empty key buckets internally. Must not

+ * be used in insert or lookup operations.

+ */

+ public final Operand emptyKey;

+

+ /**

+ * The deletedKey input

+ */

+ public final Operand deletedKey;

+

+ /**

+ * Type of the table keys.

+ */

+ public final DataType keyDtype;

+

+ /**

+ * Type of the table values.

+ */

+ public final DataType valueDtype;

+

+ /**

+ * The shape of each value.

+ */

+ public final Shape valueShape;

+

+ /**

+ * The initial number of hash table buckets. Must be a power

+ * of 2.

+ */

+ public final long initialNumBuckets;

+

+ /**

+ * The maximum ratio between number of entries and number of

+ * buckets before growing the table. Must be between 0 and 1.

+ */

+ public final float maxLoadFactor;

+

+ public Inputs(GraphOperation op) {

+ super(new AnonymousMutableDenseHashTable(op), op, Arrays.asList("key_dtype", "value_dtype", "value_shape", "initial_num_buckets", "max_load_factor"));

+ int inputIndex = 0;

+ emptyKey = (Operand) op.input(inputIndex++);

+ deletedKey = (Operand) op.input(inputIndex++);

+ keyDtype = op.attributes().getAttrType("key_dtype");

+ valueDtype = op.attributes().getAttrType("value_dtype");