diff --git a/.github/workflows/build_documentation.yml b/.github/workflows/build_documentation.yml

index 982795752..ddd416799 100644

--- a/.github/workflows/build_documentation.yml

+++ b/.github/workflows/build_documentation.yml

@@ -14,6 +14,6 @@ jobs:

package: course

path_to_docs: course/chapters/

additional_args: --not_python_module

- languages: ar bn de en es fa fr gj he hi id it ja ko ne pl pt ru rum th tr vi zh-CN zh-TW

+ languages: ar bn de en es fa fr gj he hi id it ja ko ne pl pt ru rum te th tr vi zh-CN zh-TW

secrets:

hf_token: ${{ secrets.HF_DOC_BUILD_PUSH }}

diff --git a/.github/workflows/build_pr_documentation.yml b/.github/workflows/build_pr_documentation.yml

index 53541f297..43664e711 100644

--- a/.github/workflows/build_pr_documentation.yml

+++ b/.github/workflows/build_pr_documentation.yml

@@ -16,4 +16,4 @@ jobs:

package: course

path_to_docs: course/chapters/

additional_args: --not_python_module

- languages: ar bn de en es fa fr gj he hi id it ja ko ne pl pt ru rum th tr vi zh-CN zh-TW

+ languages: ar bn de en es fa fr gj he hi id it ja ko ne pl pt ru rum te th tr vi zh-CN zh-TW

diff --git a/README.md b/README.md

index e8b6311fb..07dadca56 100644

--- a/README.md

+++ b/README.md

@@ -21,12 +21,13 @@ This repo contains the content that's used to create the **[Hugging Face course]

| [Korean](https://huggingface.co/course/ko/chapter1/1) (WIP) | [`chapters/ko`](https://github.com/huggingface/course/tree/main/chapters/ko) | [@Doohae](https://github.com/Doohae), [@wonhyeongseo](https://github.com/wonhyeongseo), [@dlfrnaos19](https://github.com/dlfrnaos19), [@nsbg](https://github.com/nsbg) |

| [Portuguese](https://huggingface.co/course/pt/chapter1/1) (WIP) | [`chapters/pt`](https://github.com/huggingface/course/tree/main/chapters/pt) | [@johnnv1](https://github.com/johnnv1), [@victorescosta](https://github.com/victorescosta), [@LincolnVS](https://github.com/LincolnVS) |

| [Russian](https://huggingface.co/course/ru/chapter1/1) (WIP) | [`chapters/ru`](https://github.com/huggingface/course/tree/main/chapters/ru) | [@pdumin](https://github.com/pdumin), [@svv73](https://github.com/svv73), [@blademoon](https://github.com/blademoon) |

+| [Telugu]( https://huggingface.co/course/te/chapter0/1 ) (WIP) | [`chapters/te`](https://github.com/huggingface/course/tree/main/chapters/te) | [@Ajey95](https://github.com/Ajey95)

| [Thai](https://huggingface.co/course/th/chapter1/1) (WIP) | [`chapters/th`](https://github.com/huggingface/course/tree/main/chapters/th) | [@peeraponw](https://github.com/peeraponw), [@a-krirk](https://github.com/a-krirk), [@jomariya23156](https://github.com/jomariya23156), [@ckingkan](https://github.com/ckingkan) |

| [Turkish](https://huggingface.co/course/tr/chapter1/1) (WIP) | [`chapters/tr`](https://github.com/huggingface/course/tree/main/chapters/tr) | [@tanersekmen](https://github.com/tanersekmen), [@mertbozkir](https://github.com/mertbozkir), [@ftarlaci](https://github.com/ftarlaci), [@akkasayaz](https://github.com/akkasayaz) |

| [Vietnamese](https://huggingface.co/course/vi/chapter1/1) | [`chapters/vi`](https://github.com/huggingface/course/tree/main/chapters/vi) | [@honghanhh](https://github.com/honghanhh) |

| [Chinese (simplified)](https://huggingface.co/course/zh-CN/chapter1/1) | [`chapters/zh-CN`](https://github.com/huggingface/course/tree/main/chapters/zh-CN) | [@zhlhyx](https://github.com/zhlhyx), [petrichor1122](https://github.com/petrichor1122), [@1375626371](https://github.com/1375626371) |

| [Chinese (traditional)](https://huggingface.co/course/zh-TW/chapter1/1) (WIP) | [`chapters/zh-TW`](https://github.com/huggingface/course/tree/main/chapters/zh-TW) | [@davidpeng86](https://github.com/davidpeng86) |

-

+| [Romanian](https://huggingface.co/course/rum/chapter1/1) (WIP) | [`chapters/rum`](https://github.com/huggingface/course/tree/main/chapters/rum) | [@Sigmoid](https://github.com/SigmoidAI), [@eduard-balamatiuc](https://github.com/eduard-balamatiuc), [@FriptuLudmila](https://github.com/FriptuLudmila), [@tokyo-s](https://github.com/tokyo-s), [@hbkdesign](https://github.com/hbkdesign) |

### Translating the course into your language

diff --git a/chapters/en/_toctree.yml b/chapters/en/_toctree.yml

index 7f8d105ca..9a30743e0 100644

--- a/chapters/en/_toctree.yml

+++ b/chapters/en/_toctree.yml

@@ -14,7 +14,7 @@

- local: chapter1/4

title: How do Transformers work?

- local: chapter1/5

- title: How 🤗 Transformers solve tasks

+ title: Solving Tasks with Transformers

- local: chapter1/6

title: Transformer Architectures

- local: chapter1/7

@@ -46,6 +46,8 @@

- local: chapter2/7

title: Basic usage completed!

- local: chapter2/8

+ title: Optimized Inference Deployment

+ - local: chapter2/9

title: End-of-chapter quiz

quiz: 2

diff --git a/chapters/en/chapter12/3a.mdx b/chapters/en/chapter12/3a.mdx

index b4effb193..6235680e4 100644

--- a/chapters/en/chapter12/3a.mdx

+++ b/chapters/en/chapter12/3a.mdx

@@ -10,11 +10,11 @@ Let's deepen our understanding of GRPO so that we can improve our model's traini

GRPO directly evaluates the model-generated responses by comparing them within groups of generation to optimize policy model, instead of training a separate value model (Critic). This approach leads to significant reduction in computational cost!

-GRPO can be applied to any verifiable task where the correctness of the response can be determined. For instance, in math reasoning, the correctness of the response can be easily verified by comparing it to the ground truth.

+GRPO can be applied to any verifiable task where the correctness of the response can be determined. For instance, in math reasoning, the correctness of the response can be easily verified by comparing it to the ground truth.

Before diving into the technical details, let's visualize how GRPO works at a high level:

-

+

Now that we have a visual overview, let's break down how GRPO works step by step.

@@ -28,14 +28,19 @@ Let's walk through each step of the algorithm in detail:

The first step is to generate multiple possible answers for each question. This creates a diverse set of outputs that can be compared against each other.

-For each question $q$, the model will generate $G$ outputs (group size) from the trained policy:{ ${o_1, o_2, o_3, \dots, o_G}\pi_{\theta_{\text{old}}}$ }, $G=8$ where each $o_i$ represents one completion from the model.

+For each question \\( q \\), the model will generate \\( G \\) outputs (group size) from the trained policy: { \\( {o_1, o_2, o_3, \dots, o_G}\pi_{\theta_{\text{old}}} \\) }, \\( G=8 \\) where each \\( o_i \\) represents one completion from the model.

-#### Example:

+#### Example

To make this concrete, let's look at a simple arithmetic problem:

-- **Question** $q$ : $\text{Calculate}\space2 + 2 \times 6$

-- **Outputs** $(G = 8)$: $\{o_1:14 \text{ (correct)}, o_2:16 \text{ (wrong)}, o_3:10 \text{ (wrong)}, \ldots, o_8:14 \text{ (correct)}\}$

+**Question**

+

+\\( q \\) : \\( \text{Calculate}\space2 + 2 \times 6 \\)

+

+**Outputs**

+

+\\( (G = 8) \\): \\( \{o_1:14 \text{ (correct)}, o_2:16 \text{ (wrong)}, o_3:10 \text{ (wrong)}, \ldots, o_8:14 \text{ (correct)}\} \\)

Notice how some of the generated answers are correct (14) while others are wrong (16 or 10). This diversity is crucial for the next step.

@@ -43,34 +48,36 @@ Notice how some of the generated answers are correct (14) while others are wrong

Once we have multiple responses, we need a way to determine which ones are better than others. This is where the advantage calculation comes in.

-#### Reward Distribution:

+#### Reward Distribution

First, we assign a reward score to each generated response. In this example, we'll use a reward model, but as we learnt in the previous section, we can use any reward returning function.

-Assign a RM score to each of the generated responses based on the correctness $r_i$ *(e.g. 1 for correct response, 0 for wrong response)* then for each of the $r_i$ calculate the following Advantage value

+Assign a RM score to each of the generated responses based on the correctness \\( r_i \\) *(e.g. 1 for correct response, 0 for wrong response)* then for each of the \\( r_i \\) calculate the following Advantage value.

-#### Advantage Value Formula:

+#### Advantage Value Formula

The key insight of GRPO is that we don't need absolute measures of quality - we can compare outputs within the same group. This is done using standardization:

$$A_i = \frac{r_i - \text{mean}(\{r_1, r_2, \ldots, r_G\})}{\text{std}(\{r_1, r_2, \ldots, r_G\})}$$

-#### Example:

+#### Example

Continuing with our arithmetic example for the same example above, imagine we have 8 responses, 4 of which is correct and the rest wrong, therefore;

-- Group Average: $mean(r_i) = 0.5$

-- Std: $std(r_i) = 0.53$

-- Advantage Value:

- - Correct response: $A_i = \frac{1 - 0.5}{0.53}= 0.94$

- - Wrong response: $A_i = \frac{0 - 0.5}{0.53}= -0.94$

-#### Interpretation:

+| Metric | Value |

+|--------|-------|

+| Group Average | \\( mean(r_i) = 0.5 \\) |

+| Standard Deviation | \\( std(r_i) = 0.53 \\) |

+| Advantage Value (Correct response) | \\( A_i = \frac{1 - 0.5}{0.53}= 0.94 \\) |

+| Advantage Value (Wrong response) | \\( A_i = \frac{0 - 0.5}{0.53}= -0.94 \\) |

+

+#### Interpretation

Now that we have calculated the advantage values, let's understand what they mean:

-This standardization (i.e. $A_i$ weighting) allows the model to assess each response's relative performance, guiding the optimization process to favour responses that are better than average (high reward) and discourage those that are worse. For instance if $A_i > 0$, then the $o_i$ is better response than the average level within its group; and if $A_i < 0$, then the $o_i$ then the quality of the response is less than the average (i.e. poor quality/performance).

+This standardization (i.e. \\( A_i \\) weighting) allows the model to assess each response's relative performance, guiding the optimization process to favorable responses that are better than average (high reward) and discourage those that are worse. For instance if \\( A_i > 0 \\), then the \\( o_i \\) is better response than the average level within its group; and if \\( A_i < 0 \\), then the \\( o_i \\) then the quality of the response is less than the average (i.e. poor quality/performance).

-For the example above, if $A_i = 0.94 \text{(correct output)}$ then during optimization steps its generation probability will be increased.

+For the example above, if \\( A_i = 0.94 \text{(correct output)} \\) then during optimization steps its generation probability will be increased.

With our advantage values calculated, we're now ready to update the policy.

@@ -80,7 +87,7 @@ The final step is to use these advantage values to update our model so that it b

The target function for policy update is:

-$$J_{GRPO}(\theta) = \left[\frac{1}{G} \sum_{i=1}^{G} \min \left( \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)} A_i \text{clip}\left( \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)}, 1 - \epsilon, 1 + \epsilon \right) A_i \right)\right]- \beta D_{KL}(\pi_{\theta} || \pi_{ref})$$

+$$J_{GRPO}(\theta) = \left[\frac{1}{G} \sum_{i=1}^{G} \min \left( \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)} A_i \text{clip}\left( \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)}, 1 - \epsilon, 1 + \epsilon \right) A_i \right)\right]- \beta D_{KL}(\pi_{\theta} \|\| \pi_{ref})$$

This formula might look intimidating at first, but it's built from several components that each serve an important purpose. Let's break them down one by one.

@@ -92,13 +99,14 @@ The GRPO update function combines several techniques to ensure stable and effect

The probability ratio is defined as:

-$\left(\frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)}\right)$

+\\( \left(\frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)}\right) \\)

Intuitively, the formula compares how much the new model's response probability differs from the old model's response probability while incorporating a preference for responses that improve the expected outcome.

-#### Interpretation:

-- If $\text{ratio} > 1$, the new model assigns a higher probability to response $o_i$ than the old model.

-- If $\text{ratio} < 1$, the new model assigns a lower probability to $o_i$

+#### Interpretation

+

+- If \\( \text{ratio} > 1 \\), the new model assigns a higher probability to response \\( o_i \\) than the old model.

+- If \\( \text{ratio} < 1 \\), the new model assigns a lower probability to \\( o_i \\)

This ratio allows us to control how much the model changes at each step, which leads us to the next component.

@@ -106,22 +114,25 @@ This ratio allows us to control how much the model changes at each step, which l

The clipping function is defined as:

-$\text{clip}\left( \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)}, 1 - \epsilon, 1 + \epsilon\right)$

+\\( \text{clip}\left( \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)}, 1 - \epsilon, 1 + \epsilon\right) \\)

-Limit the ratio discussed above to be within $[1 - \epsilon, 1 + \epsilon]$ to avoid/control drastic changes or crazy updates and stepping too far off from the old policy. In other words, it limit how much the probability ratio can increase to help maintaining stability by avoiding updates that push the new model too far from the old one.

+Limit the ratio discussed above to be within \\( [1 - \epsilon, 1 + \epsilon] \\) to avoid/control drastic changes or crazy updates and stepping too far off from the old policy. In other words, it limit how much the probability ratio can increase to help maintaining stability by avoiding updates that push the new model too far from the old one.

+

+#### Example (ε = 0.2)

-#### Example $\space \text{suppose}(\epsilon = 0.2)$

Let's look at two different scenarios to better understand this clipping function:

- **Case 1**: if the new policy has a probability of 0.9 for a specific response and the old policy has a probabiliy of 0.5, it means this response is getting reinforeced by the new policy to have higher probability, but within a controlled limit which is the clipping to tight up its hands to not get drastic

- - $\text{Ratio}: \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)} = \frac{0.9}{0.5} = 1.8 → \text{Clip}\space1.2$ (upper bound limit 1.2)

+ - \\( \text{Ratio}: \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)} = \frac{0.9}{0.5} = 1.8 → \text{Clip}\space1.2 \\) (upper bound limit 1.2)

- **Case 2**: If the new policy is not in favour of a response (lower probability e.g. 0.2), meaning if the response is not beneficial the increase might be incorrect, and the model would be penalized.

- - $\text{Ratio}: \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)} = \frac{0.2}{0.5} = 0.4 →\text{Clip}\space0.8$ (lower bound limit 0.8)

-#### Interpretation:

+ - \\( \text{Ratio}: \frac{\pi_{\theta}(o_i|q)}{\pi_{\theta_{old}}(o_i|q)} = \frac{0.2}{0.5} = 0.4 →\text{Clip}\space0.8 \\) (lower bound limit 0.8)

+

+#### Interpretation

+

- The formula encourages the new model to favour responses that the old model underweighted **if they improve the outcome**.

-- If the old model already favoured a response with a high probability, the new model can still reinforce it **but only within a controlled limit $[1 - \epsilon, 1 + \epsilon]$, $\text{(e.g., }\epsilon = 0.2, \space \text{so} \space [0.8-1.2])$**.

+- If the old model already favoured a response with a high probability, the new model can still reinforce it **but only within a controlled limit \\( [1 - \epsilon, 1 + \epsilon] \\), \\( \text{(e.g., }\epsilon = 0.2, \space \text{so} \space [0.8-1.2]) \\)**.

- If the old model overestimated a response that performs poorly, the new model is **discouraged** from maintaining that high probability.

-- Therefore, intuitively, By incorporating the probability ratio, the objective function ensures that updates to the policy are proportional to the advantage $A_i$ while being moderated to prevent drastic changes. T

+- Therefore, intuitively, By incorporating the probability ratio, the objective function ensures that updates to the policy are proportional to the advantage \\( A_i \\) while being moderated to prevent drastic changes. T

While the clipping function helps prevent drastic changes, we need one more safeguard to ensure our model doesn't deviate too far from its original behavior.

@@ -129,33 +140,35 @@ While the clipping function helps prevent drastic changes, we need one more safe

The KL divergence term is:

-$\beta D_{KL}(\pi_{\theta} || \pi_{ref})$

+\\( \beta D_{KL}(\pi_{\theta} \|\| \pi_{ref}) \\)

-In the KL divergence term, the $\pi_{ref}$ is basically the pre-update model's output, `per_token_logps` and $\pi_{\theta}$ is the new model's output, `new_per_token_logps`. Theoretically, KL divergence is minimized to prevent the model from deviating too far from its original behavior during optimization. This helps strike a balance between improving performance based on the reward signal and maintaining coherence. In this context, minimizing KL divergence reduces the risk of the model generating nonsensical text or, in the case of mathematical reasoning, producing extremely incorrect answers.

+In the KL divergence term, the \\( \pi_{ref} \\) is basically the pre-update model's output, `per_token_logps` and \\( \pi_{\theta} \\) is the new model's output, `new_per_token_logps`. Theoretically, KL divergence is minimized to prevent the model from deviating too far from its original behavior during optimization. This helps strike a balance between improving performance based on the reward signal and maintaining coherence. In this context, minimizing KL divergence reduces the risk of the model generating nonsensical text or, in the case of mathematical reasoning, producing extremely incorrect answers.

#### Interpretation

+

- A KL divergence penalty keeps the model's outputs close to its original distribution, preventing extreme shifts.

- Instead of drifting towards completely irrational outputs, the model would refine its understanding while still allowing some exploration

#### Math Definition

+

For those interested in the mathematical details, let's look at the formal definition:

Recall that KL distance is defined as follows:

-$$D_{KL}(P || Q) = \sum_{x \in X} P(x) \log \frac{P(x)}{Q(x)}$$

+$$D_{KL}(P \|\| Q) = \sum_{x \in X} P(x) \log \frac{P(x)}{Q(x)}$$

In RLHF, the two distributions of interest are often the distribution of the new model version, P(x), and a distribution of the reference policy, Q(x).

-#### The Role of $\beta$ Parameter

+#### The Role of β Parameter

-The coefficient $\beta$ controls how strongly we enforce the KL divergence constraint:

+The coefficient \\( \beta \\) controls how strongly we enforce the KL divergence constraint:

-- **Higher $\beta$ (Stronger KL Penalty)**

+- **Higher β (Stronger KL Penalty)**

- More constraint on policy updates. The model remains close to its reference distribution.

- Can slow down adaptation: The model may struggle to explore better responses.

-- **Lower $\beta$ (Weaker KL Penalty)**

+- **Lower β (Weaker KL Penalty)**

- More freedom to update policy: The model can deviate more from the reference.

- Faster adaptation but risk of instability: The model might learn reward-hacking behaviors.

- Over-optimization risk: If the reward model is flawed, the policy might generate nonsensical outputs.

-- **Original** [DeepSeekMath](https://arxiv.org/abs/2402.03300) paper set this $\beta= 0.04$

+- **Original** [DeepSeekMath](https://arxiv.org/abs/2402.03300) paper set this \\( \beta= 0.04 \\)

Now that we understand the components of GRPO, let's see how they work together in a complete example.

@@ -169,9 +182,9 @@ $$\text{Q: Calculate}\space2 + 2 \times 6$$

### Step 1: Group Sampling

-First, we generate multiple responses from our model:

+First, we generate multiple responses from our model.

-Generate $(G = 8)$ responses, $4$ of which are correct answer ($14, \text{reward=} 1$) and $4$ incorrect $\text{(reward= 0)}$, Therefore:

+Generate \\( (G = 8) \\) responses, \\( 4 \\) of which are correct answer (\\( 14, \text{reward=} 1 \\)) and \\( 4 \\) incorrect \\( \text{(reward= 0)} \\), Therefore:

$${o_1:14(correct), o_2:10 (wrong), o_3:16 (wrong), ... o_G:14(correct)}$$

@@ -179,20 +192,20 @@ $${o_1:14(correct), o_2:10 (wrong), o_3:16 (wrong), ... o_G:14(correct)}$$

Next, we calculate the advantage values to determine which responses are better than average:

-- Group Average:

-$$mean(r_i) = 0.5$$

-- Std: $$std(r_i) = 0.53$$

-- Advantage Value:

- - Correct response: $A_i = \frac{1 - 0.5}{0.53}= 0.94$

- - Wrong response: $A_i = \frac{0 - 0.5}{0.53}= -0.94$

+| Statistic | Value |

+|-----------|-------|

+| Group Average | \\( mean(r_i) = 0.5 \\) |

+| Standard Deviation | \\( std(r_i) = 0.53 \\) |

+| Advantage Value (Correct response) | \\( A_i = \frac{1 - 0.5}{0.53}= 0.94 \\) |

+| Advantage Value (Wrong response) | \\( A_i = \frac{0 - 0.5}{0.53}= -0.94 \\) |

### Step 3: Policy Update

Finally, we update our model to reinforce the correct responses:

-- Assuming the probability of old policy ($\pi_{\theta_{old}}$) for a correct output $o_1$ is $0.5$ and the new policy increases it to $0.7$ then:

+- Assuming the probability of old policy (\\( \pi_{\theta_{old}} \\)) for a correct output \\( o_1 \\) is \\( 0.5 \\) and the new policy increases it to \\( 0.7 \\) then:

$$\text{Ratio}: \frac{0.7}{0.5} = 1.4 →\text{after Clip}\space1.2 \space (\epsilon = 0.2)$$

-- Then when the target function is re-weighted, the model tends to reinforce the generation of correct output, and the $\text{KL Divergence}$ limits the deviation from the reference policy.

+- Then when the target function is re-weighted, the model tends to reinforce the generation of correct output, and the \\( \text{KL Divergence} \\) limits the deviation from the reference policy.

With the theoretical understanding in place, let's see how GRPO can be implemented in code.

diff --git a/chapters/en/chapter12/img/1.png b/chapters/en/chapter12/img/1.png

deleted file mode 100644

index a53e8a186..000000000

Binary files a/chapters/en/chapter12/img/1.png and /dev/null differ

diff --git a/chapters/en/chapter12/img/2.jpg b/chapters/en/chapter12/img/2.jpg

deleted file mode 100644

index d1fd22662..000000000

Binary files a/chapters/en/chapter12/img/2.jpg and /dev/null differ

diff --git a/chapters/en/chapter2/1.mdx b/chapters/en/chapter2/1.mdx

index 16347ca94..70e290a9d 100644

--- a/chapters/en/chapter2/1.mdx

+++ b/chapters/en/chapter2/1.mdx

@@ -10,7 +10,7 @@ As you saw in [Chapter 1](/course/chapter1), Transformer models are usually very

The 🤗 Transformers library was created to solve this problem. Its goal is to provide a single API through which any Transformer model can be loaded, trained, and saved. The library's main features are:

- **Ease of use**: Downloading, loading, and using a state-of-the-art NLP model for inference can be done in just two lines of code.

-- **Flexibility**: At their core, all models are simple PyTorch `nn.Module` or TensorFlow `tf.keras.Model` classes and can be handled like any other models in their respective machine learning (ML) frameworks.

+- **Flexibility**: At their core, all models are simple PyTorch `nn.Module` classes and can be handled like any other models in their respective machine learning (ML) frameworks.

- **Simplicity**: Hardly any abstractions are made across the library. The "All in one file" is a core concept: a model's forward pass is entirely defined in a single file, so that the code itself is understandable and hackable.

This last feature makes 🤗 Transformers quite different from other ML libraries. The models are not built on modules

diff --git a/chapters/en/chapter2/2.mdx b/chapters/en/chapter2/2.mdx

index 2a35669d7..205e07e51 100644

--- a/chapters/en/chapter2/2.mdx

+++ b/chapters/en/chapter2/2.mdx

@@ -2,8 +2,6 @@

# Behind the pipeline[[behind-the-pipeline]]

-{#if fw === 'pt'}

-

-{:else}

-

-

-

-{/if}

-

-

-This is the first section where the content is slightly different depending on whether you use PyTorch or TensorFlow. Toggle the switch on top of the title to select the platform you prefer!

-

-

-{#if fw === 'pt'}

-{:else}

-

-{/if}

Let's start with a complete example, taking a look at what happened behind the scenes when we executed the following code in [Chapter 1](/course/chapter1):

@@ -83,11 +62,10 @@ tokenizer = AutoTokenizer.from_pretrained(checkpoint)

Once we have the tokenizer, we can directly pass our sentences to it and we'll get back a dictionary that's ready to feed to our model! The only thing left to do is to convert the list of input IDs to tensors.

-You can use 🤗 Transformers without having to worry about which ML framework is used as a backend; it might be PyTorch or TensorFlow, or Flax for some models. However, Transformer models only accept *tensors* as input. If this is your first time hearing about tensors, you can think of them as NumPy arrays instead. A NumPy array can be a scalar (0D), a vector (1D), a matrix (2D), or have more dimensions. It's effectively a tensor; other ML frameworks' tensors behave similarly, and are usually as simple to instantiate as NumPy arrays.

+You can use 🤗 Transformers without having to worry about which ML framework is used as a backend; it might be PyTorch or Flax for some models. However, Transformer models only accept *tensors* as input. If this is your first time hearing about tensors, you can think of them as NumPy arrays instead. A NumPy array can be a scalar (0D), a vector (1D), a matrix (2D), or have more dimensions. It's effectively a tensor; other ML frameworks' tensors behave similarly, and are usually as simple to instantiate as NumPy arrays.

-To specify the type of tensors we want to get back (PyTorch, TensorFlow, or plain NumPy), we use the `return_tensors` argument:

+To specify the type of tensors we want to get back (PyTorch or plain NumPy), we use the `return_tensors` argument:

-{#if fw === 'pt'}

```python

raw_inputs = [

"I've been waiting for a HuggingFace course my whole life.",

@@ -96,21 +74,9 @@ raw_inputs = [

inputs = tokenizer(raw_inputs, padding=True, truncation=True, return_tensors="pt")

print(inputs)

```

-{:else}

-```python

-raw_inputs = [

- "I've been waiting for a HuggingFace course my whole life.",

- "I hate this so much!",

-]

-inputs = tokenizer(raw_inputs, padding=True, truncation=True, return_tensors="tf")

-print(inputs)

-```

-{/if}

Don't worry about padding and truncation just yet; we'll explain those later. The main things to remember here are that you can pass one sentence or a list of sentences, as well as specifying the type of tensors you want to get back (if no type is passed, you will get a list of lists as a result).

-{#if fw === 'pt'}

-

Here's what the results look like as PyTorch tensors:

```python out

@@ -125,31 +91,11 @@ Here's what the results look like as PyTorch tensors:

])

}

```

-{:else}

-

-Here's what the results look like as TensorFlow tensors:

-

-```python out

-{

- 'input_ids': ,

- 'attention_mask':

-}

-```

-{/if}

The output itself is a dictionary containing two keys, `input_ids` and `attention_mask`. `input_ids` contains two rows of integers (one for each sentence) that are the unique identifiers of the tokens in each sentence. We'll explain what the `attention_mask` is later in this chapter.

## Going through the model[[going-through-the-model]]

-{#if fw === 'pt'}

We can download our pretrained model the same way we did with our tokenizer. 🤗 Transformers provides an `AutoModel` class which also has a `from_pretrained()` method:

```python

@@ -158,16 +104,6 @@ from transformers import AutoModel

checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

model = AutoModel.from_pretrained(checkpoint)

```

-{:else}

-We can download our pretrained model the same way we did with our tokenizer. 🤗 Transformers provides an `TFAutoModel` class which also has a `from_pretrained` method:

-

-```python

-from transformers import TFAutoModel

-

-checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

-model = TFAutoModel.from_pretrained(checkpoint)

-```

-{/if}

In this code snippet, we have downloaded the same checkpoint we used in our pipeline before (it should actually have been cached already) and instantiated a model with it.

@@ -189,7 +125,6 @@ It is said to be "high dimensional" because of the last value. The hidden size c

We can see this if we feed the inputs we preprocessed to our model:

-{#if fw === 'pt'}

```python

outputs = model(**inputs)

print(outputs.last_hidden_state.shape)

@@ -198,16 +133,6 @@ print(outputs.last_hidden_state.shape)

```python out

torch.Size([2, 16, 768])

```

-{:else}

-```py

-outputs = model(inputs)

-print(outputs.last_hidden_state.shape)

-```

-

-```python out

-(2, 16, 768)

-```

-{/if}

Note that the outputs of 🤗 Transformers models behave like `namedtuple`s or dictionaries. You can access the elements by attributes (like we did) or by key (`outputs["last_hidden_state"]`), or even by index if you know exactly where the thing you are looking for is (`outputs[0]`).

@@ -235,7 +160,6 @@ There are many different architectures available in 🤗 Transformers, with each

- `*ForTokenClassification`

- and others 🤗

-{#if fw === 'pt'}

For our example, we will need a model with a sequence classification head (to be able to classify the sentences as positive or negative). So, we won't actually use the `AutoModel` class, but `AutoModelForSequenceClassification`:

```python

@@ -245,17 +169,6 @@ checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

model = AutoModelForSequenceClassification.from_pretrained(checkpoint)

outputs = model(**inputs)

```

-{:else}

-For our example, we will need a model with a sequence classification head (to be able to classify the sentences as positive or negative). So, we won't actually use the `TFAutoModel` class, but `TFAutoModelForSequenceClassification`:

-

-```python

-from transformers import TFAutoModelForSequenceClassification

-

-checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

-model = TFAutoModelForSequenceClassification.from_pretrained(checkpoint)

-outputs = model(inputs)

-```

-{/if}

Now if we look at the shape of our outputs, the dimensionality will be much lower: the model head takes as input the high-dimensional vectors we saw before, and outputs vectors containing two values (one per label):

@@ -263,15 +176,9 @@ Now if we look at the shape of our outputs, the dimensionality will be much lowe

print(outputs.logits.shape)

```

-{#if fw === 'pt'}

```python out

torch.Size([2, 2])

```

-{:else}

-```python out

-(2, 2)

-```

-{/if}

Since we have just two sentences and two labels, the result we get from our model is of shape 2 x 2.

@@ -283,49 +190,24 @@ The values we get as output from our model don't necessarily make sense by thems

print(outputs.logits)

```

-{#if fw === 'pt'}

```python out

tensor([[-1.5607, 1.6123],

[ 4.1692, -3.3464]], grad_fn=)

```

-{:else}

-```python out

-

-```

-{/if}

Our model predicted `[-1.5607, 1.6123]` for the first sentence and `[ 4.1692, -3.3464]` for the second one. Those are not probabilities but *logits*, the raw, unnormalized scores outputted by the last layer of the model. To be converted to probabilities, they need to go through a [SoftMax](https://en.wikipedia.org/wiki/Softmax_function) layer (all 🤗 Transformers models output the logits, as the loss function for training will generally fuse the last activation function, such as SoftMax, with the actual loss function, such as cross entropy):

-{#if fw === 'pt'}

```py

import torch

predictions = torch.nn.functional.softmax(outputs.logits, dim=-1)

print(predictions)

```

-{:else}

-```py

-import tensorflow as tf

-

-predictions = tf.math.softmax(outputs.logits, axis=-1)

-print(predictions)

-```

-{/if}

-{#if fw === 'pt'}

```python out

tensor([[4.0195e-02, 9.5980e-01],

[9.9946e-01, 5.4418e-04]], grad_fn=)

```

-{:else}

-```python out

-tf.Tensor(

-[[4.01951671e-02 9.59804833e-01]

- [9.9945587e-01 5.4418424e-04]], shape=(2, 2), dtype=float32)

-```

-{/if}

Now we can see that the model predicted `[0.0402, 0.9598]` for the first sentence and `[0.9995, 0.0005]` for the second one. These are recognizable probability scores.

diff --git a/chapters/en/chapter2/3.mdx b/chapters/en/chapter2/3.mdx

index acc653704..91d3ddd00 100644

--- a/chapters/en/chapter2/3.mdx

+++ b/chapters/en/chapter2/3.mdx

@@ -1,8 +1,6 @@

-# Models[[models]]

-

-{#if fw === 'pt'}

+# Models[[the-models]]

-{:else}

+

-

+In this section, we'll take a closer look at creating and using models. We'll use the `AutoModel` class, which is handy when you want to instantiate any model from a checkpoint.

-{/if}

+## Creating a Transformer[[creating-a-transformer]]

-{#if fw === 'pt'}

-

-{:else}

-

-{/if}

+Let's begin by examining what happens when we instantiate an `AutoModel`:

-{#if fw === 'pt'}

-In this section we'll take a closer look at creating and using a model. We'll use the `AutoModel` class, which is handy when you want to instantiate any model from a checkpoint.

+```py

+from transformers import AutoModel

-The `AutoModel` class and all of its relatives are actually simple wrappers over the wide variety of models available in the library. It's a clever wrapper as it can automatically guess the appropriate model architecture for your checkpoint, and then instantiates a model with this architecture.

+model = AutoModel.from_pretrained("bert-base-cased")

+```

-{:else}

-In this section we'll take a closer look at creating and using a model. We'll use the `TFAutoModel` class, which is handy when you want to instantiate any model from a checkpoint.

+Similar to the tokenizer, the `from_pretrained()` method will download and cache the model data from the Hugging Face Hub. As mentioned previously, the checkpoint name corresponds to a specific model architecture and weights, in this case a BERT model with a basic architecture (12 layers, 768 hidden size, 12 attention heads) and cased inputs (meaning that the uppercase/lowercase distinction is important). There are many checkpoints available on the Hub — you can explore them [here](https://huggingface.co/models).

-The `TFAutoModel` class and all of its relatives are actually simple wrappers over the wide variety of models available in the library. It's a clever wrapper as it can automatically guess the appropriate model architecture for your checkpoint, and then instantiates a model with this architecture.

+The `AutoModel` class and its associates are actually simple wrappers designed to fetch the appropriate model architecture for a given checkpoint. It's an "auto" class meaning it will guess the appropriate model architecture for you and instantiate the correct model class. However, if you know the type of model you want to use, you can use the class that defines its architecture directly:

-{/if}

+```py

+from transformers import BertModel

-However, if you know the type of model you want to use, you can use the class that defines its architecture directly. Let's take a look at how this works with a BERT model.

+model = BertModel.from_pretrained("bert-base-cased")

+```

-## Creating a Transformer[[creating-a-transformer]]

+## Loading and saving[[loading-and-saving]]

-The first thing we'll need to do to initialize a BERT model is load a configuration object:

+Saving a model is as simple as saving a tokenizer. In fact, the models actually have the same `save_pretrained()` method, which saves the model's weights and architecture configuration:

-{#if fw === 'pt'}

```py

-from transformers import BertConfig, BertModel

+model.save_pretrained("directory_on_my_computer")

+```

-# Building the config

-config = BertConfig()

+This will save two files to your disk:

-# Building the model from the config

-model = BertModel(config)

```

-{:else}

+ls directory_on_my_computer

+

+config.json pytorch_model.bin

+```

+

+If you look inside the *config.json* file, you'll see all the necessary attributes needed to build the model architecture. This file also contains some metadata, such as where the checkpoint originated and what 🤗 Transformers version you were using when you last saved the checkpoint.

+

+The *pytorch_model.bin* file is known as the state dictionary; it contains all your model's weights. The two files work together: the configuration file is needed to know about the model architecture, while the model weights are the parameters of the model.

+

+To reuse a saved model, use the `from_pretrained()` method again:

+

```py

-from transformers import BertConfig, TFBertModel

+from transformers import AutoModel

+

+model = AutoModel.from_pretrained("directory_on_my_computer")

+```

+

+A wonderful feature of the 🤗 Transformers library is the ability to easily share models and tokenizers with the community. To do this, make sure you have an account on [Hugging Face](https://huggingface.co). If you're using a notebook, you can easily log in with this:

-# Building the config

-config = BertConfig()

+```python

+from huggingface_hub import notebook_login

-# Building the model from the config

-model = TFBertModel(config)

+notebook_login()

```

-{/if}

-The configuration contains many attributes that are used to build the model:

+Otherwise, at your terminal run:

+

+```bash

+huggingface-cli login

+```

+

+Then you can push the model to the Hub with the `push_to_hub()` method:

```py

-print(config)

+model.push_to_hub("my-awesome-model")

```

-```python out

-BertConfig {

- [...]

- "hidden_size": 768,

- "intermediate_size": 3072,

- "max_position_embeddings": 512,

- "num_attention_heads": 12,

- "num_hidden_layers": 12,

- [...]

-}

+This will upload the model files to the Hub, in a repository under your namespace named *my-awesome-model*. Then, anyone can load your model with the `from_pretrained()` method!

+

+```py

+from transformers import AutoModel

+

+model = AutoModel.from_pretrained("your-username/my-awesome-model")

```

-While you haven't seen what all of these attributes do yet, you should recognize some of them: the `hidden_size` attribute defines the size of the `hidden_states` vector, and `num_hidden_layers` defines the number of layers the Transformer model has.

+You can do a lot more with the Hub API:

+- Push a model from a local repository

+- Update specific files without re-uploading everything

+- Add model cards to document the model's abilities, limitations, known biases, etc.

-### Different loading methods[[different-loading-methods]]

+See [the documentation](https://huggingface.co/docs/huggingface_hub/how-to-upstream) for a complete tutorial on this, or check out the advanced [Chapter 4](/course/chapter4).

-Creating a model from the default configuration initializes it with random values:

+## Encoding text[[encoding-text]]

+

+Transformer models handle text by turning the inputs into numbers. Here we will look at exactly what happens when your text is processed by the tokenizer. We've already seen in [Chapter 1](/course/chapter1) that tokenizers split the text into tokens and then convert these tokens into numbers. We can see this conversion through a simple tokenizer:

-{#if fw === 'pt'}

```py

-from transformers import BertConfig, BertModel

+from transformers import AutoTokenizer

-config = BertConfig()

-model = BertModel(config)

+tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

-# Model is randomly initialized!

+encoded_input = tokenizer("Hello, I'm a single sentence!")

+print(encoded_input)

```

-{:else}

-```py

-from transformers import BertConfig, TFBertModel

-config = BertConfig()

-model = TFBertModel(config)

+```python out

+{'input_ids': [101, 8667, 117, 1000, 1045, 1005, 1049, 2235, 17662, 12172, 1012, 102],

+ 'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

+ 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]}

+```

+

+We get a dictionary with the following fields:

+- input_ids: numerical representations of your tokens

+- token_type_ids: these tell the model which part of the input is sentence A and which is sentence B (discussed more in the next section)

+- attention_mask: this indicates which tokens should be attended to and which should not (discussed more in a bit)

+

+We can decode the input IDs to get back the original text:

+

+```py

+tokenizer.decode(encoded_input["input_ids"])

+```

-# Model is randomly initialized!

+```python out

+"[CLS] Hello, I'm a single sentence! [SEP]"

```

-{/if}

-The model can be used in this state, but it will output gibberish; it needs to be trained first. We could train the model from scratch on the task at hand, but as you saw in [Chapter 1](/course/chapter1), this would require a long time and a lot of data, and it would have a non-negligible environmental impact. To avoid unnecessary and duplicated effort, it's imperative to be able to share and reuse models that have already been trained.

+You'll notice that the tokenizer has added special tokens — `[CLS]` and `[SEP]` — required by the model. Not all models need special tokens; they're utilized when a model was pretrained with them, in which case the tokenizer needs to add them as that model expects these tokens.

-Loading a Transformer model that is already trained is simple — we can do this using the `from_pretrained()` method:

+You can encode multiple sentences at once, either by batching them together (we'll discuss this soon) or by passing a list:

-{#if fw === 'pt'}

```py

-from transformers import BertModel

+encoded_input = tokenizer("How are you?", "I'm fine, thank you!")

+print(encoded_input)

+```

-model = BertModel.from_pretrained("bert-base-cased")

+```python out

+{'input_ids': [[101, 1731, 1132, 1128, 136, 102], [101, 1045, 1005, 1049, 2503, 117, 5763, 1128, 136, 102]],

+ 'token_type_ids': [[0, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]],

+ 'attention_mask': [[1, 1, 1, 1, 1, 1], [1, 1, 1, 1, 1, 1, 1, 1, 1, 1]]}

```

-As you saw earlier, we could replace `BertModel` with the equivalent `AutoModel` class. We'll do this from now on as this produces checkpoint-agnostic code; if your code works for one checkpoint, it should work seamlessly with another. This applies even if the architecture is different, as long as the checkpoint was trained for a similar task (for example, a sentiment analysis task).

+Note that when passing multiple sentences, the tokenizer returns a list for each sentence for each dictionary value. We can also ask the tokenizer to return tensors directly from PyTorch:

-{:else}

```py

-from transformers import TFBertModel

+encoded_input = tokenizer("How are you?", "I'm fine, thank you!", return_tensors="pt")

+print(encoded_input)

+```

-model = TFBertModel.from_pretrained("bert-base-cased")

+```python out

+{'input_ids': tensor([[ 101, 1731, 1132, 1128, 136, 102],

+ [ 101, 1045, 1005, 1049, 2503, 117, 5763, 1128, 136, 102]]),

+ 'token_type_ids': tensor([[0, 0, 0, 0, 0, 0],

+ [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]]),

+ 'attention_mask': tensor([[1, 1, 1, 1, 1, 1],

+ [1, 1, 1, 1, 1, 1, 1, 1, 1, 1]])}

```

-As you saw earlier, we could replace `TFBertModel` with the equivalent `TFAutoModel` class. We'll do this from now on as this produces checkpoint-agnostic code; if your code works for one checkpoint, it should work seamlessly with another. This applies even if the architecture is different, as long as the checkpoint was trained for a similar task (for example, a sentiment analysis task).

+But there's a problem: the two lists don't have the same length! Arrays and tensors need to be rectangular, so we can't simply convert these lists to a PyTorch tensor (or NumPy array). The tokenizer provides an option for that: padding.

-{/if}

+### Padding inputs[[padding-inputs]]

-In the code sample above we didn't use `BertConfig`, and instead loaded a pretrained model via the `bert-base-cased` identifier. This is a model checkpoint that was trained by the authors of BERT themselves; you can find more details about it in its [model card](https://huggingface.co/bert-base-cased).

+If we ask the tokenizer to pad the inputs, it will make all sentences the same length by adding a special padding token to the sentences that are shorter than the longest one:

-This model is now initialized with all the weights of the checkpoint. It can be used directly for inference on the tasks it was trained on, and it can also be fine-tuned on a new task. By training with pretrained weights rather than from scratch, we can quickly achieve good results.

+```py

+encoded_input = tokenizer(

+ ["How are you?", "I'm fine, thank you!"], padding=True, return_tensors="pt"

+)

+print(encoded_input)

+```

-The weights have been downloaded and cached (so future calls to the `from_pretrained()` method won't re-download them) in the cache folder, which defaults to *~/.cache/huggingface/transformers*. You can customize your cache folder by setting the `HF_HOME` environment variable.

+```python out

+{'input_ids': tensor([[ 101, 1731, 1132, 1128, 136, 102, 0, 0, 0, 0],

+ [ 101, 1045, 1005, 1049, 2503, 117, 5763, 1128, 136, 102]]),

+ 'token_type_ids': tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0],

+ [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]]),

+ 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 0, 0, 0, 0],

+ [1, 1, 1, 1, 1, 1, 1, 1, 1, 1]])}

+```

-The identifier used to load the model can be the identifier of any model on the Model Hub, as long as it is compatible with the BERT architecture. The entire list of available BERT checkpoints can be found [here](https://huggingface.co/models?filter=bert).

+Now we have rectangular tensors! Note that the padding tokens have been encoded into input IDs with ID 0, and they have an attention mask value of 0 as well. This is because those padding tokens shouldn't be analyzed by the model: they're not part of the actual sentence.

-### Saving methods[[saving-methods]]

+### Truncating inputs[[truncating-inputs]]

-Saving a model is as easy as loading one — we use the `save_pretrained()` method, which is analogous to the `from_pretrained()` method:

+The tensors might get too big to be processed by the model. For instance, BERT was only pretrained with sequences up to 512 tokens, so it cannot process longer sequences. If you have sequences longer than the model can handle, you'll need to truncate them with the `truncation` parameter:

```py

-model.save_pretrained("directory_on_my_computer")

+encoded_input = tokenizer(

+ "This is a very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very very long sentence.",

+ truncation=True,

+)

+print(encoded_input["input_ids"])

```

-This saves two files to your disk:

-

-{#if fw === 'pt'}

+```python out

+[101, 1188, 1110, 170, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1505, 1179, 5650, 119, 102]

```

-ls directory_on_my_computer

-config.json pytorch_model.bin

-```

-{:else}

-```

-ls directory_on_my_computer

+By combining the padding and truncation arguments, you can make sure your tensors have the exact size you need:

-config.json tf_model.h5

+```py

+encoded_input = tokenizer(

+ ["How are you?", "I'm fine, thank you!"],

+ padding=True,

+ truncation=True,

+ max_length=5,

+ return_tensors="pt",

+)

+print(encoded_input)

```

-{/if}

-If you take a look at the *config.json* file, you'll recognize the attributes necessary to build the model architecture. This file also contains some metadata, such as where the checkpoint originated and what 🤗 Transformers version you were using when you last saved the checkpoint.

+```python out

+{'input_ids': tensor([[ 101, 1731, 1132, 1128, 102],

+ [ 101, 1045, 1005, 1049, 102]]),

+ 'token_type_ids': tensor([[0, 0, 0, 0, 0],

+ [0, 0, 0, 0, 0]]),

+ 'attention_mask': tensor([[1, 1, 1, 1, 1],

+ [1, 1, 1, 1, 1]])}

+```

-{#if fw === 'pt'}

-The *pytorch_model.bin* file is known as the *state dictionary*; it contains all your model's weights. The two files go hand in hand; the configuration is necessary to know your model's architecture, while the model weights are your model's parameters.

+### Adding special tokens

-{:else}

-The *tf_model.h5* file is known as the *state dictionary*; it contains all your model's weights. The two files go hand in hand; the configuration is necessary to know your model's architecture, while the model weights are your model's parameters.

+Special tokens (or at least the concept of them) is particularly important to BERT and derived models. These tokens are added to better represent the sentence boundaries, such as the beginning of a sentence (`[CLS]`) or separator between sentences (`[SEP]`). Let's look at a simple example:

-{/if}

+```py

+encoded_input = tokenizer("How are you?")

+print(encoded_input["input_ids"])

+tokenizer.decode(encoded_input["input_ids"])

+```

-## Using a Transformer model for inference[[using-a-transformer-model-for-inference]]

+```python out

+[101, 1731, 1132, 1128, 136, 102]

+'[CLS] How are you? [SEP]'

+```

-Now that you know how to load and save a model, let's try using it to make some predictions. Transformer models can only process numbers — numbers that the tokenizer generates. But before we discuss tokenizers, let's explore what inputs the model accepts.

+These special tokens are automatically added by the tokenizer. Not all models need special tokens; they are primarily used when a model was pretrained with them, in which case the tokenizer will add them since the model expects them.

-Tokenizers can take care of casting the inputs to the appropriate framework's tensors, but to help you understand what's going on, we'll take a quick look at what must be done before sending the inputs to the model.

+### Why is all of this necessary?

-Let's say we have a couple of sequences:

+Here's a concrete example. Consider these encoded sequences:

```py

-sequences = ["Hello!", "Cool.", "Nice!"]

+sequences = [

+ "I've been waiting for a HuggingFace course my whole life.",

+ "I hate this so much!",

+]

```

-The tokenizer converts these to vocabulary indices which are typically called *input IDs*. Each sequence is now a list of numbers! The resulting output is:

+Once tokenized, we have:

-```py no-format

+```python

encoded_sequences = [

- [101, 7592, 999, 102],

- [101, 4658, 1012, 102],

- [101, 3835, 999, 102],

+ [

+ 101,

+ 1045,

+ 1005,

+ 2310,

+ 2042,

+ 3403,

+ 2005,

+ 1037,

+ 17662,

+ 12172,

+ 2607,

+ 2026,

+ 2878,

+ 2166,

+ 1012,

+ 102,

+ ],

+ [101, 1045, 5223, 2023, 2061, 2172, 999, 102],

]

```

This is a list of encoded sequences: a list of lists. Tensors only accept rectangular shapes (think matrices). This "array" is already of rectangular shape, so converting it to a tensor is easy:

-{#if fw === 'pt'}

```py

import torch

model_inputs = torch.tensor(encoded_sequences)

```

-{:else}

-```py

-import tensorflow as tf

-

-model_inputs = tf.constant(encoded_sequences)

-```

-{/if}

### Using the tensors as inputs to the model[[using-the-tensors-as-inputs-to-the-model]]

diff --git a/chapters/en/chapter2/4.mdx b/chapters/en/chapter2/4.mdx

index 30167ddbd..d07264690 100644

--- a/chapters/en/chapter2/4.mdx

+++ b/chapters/en/chapter2/4.mdx

@@ -2,8 +2,6 @@

# Tokenizers[[tokenizers]]

-{#if fw === 'pt'}

-

-{:else}

-

-

-

-{/if}

-

Tokenizers are one of the core components of the NLP pipeline. They serve one purpose: to translate text into data that can be processed by the model. Models can only process numbers, so tokenizers need to convert our text inputs to numerical data. In this section, we'll explore exactly what happens in the tokenization pipeline.

@@ -131,14 +118,8 @@ from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained("bert-base-cased")

```

-{#if fw === 'pt'}

Similar to `AutoModel`, the `AutoTokenizer` class will grab the proper tokenizer class in the library based on the checkpoint name, and can be used directly with any checkpoint:

-{:else}

-Similar to `TFAutoModel`, the `AutoTokenizer` class will grab the proper tokenizer class in the library based on the checkpoint name, and can be used directly with any checkpoint:

-

-{/if}

-

```py

from transformers import AutoTokenizer

diff --git a/chapters/en/chapter2/5.mdx b/chapters/en/chapter2/5.mdx

index 33060505b..299a15c5f 100644

--- a/chapters/en/chapter2/5.mdx

+++ b/chapters/en/chapter2/5.mdx

@@ -2,8 +2,6 @@

# Handling multiple sequences[[handling-multiple-sequences]]

-{#if fw === 'pt'}

-

-{:else}

-

-

-

-{/if}

-

-{#if fw === 'pt'}

-{:else}

-

-{/if}

In the previous section, we explored the simplest of use cases: doing inference on a single sequence of a small length. However, some questions emerge already:

@@ -41,7 +24,6 @@ Let's see what kinds of problems these questions pose, and how we can solve them

In the previous exercise you saw how sequences get translated into lists of numbers. Let's convert this list of numbers to a tensor and send it to the model:

-{#if fw === 'pt'}

```py

import torch

from transformers import AutoTokenizer, AutoModelForSequenceClassification

@@ -62,34 +44,11 @@ model(input_ids)

```python out

IndexError: Dimension out of range (expected to be in range of [-1, 0], but got 1)

```

-{:else}

-```py

-import tensorflow as tf

-from transformers import AutoTokenizer, TFAutoModelForSequenceClassification

-

-checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

-tokenizer = AutoTokenizer.from_pretrained(checkpoint)

-model = TFAutoModelForSequenceClassification.from_pretrained(checkpoint)

-

-sequence = "I've been waiting for a HuggingFace course my whole life."

-

-tokens = tokenizer.tokenize(sequence)

-ids = tokenizer.convert_tokens_to_ids(tokens)

-input_ids = tf.constant(ids)

-# This line will fail.

-model(input_ids)

-```

-

-```py out

-InvalidArgumentError: Input to reshape is a tensor with 14 values, but the requested shape has 196 [Op:Reshape]

-```

-{/if}

Oh no! Why did this fail? We followed the steps from the pipeline in section 2.

The problem is that we sent a single sequence to the model, whereas 🤗 Transformers models expect multiple sentences by default. Here we tried to do everything the tokenizer did behind the scenes when we applied it to a `sequence`. But if you look closely, you'll see that the tokenizer didn't just convert the list of input IDs into a tensor, it added a dimension on top of it:

-{#if fw === 'pt'}

```py

tokenized_inputs = tokenizer(sequence, return_tensors="pt")

print(tokenized_inputs["input_ids"])

@@ -99,22 +58,9 @@ print(tokenized_inputs["input_ids"])

tensor([[ 101, 1045, 1005, 2310, 2042, 3403, 2005, 1037, 17662, 12172,

2607, 2026, 2878, 2166, 1012, 102]])

```

-{:else}

-```py

-tokenized_inputs = tokenizer(sequence, return_tensors="tf")

-print(tokenized_inputs["input_ids"])

-```

-

-```py out

-

-```

-{/if}

Let's try again and add a new dimension:

-{#if fw === 'pt'}

```py

import torch

from transformers import AutoTokenizer, AutoModelForSequenceClassification

@@ -134,43 +80,13 @@ print("Input IDs:", input_ids)

output = model(input_ids)

print("Logits:", output.logits)

```

-{:else}

-```py

-import tensorflow as tf

-from transformers import AutoTokenizer, TFAutoModelForSequenceClassification

-

-checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

-tokenizer = AutoTokenizer.from_pretrained(checkpoint)

-model = TFAutoModelForSequenceClassification.from_pretrained(checkpoint)

-

-sequence = "I've been waiting for a HuggingFace course my whole life."

-

-tokens = tokenizer.tokenize(sequence)

-ids = tokenizer.convert_tokens_to_ids(tokens)

-

-input_ids = tf.constant([ids])

-print("Input IDs:", input_ids)

-

-output = model(input_ids)

-print("Logits:", output.logits)

-```

-{/if}

We print the input IDs as well as the resulting logits — here's the output:

-{#if fw === 'pt'}

```python out

Input IDs: [[ 1045, 1005, 2310, 2042, 3403, 2005, 1037, 17662, 12172, 2607, 2026, 2878, 2166, 1012]]

Logits: [[-2.7276, 2.8789]]

```

-{:else}

-```py out

-Input IDs: tf.Tensor(

-[[ 1045 1005 2310 2042 3403 2005 1037 17662 12172 2607 2026 2878

- 2166 1012]], shape=(1, 14), dtype=int32)

-Logits: tf.Tensor([[-2.7276208 2.8789377]], shape=(1, 2), dtype=float32)

-```

-{/if}

*Batching* is the act of sending multiple sentences through the model, all at once. If you only have one sentence, you can just build a batch with a single sequence:

@@ -212,7 +128,6 @@ batched_ids = [

The padding token ID can be found in `tokenizer.pad_token_id`. Let's use it and send our two sentences through the model individually and batched together:

-{#if fw === 'pt'}

```py no-format

model = AutoModelForSequenceClassification.from_pretrained(checkpoint)

@@ -234,30 +149,6 @@ tensor([[ 0.5803, -0.4125]], grad_fn=)

tensor([[ 1.5694, -1.3895],

[ 1.3373, -1.2163]], grad_fn=)

```

-{:else}

-```py no-format

-model = TFAutoModelForSequenceClassification.from_pretrained(checkpoint)

-

-sequence1_ids = [[200, 200, 200]]

-sequence2_ids = [[200, 200]]

-batched_ids = [

- [200, 200, 200],

- [200, 200, tokenizer.pad_token_id],

-]

-

-print(model(tf.constant(sequence1_ids)).logits)

-print(model(tf.constant(sequence2_ids)).logits)

-print(model(tf.constant(batched_ids)).logits)

-```

-

-```py out

-tf.Tensor([[ 1.5693678 -1.3894581]], shape=(1, 2), dtype=float32)

-tf.Tensor([[ 0.5803005 -0.41252428]], shape=(1, 2), dtype=float32)

-tf.Tensor(

-[[ 1.5693681 -1.3894582]

- [ 1.3373486 -1.2163193]], shape=(2, 2), dtype=float32)

-```

-{/if}

There's something wrong with the logits in our batched predictions: the second row should be the same as the logits for the second sentence, but we've got completely different values!

@@ -269,7 +160,6 @@ This is because the key feature of Transformer models is attention layers that *

Let's complete the previous example with an attention mask:

-{#if fw === 'pt'}

```py no-format

batched_ids = [

[200, 200, 200],

@@ -289,28 +179,6 @@ print(outputs.logits)

tensor([[ 1.5694, -1.3895],

[ 0.5803, -0.4125]], grad_fn=)

```

-{:else}

-```py no-format

-batched_ids = [

- [200, 200, 200],

- [200, 200, tokenizer.pad_token_id],

-]

-

-attention_mask = [

- [1, 1, 1],

- [1, 1, 0],

-]

-

-outputs = model(tf.constant(batched_ids), attention_mask=tf.constant(attention_mask))

-print(outputs.logits)

-```

-

-```py out

-tf.Tensor(

-[[ 1.5693681 -1.3894582 ]

- [ 0.5803021 -0.41252586]], shape=(2, 2), dtype=float32)

-```

-{/if}

Now we get the same logits for the second sentence in the batch.

diff --git a/chapters/en/chapter2/6.mdx b/chapters/en/chapter2/6.mdx

index d26118501..3a0dac876 100644

--- a/chapters/en/chapter2/6.mdx

+++ b/chapters/en/chapter2/6.mdx

@@ -2,8 +2,6 @@

# Putting it all together[[putting-it-all-together]]

-{#if fw === 'pt'}

-

-{:else}

-

-

-

-{/if}

-

In the last few sections, we've been trying our best to do most of the work by hand. We've explored how tokenizers work and looked at tokenization, conversion to input IDs, padding, truncation, and attention masks.

However, as we saw in section 2, the 🤗 Transformers API can handle all of this for us with a high-level function that we'll dive into here. When you call your `tokenizer` directly on the sentence, you get back inputs that are ready to pass through your model:

@@ -82,7 +69,7 @@ model_inputs = tokenizer(sequences, truncation=True)

model_inputs = tokenizer(sequences, max_length=8, truncation=True)

```

-The `tokenizer` object can handle the conversion to specific framework tensors, which can then be directly sent to the model. For example, in the following code sample we are prompting the tokenizer to return tensors from the different frameworks — `"pt"` returns PyTorch tensors, `"tf"` returns TensorFlow tensors, and `"np"` returns NumPy arrays:

+The `tokenizer` object can handle the conversion to specific framework tensors, which can then be directly sent to the model. For example, in the following code sample we are prompting the tokenizer to return tensors from the different frameworks — `"pt"` returns PyTorch tensors and `"np"` returns NumPy arrays:

```py

sequences = ["I've been waiting for a HuggingFace course my whole life.", "So have I!"]

@@ -90,9 +77,6 @@ sequences = ["I've been waiting for a HuggingFace course my whole life.", "So ha

# Returns PyTorch tensors

model_inputs = tokenizer(sequences, padding=True, return_tensors="pt")

-# Returns TensorFlow tensors

-model_inputs = tokenizer(sequences, padding=True, return_tensors="tf")

-

# Returns NumPy arrays

model_inputs = tokenizer(sequences, padding=True, return_tensors="np")

```

@@ -135,7 +119,6 @@ The tokenizer added the special word `[CLS]` at the beginning and the special wo

Now that we've seen all the individual steps the `tokenizer` object uses when applied on texts, let's see one final time how it can handle multiple sequences (padding!), very long sequences (truncation!), and multiple types of tensors with its main API:

-{#if fw === 'pt'}

```py

import torch

from transformers import AutoTokenizer, AutoModelForSequenceClassification

@@ -148,17 +131,3 @@ sequences = ["I've been waiting for a HuggingFace course my whole life.", "So ha

tokens = tokenizer(sequences, padding=True, truncation=True, return_tensors="pt")

output = model(**tokens)

```

-{:else}

-```py

-import tensorflow as tf

-from transformers import AutoTokenizer, TFAutoModelForSequenceClassification

-

-checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

-tokenizer = AutoTokenizer.from_pretrained(checkpoint)

-model = TFAutoModelForSequenceClassification.from_pretrained(checkpoint)

-sequences = ["I've been waiting for a HuggingFace course my whole life.", "So have I!"]

-

-tokens = tokenizer(sequences, padding=True, truncation=True, return_tensors="tf")

-output = model(**tokens)

-```

-{/if}

diff --git a/chapters/en/chapter2/8.mdx b/chapters/en/chapter2/8.mdx

index c41f27936..f2f7ab431 100644

--- a/chapters/en/chapter2/8.mdx

+++ b/chapters/en/chapter2/8.mdx

@@ -1,310 +1,821 @@

-

-

-

-

-# End-of-chapter quiz[[end-of-chapter-quiz]]

-

-

-

-### 1. What is the order of the language modeling pipeline?

-

-

-

-### 2. How many dimensions does the tensor output by the base Transformer model have, and what are they?

-

-

-

-### 3. Which of the following is an example of subword tokenization?

-

-

-

-### 4. What is a model head?

-

-

-

-{#if fw === 'pt'}

-### 5. What is an AutoModel?

-

-AutoTrain product?"

- },

- {

- text: "An object that returns the correct architecture based on the checkpoint",

- explain: "Exactly: the AutoModel only needs to know the checkpoint from which to initialize to return the correct architecture.",

- correct: true

- },

- {

- text: "A model that automatically detects the language used for its inputs to load the correct weights",

- explain: "Incorrect; while some checkpoints and models are capable of handling multiple languages, there are no built-in tools for automatic checkpoint selection according to language. You should head over to the Model Hub to find the best checkpoint for your task!"

- }

- ]}

-/>

-

-{:else}

-### 5. What is an TFAutoModel?

-

-AutoTrain product?"

- },

- {

- text: "An object that returns the correct architecture based on the checkpoint",

- explain: "Exactly: the TFAutoModel only needs to know the checkpoint from which to initialize to return the correct architecture.",

- correct: true

- },

- {

- text: "A model that automatically detects the language used for its inputs to load the correct weights",

- explain: "Incorrect; while some checkpoints and models are capable of handling multiple languages, there are no built-in tools for automatic checkpoint selection according to language. You should head over to the Model Hub to find the best checkpoint for your task!"

- }

- ]}

-/>

-

-{/if}

-

-### 6. What are the techniques to be aware of when batching sequences of different lengths together?

-

-

-

-### 7. What is the point of applying a SoftMax function to the logits output by a sequence classification model?

-

-

-

-### 8. What method is most of the tokenizer API centered around?

-

-encode, as it can encode text into IDs and IDs into predictions",

- explain: "Wrong! While the encode method does exist on tokenizers, it does not exist on models."

- },

- {

- text: "Calling the tokenizer object directly.",

- explain: "Exactly! The __call__ method of the tokenizer is a very powerful method which can handle pretty much anything. It is also the method used to retrieve predictions from a model.",

- correct: true

- },

- {

- text: "pad",

- explain: "Wrong! Padding is very useful, but it's just one part of the tokenizer API."

- },

- {

- text: "tokenize",

- explain: "The tokenize method is arguably one of the most useful methods, but it isn't the core of the tokenizer API."

- }

- ]}

-/>

-

-### 9. What does the `result` variable contain in this code sample?

-

-```py

-from transformers import AutoTokenizer

-

-tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

-result = tokenizer.tokenize("Hello!")

-```

-

-__call__ or convert_tokens_to_ids method is for!"

- },

- {

- text: "A string containing all of the tokens",

- explain: "This would be suboptimal, as the goal is to split the string into multiple tokens."

- }

- ]}

-/>

-

-{#if fw === 'pt'}

-### 10. Is there something wrong with the following code?

-

-```py

-from transformers import AutoTokenizer, AutoModel

-

-tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

-model = AutoModel.from_pretrained("gpt2")

-

-encoded = tokenizer("Hey!", return_tensors="pt")

-result = model(**encoded)

-```

-

-

-

-{:else}

-### 10. Is there something wrong with the following code?

-

-```py

-from transformers import AutoTokenizer, TFAutoModel

-

-tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

-model = TFAutoModel.from_pretrained("gpt2")

-

-encoded = tokenizer("Hey!", return_tensors="pt")

-result = model(**encoded)

-```

-

-

-

-{/if}

+# Optimized Inference Deployment

+

+In this section, we'll explore advanced frameworks for optimizing LLM deployments: Text Generation Inference (TGI), vLLM, and llama.cpp. These applications are primarily used in production environments to serve LLMs to users. This section focuses on how to deploy these frameworks in production rather than how to use them for inference on a single machine.

+

+We'll cover how these tools maximize inference efficiency and simplify production deployments of Large Language Models.

+

+## Framework Selection Guide

+

+TGI, vLLM, and llama.cpp serve similar purposes but have distinct characteristics that make them better suited for different use cases. Let's look at the key differences between them, focusing on performance and integration.

+

+### Memory Management and Performance

+

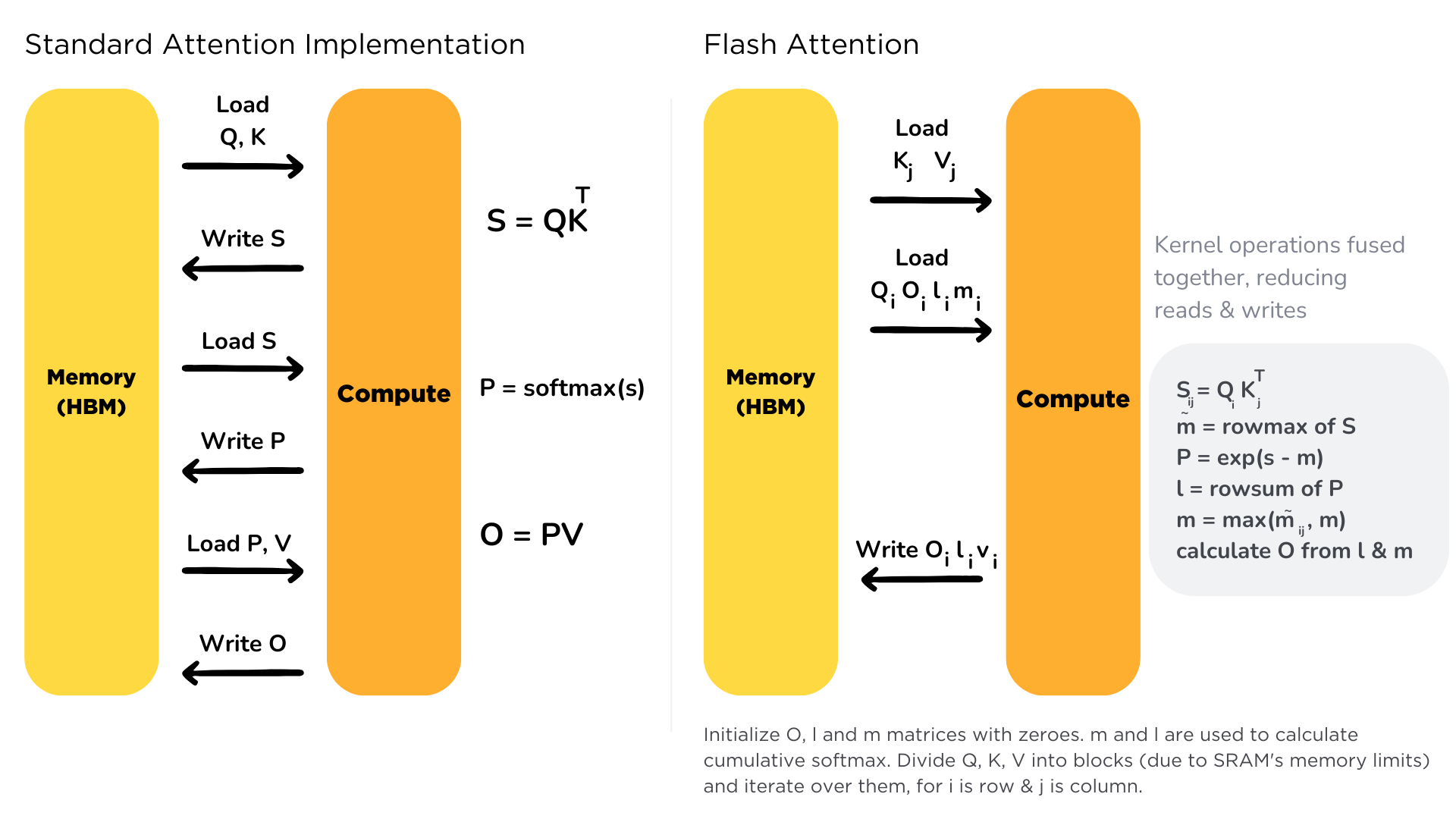

+**TGI** is designed to be stable and predictable in production, using fixed sequence lengths to keep memory usage consistent. TGI manages memory using Flash Attention 2 and continuous batching techniques. This means it can process attention calculations very efficiently and keep the GPU busy by constantly feeding it work. The system can move parts of the model between CPU and GPU when needed, which helps handle larger models.

+

+ +

+

+Flash Attention is a technique that optimizes the attention mechanism in transformer models by addressing memory bandwidth bottlenecks. As discussed earlier in [Chapter 1.8](/course/chapter1/8), the attention mechanism has quadratic complexity and memory usage, making it inefficient for long sequences.

+

+The key innovation is in how it manages memory transfers between High Bandwidth Memory (HBM) and faster SRAM cache. Traditional attention repeatedly transfers data between HBM and SRAM, creating bottlenecks by leaving the GPU idle. Flash Attention loads data once into SRAM and performs all calculations there, minimizing expensive memory transfers.

+

+While the benefits are most significant during training, Flash Attention's reduced VRAM usage and improved efficiency make it valuable for inference as well, enabling faster and more scalable LLM serving.

+

+

+**vLLM** takes a different approach by using PagedAttention. Just like how a computer manages its memory in pages, vLLM splits the model's memory into smaller blocks. This clever system means it can handle different-sized requests more flexibly and doesn't waste memory space. It's particularly good at sharing memory between different requests and reduces memory fragmentation, which makes the whole system more efficient.

+

+

+PagedAttention is a technique that addresses another critical bottleneck in LLM inference: KV cache memory management. As discussed in [Chapter 1.8](/course/chapter1/8), during text generation, the model stores attention keys and values (KV cache) for each generated token to reduce redundant computations. The KV cache can become enormous, especially with long sequences or multiple concurrent requests.

+

+vLLM's key innovation lies in how it manages this cache:

+

+1. **Memory Paging**: Instead of treating the KV cache as one large block, it's divided into fixed-size "pages" (similar to virtual memory in operating systems).

+2. **Non-contiguous Storage**: Pages don't need to be stored contiguously in GPU memory, allowing for more flexible memory allocation.

+3. **Page Table Management**: A page table tracks which pages belong to which sequence, enabling efficient lookup and access.

+4. **Memory Sharing**: For operations like parallel sampling, pages storing the KV cache for the prompt can be shared across multiple sequences.

+

+The PagedAttention approach can lead to up to 24x higher throughput compared to traditional methods, making it a game-changer for production LLM deployments. If you want to go really deep into how PagedAttention works, you can read the [the guide from the vLLM documentation](https://docs.vllm.ai/en/latest/design/kernel/paged_attention.html).

+

+

+**llama.cpp** is a highly optimized C/C++ implementation originally designed for running LLaMA models on consumer hardware. It focuses on CPU efficiency with optional GPU acceleration and is ideal for resource-constrained environments. llama.cpp uses quantization techniques to reduce model size and memory requirements while maintaining good performance. It implements optimized kernels for various CPU architectures and supports basic KV cache management for efficient token generation.

+

+

+Quantization in llama.cpp reduces the precision of model weights from 32-bit or 16-bit floating point to lower precision formats like 8-bit integers (INT8), 4-bit, or even lower. This significantly reduces memory usage and improves inference speed with minimal quality loss.

+

+Key quantization features in llama.cpp include:

+1. **Multiple Quantization Levels**: Supports 8-bit, 4-bit, 3-bit, and even 2-bit quantization

+2. **GGML/GGUF Format**: Uses custom tensor formats optimized for quantized inference

+3. **Mixed Precision**: Can apply different quantization levels to different parts of the model

+4. **Hardware-Specific Optimizations**: Includes optimized code paths for various CPU architectures (AVX2, AVX-512, NEON)

+

+This approach enables running billion-parameter models on consumer hardware with limited memory, making it perfect for local deployments and edge devices.

+

+

+

+

+### Deployment and Integration

+

+Let's move on to the deployment and integration differences between the frameworks.

+